In-Depth Guide to Stable Diffusion Inpainting Techniques

Dive into our comprehensive guide on Stable Diffusion Inpainting Techniques. Understand the use of different inpaint masks, learn how to create masks using segmentation, and discover the power of combining inpainting with ControlNet & IP Adapter.

TL;DR: Learn to alter image backgrounds and foreground objects using Stable Diffusion Inpainting. Uncover how segmentation (SAM) aids in creating inpaint masks, enhancing Stable Diffusion Inpainting’s effectiveness. Lastly, see how combining Stable Diffusion Inpainting with ControlNet and IP Adapter yields superior results.

Stable Diffusion Inpainting is a powerful tool for image editing. It uses a diffusion model to regenerate selected parts of an image so that the new content blends naturally and seamlessly with the rest of the picture.

Inpainting allows you to alter specific parts of an image. It works by using a mask to identify which sections of the image need changes. In these masks, the areas targeted for inpainting are marked with white pixels, while the parts to be preserved are in black. The model then processes these white pixel areas, filling them in accordance with the given prompt.
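To make the white/black convention concrete, here is a minimal pure-Python sketch of how a mask decides which pixels change, assuming the generated image is blended back over the original using the mask as a weight. Images are modelled as nested lists of grayscale values purely for illustration (real pipelines work on RGB tensors and latents), and the function name `composite` is hypothetical.

```python
def composite(original, generated, mask):
    """Blend two same-sized grayscale images using a 0-255 mask.

    White (255) mask pixels take the generated value, black (0) pixels keep
    the original, and gray values blend the two (a soft mask edge).
    """
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("images and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("images and mask must have the same width")
        row = []
        for o, g, m in zip(orig_row, gen_row, mask_row):
            alpha = m / 255  # 1.0 = inpaint (use generated), 0.0 = preserve
            row.append(round(alpha * g + (1 - alpha) * o))
        result.append(row)
    return result


original = [[10, 10, 10],
            [10, 10, 10]]
generated = [[200, 200, 200],
             [200, 200, 200]]
mask = [[0, 255, 0],
        [0, 255, 128]]
print(composite(original, generated, mask))  # [[10, 200, 10], [10, 200, 105]]
```

Only the white column takes the new value; the single gray pixel shows why soft mask edges produce a gradual transition rather than a hard seam.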

Changing Background and Foreground Objects with Stable Diffusion Inpainting

Stable Diffusion Inpainting offers a powerful way to manipulate both the foreground and background of an image.

Changing the Background: If you want to preserve the main subject and replace the background, you inpaint the background. Create an inpaint mask that covers everything except the main subject (or draw a mask over the subject and use it as an invert mask). When you apply Stable Diffusion Inpainting, it fills the masked area (the background) with new content that follows your prompt and blends with the unmasked area (the subject). As a result, a new background is generated while the main subject remains intact.

Changing the Foreground Objects: If, on the other hand, you want to preserve the background and replace the subject, you inpaint the subject. In this case, create an inpaint mask that covers the subject. When you apply Stable Diffusion Inpainting, the model fills the masked area (the subject) with new content that follows your prompt and blends with the surrounding area (the background). Consequently, a new subject is generated while the background remains intact.

In both cases, the key is to create an accurate inpaint mask that covers the area you want to replace. The more precise the mask, the better the inpainting results will be.
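Both cases come down to which region of the mask is white. The sketch below assumes you already have a 0/255 grayscale mask that is white over the main subject (for example, from a segmentation model) and derives the mask to inpaint from it. Masks are plain nested lists, and the function names are hypothetical.

```python
def invert(mask):
    """Swap white (255) and black (0) pixels in a 0/255 mask."""
    return [[255 - value for value in row] for row in mask]


def mask_for_replacement(subject_mask, replace):
    """Return the mask to pass to an inpainting model (white = inpaint).

    subject_mask: 255 where the main subject is, 0 elsewhere.
    replace: "subject" to regenerate the subject and keep the background,
             "background" to regenerate the background and keep the subject.
    """
    if replace == "subject":
        return subject_mask          # white over the subject -> subject is inpainted
    if replace == "background":
        return invert(subject_mask)  # white over the background -> background is inpainted
    raise ValueError("replace must be 'subject' or 'background'")


subject_mask = [[0, 255, 0],
                [0, 255, 0]]
print(mask_for_replacement(subject_mask, "background"))  # [[255, 0, 255], [255, 0, 255]]
```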

Mask and Invert Mask in Stable Diffusion Inpainting

In inpainting, masks play a crucial role. A mask is a black-and-white image laid over the input image that tells Stable Diffusion which regions to fill and which to preserve: white pixels are inpainted and black pixels are preserved. There are two primary types of masks used in this process: Mask and Invert Mask.

Mask: This specifies the areas of the image that need to be inpainted. In other words, Stable Diffusion fills in the areas covered by the mask based on the text prompt. The areas designated for inpainting are marked with white pixels, and the areas to be left unchanged are marked with black pixels.

Invert Mask: Contrary to the mask, an invert mask specifies the areas of the image that need to be preserved, and Stable Diffusion fills in everything not covered by the mask. The roles of the colors are therefore reversed: white pixels mark the areas to keep unchanged, and black pixels mark the areas to be inpainted. In effect, the mask is inverted before inpainting.
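A minimal sketch of how the two modes relate, assuming masks are 0-255 grayscale grids stored as nested lists. Hand-drawn masks often have gray, anti-aliased edges, so the sketch first snaps them to pure black and white. The threshold of 128 and the function names are illustrative assumptions, not settings taken from any particular tool.

```python
def to_binary(mask, threshold=128):
    """Snap a grayscale 0-255 mask to pure black (0) or white (255)."""
    return [[255 if value >= threshold else 0 for value in row] for row in mask]


def effective_inpaint_mask(mask, invert_mask=False, threshold=128):
    """Return the mask whose white pixels will actually be regenerated.

    invert_mask=False: the drawn mask is used as-is (white = inpaint).
    invert_mask=True:  the drawn white area is preserved instead, so the
                       mask is flipped and everything outside it is inpainted.
    """
    binary = to_binary(mask, threshold)
    if invert_mask:
        return [[255 - value for value in row] for row in binary]
    return binary


drawn = [[0, 40, 200, 255]]  # a hand-drawn mask with soft, anti-aliased edges
print(effective_inpaint_mask(drawn))                    # [[0, 0, 255, 255]]
print(effective_inpaint_mask(drawn, invert_mask=True))  # [[255, 255, 0, 0]]
```

The same drawing therefore selects complementary regions depending on the mode, which is why the choice between Mask and Invert Mask matters as much as the drawing itself.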

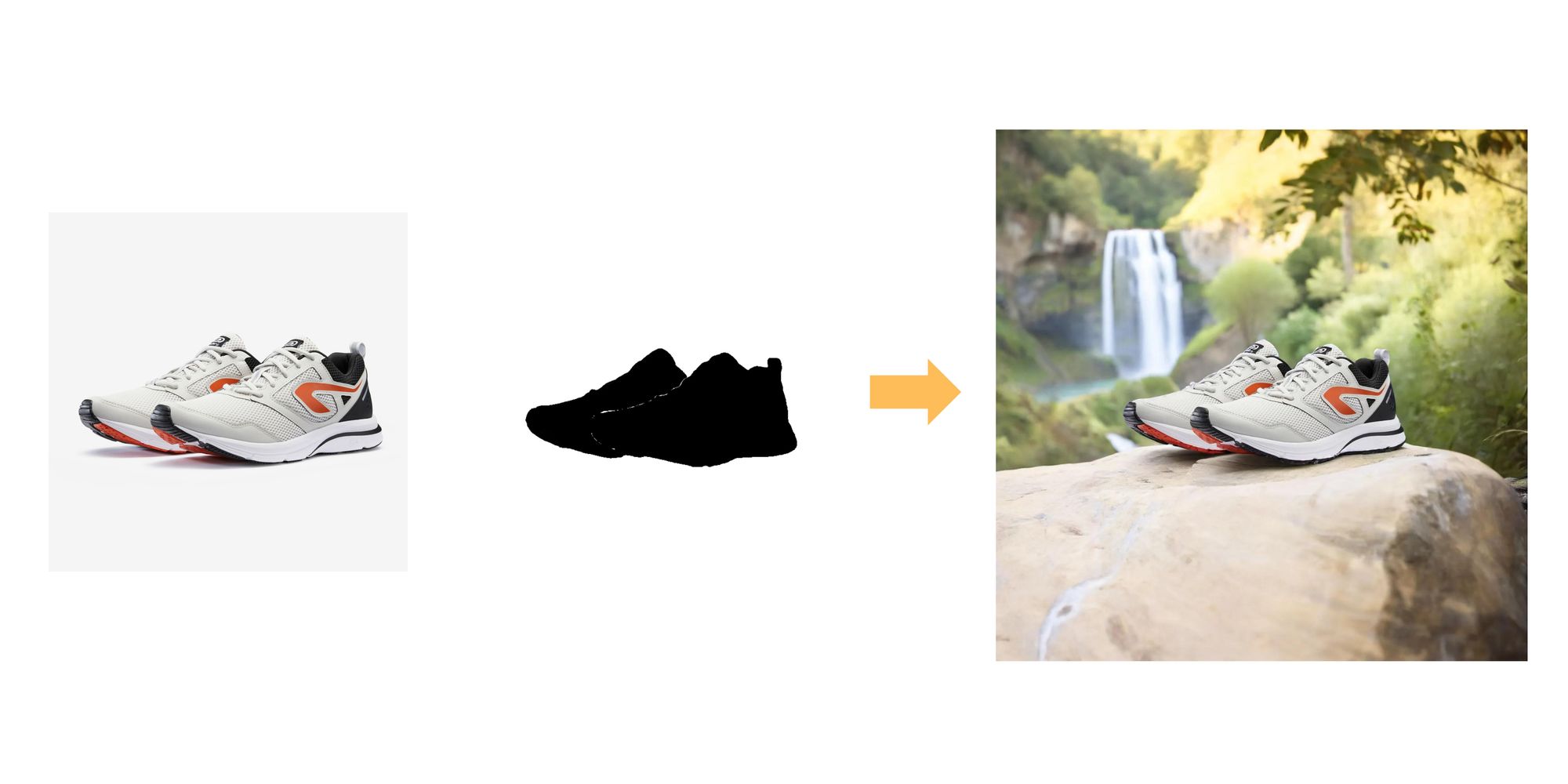

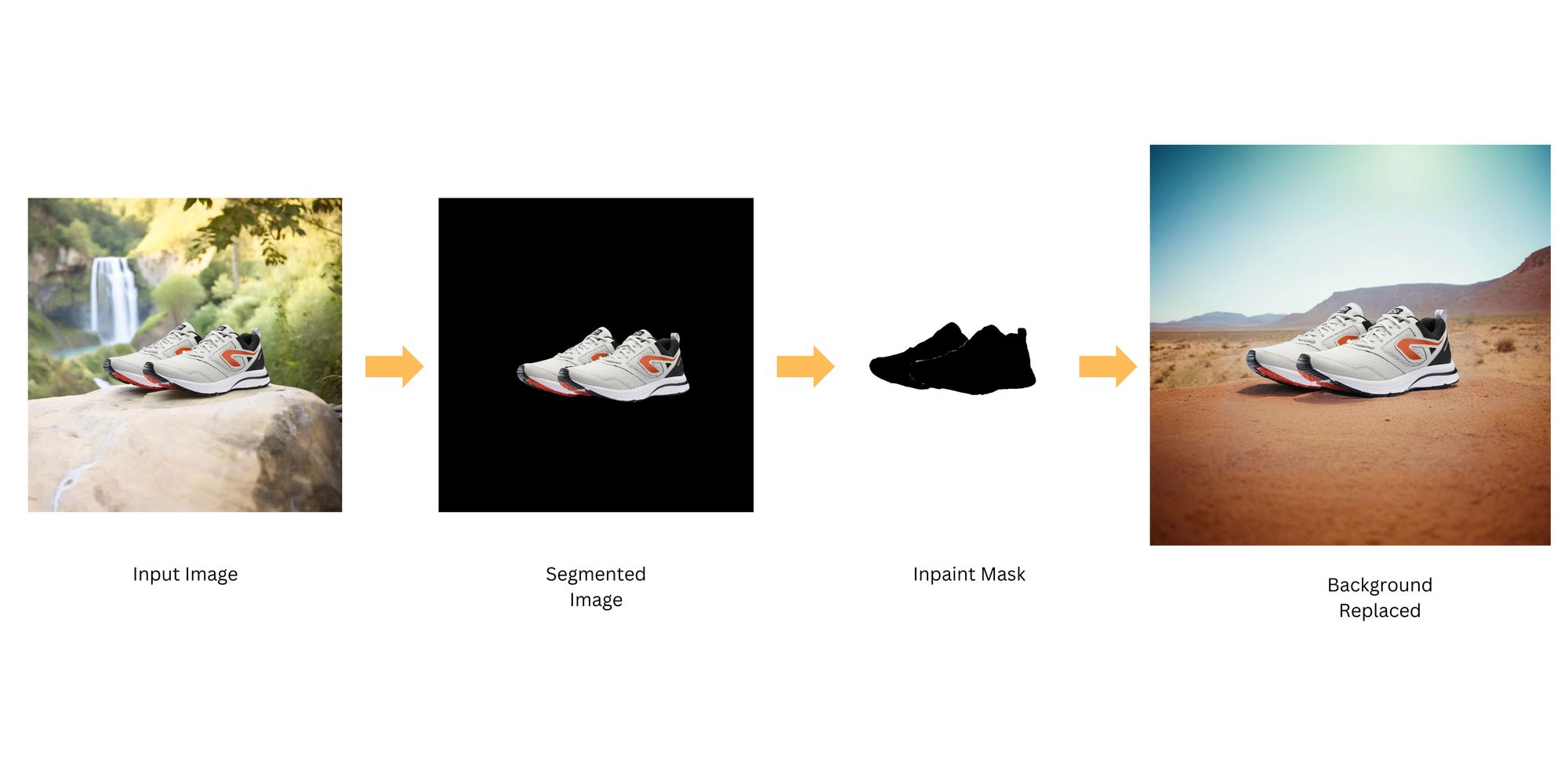

Using Segmentation (SAM) with Inpainting for Background Replacement

By using segmentation models like SAM (Segment Anything Model), you can create more precise and effective inpaint masks, leading to better inpainting results. SAM can be used to separate the object of interest from the rest of the image. Once the object is segmented, it can be used to create an inpaint mask. This mask can then be used in the Stable Diffusion process to preserve the object while inpainting the rest of the image.

- Segment the Object: Use SAM to segment the object of interest from the image. This produces a binary mask in which the object of interest is marked in white and everything else is black.

- Create the Inpaint Mask: Convert the segmented mask into an inpaint mask. If you want to preserve the object and inpaint the background, use the segmented mask as an invert mask (or invert it yourself so that the background is white). If you want to inpaint the object and preserve the background, use the segmented mask as a regular inpaint mask.

- Apply the Inpaint Mask: Pass the mask to Stable Diffusion Inpainting along with your prompt. The areas covered by the mask are preserved (with an invert mask) or regenerated (with a regular mask).
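The segment-and-convert steps above can be sketched as follows. This assumes the segmentation step yields several candidate masks as 2-D boolean grids (SAM's automatic mask generator, for example, returns boolean `segmentation` arrays), represented here as nested lists. Choosing the largest candidate that contains a clicked point is a simple heuristic of our own, not part of SAM, and both function names are hypothetical.

```python
def pick_segment(candidates, point):
    """Pick the candidate mask containing `point`, preferring the largest one.

    candidates: list of 2-D boolean grids (True = pixel belongs to the segment).
    point: (x, y) pixel coordinate on the object of interest, e.g. a user click.
    """
    x, y = point
    hits = [m for m in candidates if m[y][x]]
    if not hits:
        raise ValueError("no segment contains the given point")
    # Assumed heuristic: a larger segment is more likely to cover the whole
    # object rather than just one part of it.
    return max(hits, key=lambda m: sum(row.count(True) for row in m))


def segment_to_inpaint_mask(segment, preserve_object=True):
    """Convert a boolean segment into a 0/255 inpaint mask (white = inpaint).

    preserve_object=True  -> object black, background white (replace background).
    preserve_object=False -> object white, background black (replace object).
    """
    object_value, other_value = (0, 255) if preserve_object else (255, 0)
    return [[object_value if inside else other_value for inside in row]
            for row in segment]


# Two hypothetical candidates: a small part of the object and the whole object.
part = [[False, True, False],
        [False, False, False]]
whole = [[False, True, True],
         [False, True, True]]
segment = pick_segment([part, whole], point=(1, 0))
print(segment_to_inpaint_mask(segment))  # [[255, 0, 0], [255, 0, 0]]
```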

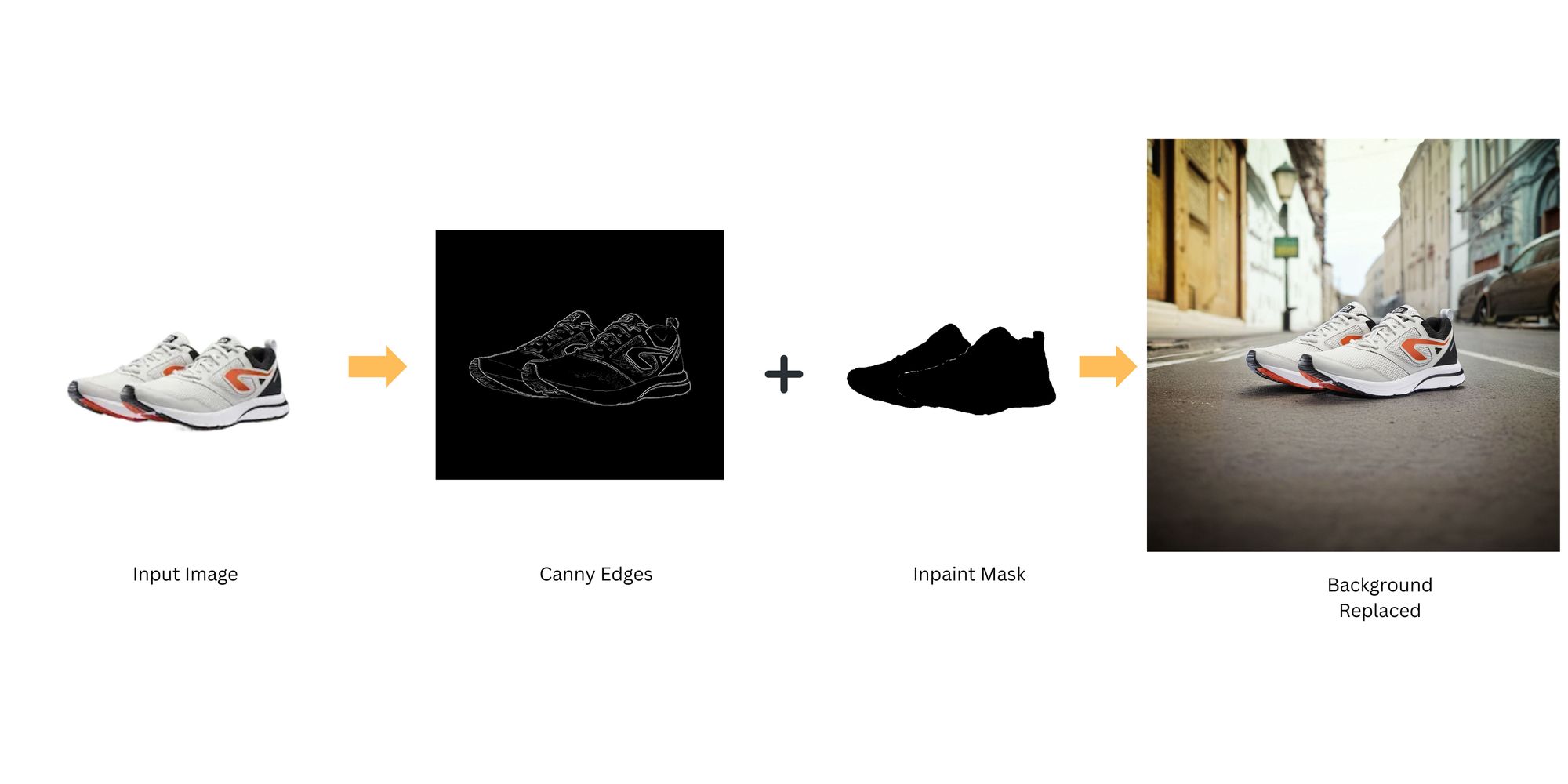

Combining Stable Diffusion Inpainting with ControlNet & IP Adapter

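Pairing Inpainting with ControlNet and IP Adapter gives you much more control over the output: ControlNet constrains the structure of the generated image, while IP Adapter steers its content and style with a reference image.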

ControlNets such as Depth or Canny condition the generation on a depth map or an edge map extracted from the source image. This preserves the subject's shape and composition and keeps a clear boundary between the subject and the background. It is particularly useful when you want to keep the structure of the subject while changing its appearance or its surroundings.
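To show what kind of structural signal an edge-based ControlNet receives, here is a simplified stand-in for the Canny detector: it thresholds the Sobel gradient magnitude of a grayscale grid, so outlines survive and flat regions go black. Real Canny adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding, and in practice you would typically use a library implementation such as OpenCV's `cv2.Canny`. The threshold value and the function name `edge_map` are assumptions for illustration.

```python
import math


def edge_map(gray, threshold=100):
    """Return a 0/255 edge map from a grayscale grid using Sobel gradients.

    Border pixels stay black because the 3x3 kernel does not fit there.
    """
    if not gray:
        return []
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal gradient: right column minus left column (Sobel weights 1, 2, 1).
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 255
    return edges


# A dark region on the left meeting a bright region on the right.
image = [[0, 0, 0, 255, 255]] * 3
print(edge_map(image))  # [[0, 0, 0, 0, 0], [0, 0, 255, 255, 0], [0, 0, 0, 0, 0]]
```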

IP Adapter, on the other hand, offers more flexibility by letting you use an image prompt alongside the text prompt to guide generation. You can supply an existing image as a reference and use the text prompt to describe the desired background. IP Adapter then guides the Stable Diffusion Inpainting model to generate a replacement background that matches the reference image.
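For intuition about how the image prompt and the text prompt are combined, the IP-Adapter paper describes a decoupled cross-attention: the UNet's query features attend separately to the text embeddings $c_t$ and to the image embeddings $c_i$ (through newly trained projections $W'_k, W'_v$), and the two results are summed with a weight $\lambda$. Roughly, in that paper's notation:

```latex
\begin{aligned}
Z^{\text{new}} &= \operatorname{softmax}\!\left(\frac{Q K_t^{\top}}{\sqrt{d}}\right) V_t
  + \lambda \,\operatorname{softmax}\!\left(\frac{Q K_i^{\top}}{\sqrt{d}}\right) V_i,\\
Q &= Z W_q, \qquad K_t = c_t W_k, \qquad V_t = c_t W_v, \qquad K_i = c_i W'_k, \qquad V_i = c_i W'_v.
\end{aligned}
```

Setting $\lambda = 0$ reduces this to ordinary text-only cross-attention, while larger values make the output follow the reference image more closely. In Hugging Face diffusers, this weight corresponds to the scale set with `set_ip_adapter_scale`.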

*Disclaimer: All generated images featuring brand products used in this blog post are purely illustrative and have been created using AI technology for demonstration purposes only. Any brand names, visuals, logos, and trademarks are the property of their respective owners.*

Explore Stable Diffusion Inpainting on Segmind. Sign up and get 100 free inferences every day.