Creating an AI-Generated Product Catalogue: A Step-by-Step Guide to Building Your Own Pipeline

Picture this: you write a simple description of a product, and an AI springs into action, transforming those words into vibrant, lifelike images. This isn't a far-off fantasy but a reality unfolding in artificial intelligence (AI), a technology that is quickly becoming part of how many businesses operate.

In the ever-changing retail business, creating visual catalogs that showcase product variations beautifully and realistically is a big challenge. The shoe business, with its many design, color, and style options, is a good example.

Building a Product Catalogue:

In this blog post, we look at a way to generate high-quality images of shoes directly from text descriptions (prompts). These images can be used in online stores, digital catalogs, and ads.

We don't stop at an image of a single shoe; our aim is to create many different versions of each type of shoe. These versions can vary in color (like red, blue, or neon green), material (like leather, suede, or synthetic), style (like sporty, casual, or formal), and design details (like high-top or low-top, laces or velcro, and more). In this blog post, we'll narrow our focus to color variations.

Key Models for Our AI Image Generation Pipeline:

We will be using two state-of-the-art models for this AI pipeline.

- CyberRealistic is a specialized image generation model built on the Stable Diffusion 1.5 (SD 1.5) architecture. It was extensively fine-tuned on a large dataset of images made by other AI models, and the result is a model well suited to its specific job: producing realistic pictures.

- ControlNet Canny is a neural network that gives us more control over what Stable Diffusion models generate. It conditions generation on a Canny edge map extracted from a reference image, so the outlines in the result, such as the overall shape of the object and its position in the frame, follow the reference, while the text prompt still decides attributes like color and texture.
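To make the "Canny" part concrete: ControlNet Canny is conditioned on an edge map extracted from a reference image rather than on the raw pixels. Full Canny edge detection smooths the image, computes intensity gradients with Sobel filters, thins the edges with non-maximum suppression, and then applies hysteresis thresholding. The pure-Python sketch below implements only the gradient step plus a single threshold, on a tiny made-up image, so treat it as a simplified stand-in for Canny rather than the real algorithm; in practice the edge map is usually computed with a library routine such as OpenCV's `cv2.Canny`.

```python
import math

# Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def edge_map(gray, threshold):
    """Return a binary edge map (0 or 255) from a 2D list of grayscale values.

    Simplified stand-in for Canny: Sobel gradient magnitude plus one threshold,
    with no smoothing, non-maximum suppression, or hysteresis.
    Border pixels are left at 0.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    pixel = gray[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel
                    gy += SOBEL_Y[ky][kx] * pixel
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 255
    return edges


# Hypothetical 8x8 image: a bright square (the "shoe") on a dark background.
image = [[200 if 2 <= x <= 5 and 2 <= y <= 5 else 0 for x in range(8)] for y in range(8)]
for row in edge_map(image, threshold=100):
    print("".join("#" if v else "." for v in row))
# ........
# .######.
# .######.
# .##..##.
# .##..##.
# .######.
# .######.
# ........
```

The outline comes out two pixels thick because this sketch skips non-maximum suppression, which is exactly the thinning step real Canny adds. The flat interior of the square produces no edges, which is why ControlNet can preserve a shape while leaving its color free to change.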

Building The Workflow:

The generative AI pipeline for creating photorealistic shoe images from textual descriptions involves two main steps:

1. Generation of Base Shoe Image: The first step is to create a base image of the shoe using the CyberRealistic model. Here we generate a base image of an Adidas shoe, with a detailed set of requirements embedded in the request below.

{
  "prompt": "Photo of adidas shoes, side view, adidas logo, photorealistic, hyper quality, intricate detail, masterpiece, photorealistic, ultra realistic, maximum detail, foreground focus, instagram, 8k, volumetric light, cinematic, octane render, uplight, no blur, depth of field, dof, bokeh, 8k",
  "negative_prompt": "CyberRealistic_Negative",
  "samples": 1,
  "scheduler": "dpmpp_2m",
  "num_inference_steps": 40,
  "guidance_scale": 10,
  "img_height": 768,
  "img_width": 512,
  "seed": 852030512417
}

- prompt: This long, specific prompt covers several kinds of characteristics: the subject and viewpoint (Adidas shoes, side view), the brand name and logo, image quality attributes (hyper quality, intricate detail, masterpiece, ultra realistic, 8k), and lighting and focus conditions (volumetric light, cinematic, octane render, uplight, foreground focus, depth of field, bokeh). Note that "8k" acts as a style keyword; the actual output resolution is set by img_height and img_width.

- negative_prompt: This refers to any specific attributes or characteristics that you do not want to appear in the generated image. If you don't want the model to generate images with a certain feature, you can specify that in the negative prompt.

- samples: This is the number of output images you want the model to generate per prompt.

- scheduler: This parameter selects the scheduler (sampler) that controls how noise is removed across the denoising steps. Here it is "dpmpp_2m", which refers to the DPM++ 2M (second-order multistep) solver.

- num_inference_steps: The number of denoising steps the model takes to generate the output. Higher values can produce more refined images, but take longer and consume more computational resources.

- guidance_scale: Controls the strength of classifier-free guidance, that is, how strongly the output is pushed toward the text prompt. Higher values make the image follow the prompt more closely, usually at the cost of variety; very high values can also make images look oversaturated or distorted.

- img_height and img_width: These parameters define the resolution of the output image. In this case, the output images will be 768 pixels in height and 512 pixels in width.

- seed: This number initializes the random number generator that produces the model's starting noise. Running the model again with the same seed, inputs, and parameters (on the same software and hardware setup) reproduces the same image, which makes the seed a useful tool for reproducibility.
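The parameters above map fairly directly onto the open-source Hugging Face `diffusers` library, one common way to run an SD 1.5 checkpoint such as CyberRealistic yourself. The sketch below uses only the standard library. The helper names (`to_diffusers_kwargs`, `latent_shape`, `cfg_combine`) are hypothetical, and mapping `"dpmpp_2m"` to `DPMSolverMultistepScheduler` is our assumption about what the hosted service means by that name. The sketch also makes two parameters concrete: the seed fixes a 4-channel noise tensor that is 8 times smaller than the image on each side, and `guidance_scale` is the weight s in the classifier-free guidance update eps = eps_uncond + s * (eps_cond - eps_uncond).

```python
# Hypothetical helpers: our own names, not part of diffusers or Segmind.
# Assumption: "dpmpp_2m" maps to diffusers' DPMSolverMultistepScheduler.
SCHEDULERS = {"dpmpp_2m": "DPMSolverMultistepScheduler"}

VAE_SCALE = 8        # SD 1.5 latents are 8x smaller than the image per side
LATENT_CHANNELS = 4  # SD 1.5 latents have 4 channels


def latent_shape(img_height, img_width):
    """Shape (channels, height, width) of the starting noise the seed generates."""
    if img_height % VAE_SCALE or img_width % VAE_SCALE:
        raise ValueError("img_height and img_width must be multiples of 8")
    return (LATENT_CHANNELS, img_height // VAE_SCALE, img_width // VAE_SCALE)


def cfg_combine(noise_uncond, noise_cond, guidance_scale):
    """Classifier-free guidance: move the noise prediction toward the prompt.

    guidance_scale = 1 returns the prompt-conditioned prediction unchanged;
    larger values extrapolate further away from the unconditional one.
    """
    return [u + guidance_scale * (c - u) for u, c in zip(noise_uncond, noise_cond)]


def to_diffusers_kwargs(payload):
    """Map the request payload to StableDiffusionPipeline.__call__ keyword args."""
    latent_shape(payload["img_height"], payload["img_width"])  # size check only
    kwargs = {
        "prompt": payload["prompt"],
        "negative_prompt": payload["negative_prompt"],
        "num_images_per_prompt": payload["samples"],
        "num_inference_steps": payload["num_inference_steps"],
        "guidance_scale": payload["guidance_scale"],
        "height": payload["img_height"],
        "width": payload["img_width"],
    }
    return kwargs, SCHEDULERS[payload["scheduler"]], payload["seed"]


payload = {
    "prompt": "Photo of adidas shoes, side view, adidas logo, photorealistic",  # shortened
    "negative_prompt": "CyberRealistic_Negative",
    "samples": 1,
    "scheduler": "dpmpp_2m",
    "num_inference_steps": 40,
    "guidance_scale": 10,
    "img_height": 768,
    "img_width": 512,
    "seed": 852030512417,
}

kwargs, scheduler_name, seed = to_diffusers_kwargs(payload)
print(scheduler_name)                           # DPMSolverMultistepScheduler
print(latent_shape(768, 512))                   # (4, 96, 64)
print(cfg_combine([0.0, 1.0], [1.0, 1.0], 10))  # [10.0, 1.0]
```

With `diffusers` and `torch` installed, these kwargs could be passed to a loaded pipeline as `pipe(**kwargs, generator=torch.Generator("cuda").manual_seed(seed))`; the `generator` argument is how `diffusers` receives a seed.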

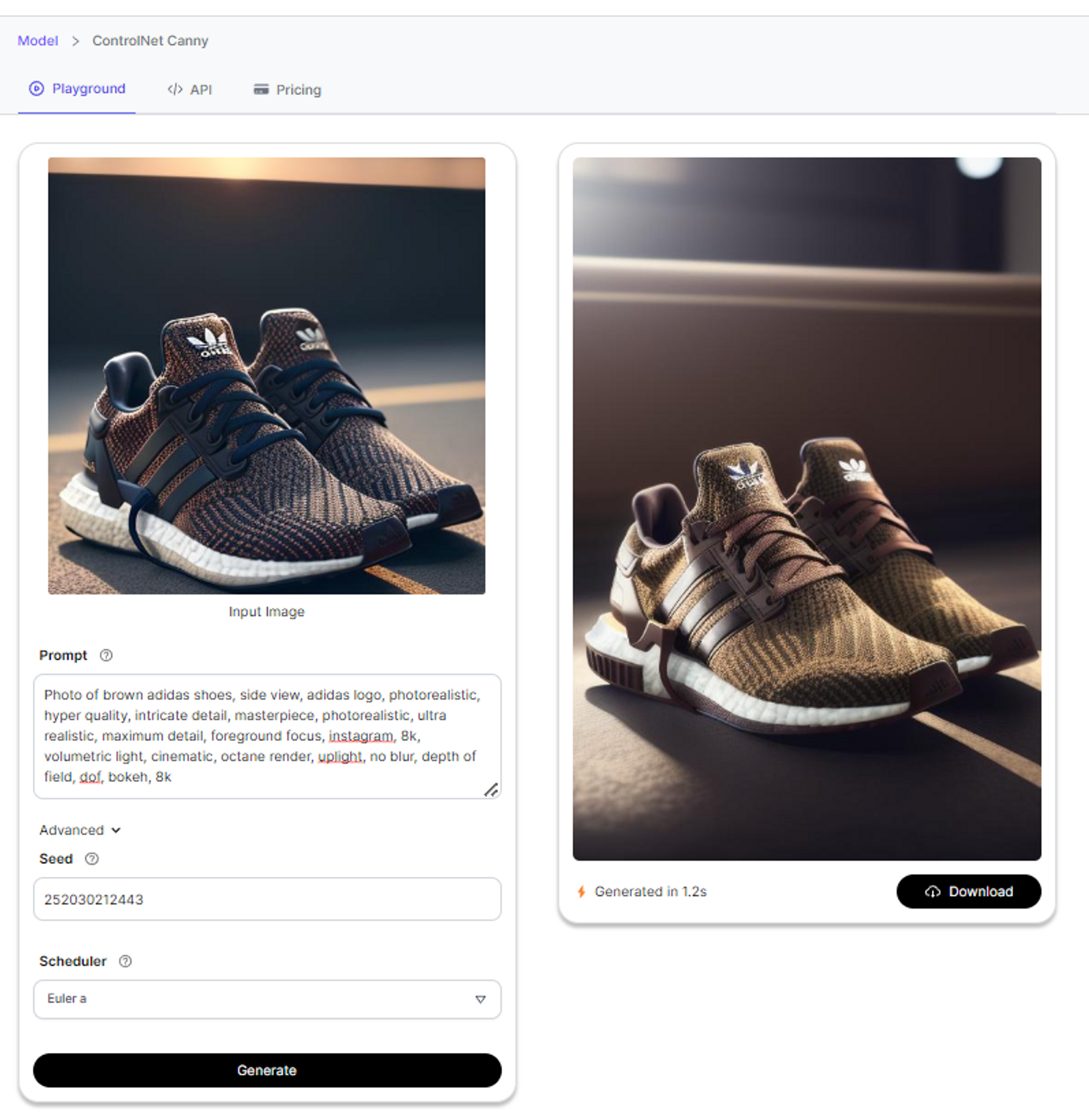

2. Creation of Color Variations: Once the base image of the shoe is generated, the next step is to create color variations of the same shoe design using the ControlNet Canny model. It extracts a Canny edge map from the base image and uses it to keep the shoe's outline and position fixed, while the text prompt specifies the new color. This way, we can produce multiple color variants of the same shoe design.

By giving the ControlNet Canny model a prompt for each color, we can generate a range of color variations of our base shoe image. For instance, we could specify colors like 'brown', 'pastel' or 'yellow', and the model will generate new images of the shoe in those colors.
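Generating the color variants is mostly a matter of looping over colors. The sketch below builds one hypothetical request per color: the prompt changes, while the edge map of the base image and the seed stay fixed. The prompt template, the `control_image` field, and the file name `base_edges.png` are all made up for illustration; they are not Segmind's actual API.

```python
# Hypothetical prompt template: "{color}" is filled in per variant.
BASE_PROMPT = (
    "Photo of {color} adidas shoes, side view, adidas logo, photorealistic, "
    "ultra realistic, maximum detail, foreground focus"
)


def color_variant_requests(colors, control_image, seed):
    """Build one request per color; only the prompt differs between them."""
    requests = []
    for color in colors:
        requests.append({
            "prompt": BASE_PROMPT.format(color=color),
            # Hypothetical field: the Canny edge map of the base shoe, shared by
            # all variants so the outline stays the same.
            "control_image": control_image,
            # Reusing one seed tends to keep lighting and background similar.
            "seed": seed,
        })
    return requests


for request in color_variant_requests(["brown", "pastel", "yellow"], "base_edges.png", 852030512417):
    print(request["prompt"])
```

With `diffusers`, the closest local equivalent is `StableDiffusionControlNetPipeline` loaded with a Canny ControlNet checkpoint, where the edge map is passed as the `image` argument alongside each prompt.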

In essence, the pipeline combines the strengths of two AI models to generate photorealistic images of shoes along with multiple color variations.

Expanding the Scope:

While our discussion in this blog is centered around generating color variations of shoes using our AI pipeline, the applications of the models and techniques discussed extend far beyond just color. Here are some other exciting possibilities for you to explore:

- Material Variations: Just like with color, you can experiment with generating images of shoes made from different materials. The same shoe design could be visualized in leather, suede, canvas, or any other material you can think of.

- Style Variations: By modifying the textual description input, you can generate images of the same shoe in a variety of styles such as athletic, casual, formal, or anything in between.

- Design Details: Features like high-top or low-top cuts, laces or velcro, and more can significantly alter the look and feel of a shoe.
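Because each of these dimensions is just another slot in the prompt, the variations combine naturally. Here is a minimal sketch using `itertools.product` from Python's standard library; the attribute values come from this post, while the template wording is our own assumption.

```python
from itertools import product

COLORS = ["red", "blue", "neon green"]
MATERIALS = ["leather", "suede", "canvas"]
STYLES = ["sporty", "casual", "formal"]
CUTS = ["high-top", "low-top"]

# Hypothetical prompt template combining all four attributes.
TEMPLATE = "Photo of {style} {cut} {color} {material} shoes, side view, photorealistic"


def variant_prompts():
    """Yield one prompt per combination of color, material, style and cut."""
    for color, material, style, cut in product(COLORS, MATERIALS, STYLES, CUTS):
        yield TEMPLATE.format(color=color, material=material, style=style, cut=cut)


prompts = list(variant_prompts())
print(len(prompts))  # 54
print(prompts[0])    # Photo of sporty high-top red leather shoes, side view, photorealistic
```

The count grows multiplicatively (3 x 3 x 3 x 2 = 54 prompts here), so you may want to sample a subset rather than render every combination. Changes that alter the silhouette, such as high-top versus low-top, conflict with a fixed edge map of the base shoe, so those variants would likely need their own base image instead of reusing the same Canny map.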

Visit Segmind today and sign up for a free account to create your own product images and experiment with variations.

*Disclaimer: All generated images featuring brand products used in this blog post are purely illustrative and have been created using AI technology for demonstration purposes only. Any brand names, visuals, logos, and trademarks are the property of their respective owners.