Understanding Compute Requirements for LLMs and Diffusion Models

This blog post explores the key differences in compute requirements between Large Language Models (LLMs) and diffusion models. It discusses the trade-off between model complexity and resource requirements, and what that trade-off means for real-time performance and interactive applications.

Large Language Models (LLMs) and diffusion models are two prominent types of deep learning models, each with distinct characteristics and applications. LLMs are best known for their applications in the text modality, while diffusion models are best known for image and video generation. At a technical level, LLMs have high parameter counts, which enable them to learn complex patterns but also demand substantial computational power and VRAM, making them suited to enterprise settings and cloud-based infrastructure. In contrast, diffusion models have lower parameter counts and require less computational power and VRAM, which makes them accessible on consumer-grade hardware. This accessibility makes diffusion models a good fit for a wide range of creative applications, particularly those requiring real-time performance and low latency. The predictability of compute needs in LLMs contrasts with the variability found in diffusion models, reflecting their differing use cases and operational complexities. Let's look at the technical differences between these models in detail.

Parameter Count

LLMs are typically characterized by a high parameter count. The parameters are the learned weights of the network (loosely, the strengths of the connections between its "neurons"). They are adjusted during training to capture patterns in the data, and they determine the behavior of the model. In general, the more parameters a model has, the more complex the patterns it can learn.

LLM parameter counts typically range from 7 billion to 300-400 billion, although we are now seeing the emergence of small language models (SLMs) with fewer than 3 billion parameters. A high parameter count translates into greater computational power and VRAM requirements for training. Furthermore, once the model is trained, running inference also requires a substantial amount of VRAM, because all of the parameters need to be loaded into memory.
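To make the link between parameter count and VRAM concrete, here is a minimal back-of-the-envelope sketch. It assumes the weights are stored at 16-bit precision (2 bytes per parameter) and counts only the weights, not activations or any other runtime overhead. The function name `weight_memory_gb` and the model labels are illustrative, not taken from any library.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory needed just to hold the weights, in GB (10**9 bytes).

    Assumption: every parameter is stored at the same precision
    (2 bytes per parameter corresponds to 16-bit floats).
    """
    return num_params * bytes_per_param / 1e9


# Illustrative parameter counts drawn from the ranges discussed above.
models = [
    ("3B small language model", 3e9),
    ("7B LLM", 7e9),
    ("70B LLM", 70e9),
    ("2.6B diffusion model", 2.6e9),
]

for name, params in models:
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights at 16-bit")
```

Real memory use is higher than this estimate, since inference also needs room for activations and intermediate buffers, so treat the result as a lower bound.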

Diffusion models typically have lower parameter counts than LLMs. SDXL, the largest open-source diffusion model, has 2.6 billion parameters and is already the state-of-the-art (SOTA) open-source model. Because of their lower parameter counts, diffusion models require less computational power and VRAM to train and use. This lower resource requirement makes them accessible on a wide range of consumer hardware, such as NVIDIA RTX 3060, 3090, or 4090 GPUs. Creatives and enthusiasts can typically run these SOTA imaging models on their local GPUs, whereas LLMs require enterprise-grade GPUs like A100s or H100s.

VRAM Requirements

LLMs such as Llama, a state-of-the-art open-source model with 70 billion parameters, require significant computational resources and memory. These models often exceed the capabilities of local or consumer-grade GPUs. Running them typically requires more than 50 GB of VRAM; at 16-bit precision, the weights of a 70-billion-parameter model alone occupy roughly 140 GB. While there are versions of these models that can be quantized and run on lower-end hardware, this often results in a degradation of quality and slower processing times. This is because quantization reduces the precision of the model’s parameters, which can affect the model’s performance.
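The precision loss from quantization can be illustrated with a small sketch. It uses symmetric per-tensor int8 quantization, which is one simple scheme chosen here for illustration; production quantizers for LLMs use more elaborate methods. The helper names and the sample weight values are made up for the example.

```python
def quantize_int8(values):
    """Symmetric per-tensor int8 quantization: returns (integers, scale).

    Each value is mapped to an integer in [-127, 127]; `scale` converts back.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to show the rounding effect.
weights = [0.0213, -0.4071, 0.1189, 0.9999, -0.0004]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", quantized)
print("restored:   ", [round(r, 4) for r in restored])
print("max absolute error:", max_error)
```

Each weight now takes 1 byte instead of 2 or 4, but small values such as `-0.0004` collapse to zero. Errors of this kind, accumulated over billions of parameters, are one way quantization can degrade output quality.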

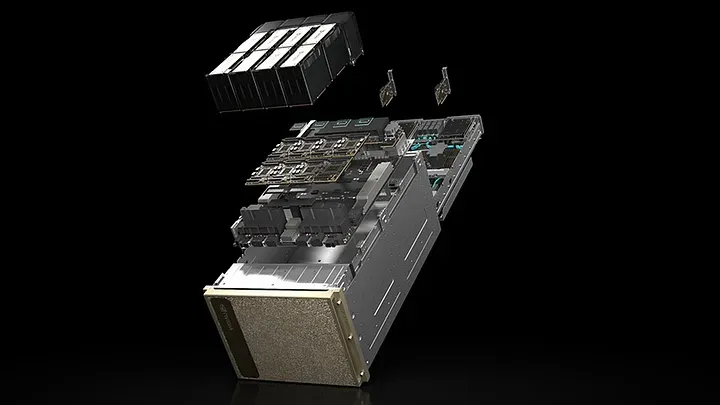

LLMs are generally used in enterprise settings due to their high resource requirements. These models are often run on cloud-based infrastructure, which provides two main advantages. First, cloud-based infrastructure allows for greater scalability: as the computational needs of the model increase, additional resources can be easily allocated in the cloud. Second, it provides access to the high-performance enterprise GPU hardware needed to run these resource-intensive models.

Diffusion models, such as the state-of-the-art model SDXL, have fewer parameters and thus lower hardware requirements. SDXL, for example, has 2.6 billion parameters and can fit on a consumer-grade GPU with 12-16 GB of VRAM. Earlier SOTA models like SD1.5 can run on GPUs with less than 6 GB of VRAM. This makes these models more accessible to enthusiasts and developers with consumer-grade hardware, and diffusion models are often run on local machines without the need for high-end cloud infrastructure. Their lower resource requirements make them ideal for a variety of creative applications that can be developed locally.

Predictability of Latency & Compute Needs

Although LLMs are very compute intensive, their latencies and compute requirements are predictable. LLMs take text as input and produce text as output, and both are processed as units called tokens. Each model has a maximum number of tokens it can take as input, known as the context window. The length of the context window determines how much VRAM is needed on top of the model weights. Context windows typically range from 16k to 128k tokens, and we're now seeing models with larger context windows of up to 2M tokens. Although larger context windows take more VRAM, output generation time does not scale linearly with the window size, and latencies remain very predictable.
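One reason context length drives VRAM use is the key-value (KV) cache that transformer-based LLMs keep for every token in the context. The sketch below estimates its size. The layer count, number of KV heads, and head dimension are illustrative assumptions in the range of a 70B-class model with grouped-query attention, not figures from this post, and `kv_cache_gb` is a made-up helper name.

```python
def kv_cache_gb(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV-cache size in GB (10**9 bytes) for one sequence.

    The factor 2 accounts for storing one key and one value vector
    per token, per layer, per KV head. bytes_per_value=2 assumes 16-bit values.
    """
    total_bytes = 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value
    return total_bytes / 1e9


# Assumed architecture values, for illustration only.
config = dict(num_layers=80, num_kv_heads=8, head_dim=128)

for tokens in (16_000, 128_000):
    size = kv_cache_gb(tokens, **config)
    print(f"{tokens:>7} tokens -> ~{size:.1f} GB of KV cache")
```

Because the cache grows in direct proportion to the number of tokens, memory needs for a given model and context length can be computed ahead of time, which is part of what makes LLM resource planning predictable.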

Diffusion models, on the other hand, come in more varieties and classes, leading to a wide range of latencies and compute requirements. Compute and latency are directly correlated with input size and model complexity. For example, a diffusion model used for simple text-to-image generation will have different resource requirements from a model that takes additional control inputs, such as depth or pose. Furthermore, the size and complexity of the input and output also affect latency and compute requirements. For instance, generating a high-resolution image requires more resources and takes longer than generating a low-resolution image. Some models take multiple image inputs, which increases the generation time linearly as well. This variability makes it more challenging to estimate resource requirements and performance for diffusion models. Doing so requires a more in-depth understanding of the specific model and use case, and may require empirical testing to get accurate estimates.
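Since accurate estimates often come down to empirical testing, a small timing harness is a reasonable starting point. The sketch below times any callable over several runs and reports the median. The `fake_generate` function is a hypothetical stand-in for a real diffusion pipeline call; its workload (proportional to steps and image area) is an assumption made only so the example runs without a GPU.

```python
import statistics
import time


def benchmark(fn, warmup=2, runs=10):
    """Time `fn` over several runs and return summary statistics in seconds.

    Warm-up runs are discarded so one-time setup costs do not skew the result.
    """
    for _ in range(warmup):
        fn()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(timings),
        "min_s": min(timings),
        "max_s": max(timings),
        "runs": runs,
    }


def fake_generate(width=512, height=512, steps=20):
    """Hypothetical stand-in for an image generation call.

    Assumption: work grows with the number of steps and the image area.
    """
    work_units = steps * (width // 64) * (height // 64)
    total = 0
    for _ in range(work_units):
        total += 1
    return total


small = benchmark(lambda: fake_generate(512, 512))
large = benchmark(lambda: fake_generate(1024, 1024))
print(f"512x512:   median {small['median_s'] * 1e3:.3f} ms")
print(f"1024x1024: median {large['median_s'] * 1e3:.3f} ms")
```

When timing a real model on a GPU, the work is usually launched asynchronously, so the device needs to be synchronized before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch). Otherwise the measured time can be misleadingly short.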

Real-Time Performance

LLMs are performant models that can produce fast outputs. However, they are not typically capable of real-time performance. Real-time performance refers to the ability of a system to process and respond to inputs almost instantly, within a few milliseconds. In the case of LLMs, this would mean generating output while the input is still being given, which is not a typical use case. The biggest use case for LLMs is question answering, where the question needs to be complete before the model starts outputting an answer.

Diffusion models, in contrast, are increasingly being optimized for real-time performance. This is a significant development, as it allows for interactive applications and more immediate feedback. For example, a diffusion model could be used in an image editing application to apply effects in real time as the user adjusts parameters. Applications like Krea AI have demonstrated this use case of real-time image editing. The ability to provide immediate feedback makes diffusion models particularly useful for interactive applications where the user’s input can change dynamically every few milliseconds.

In summary, Large Language Models (LLMs) and diffusion models are two prominent types of deep learning models with distinct characteristics. LLMs have high parameter counts and require substantial computational power and VRAM, making them suited to enterprise settings. Diffusion models have lower parameter counts and require less computational power and VRAM, making them accessible on consumer-grade hardware. LLMs are used for text, while diffusion models are used for images and video. Diffusion models are well suited to real-time applications, while LLMs are not typically capable of real-time performance. Finally, the predictability of compute needs in LLMs contrasts with the variability found in diffusion models.