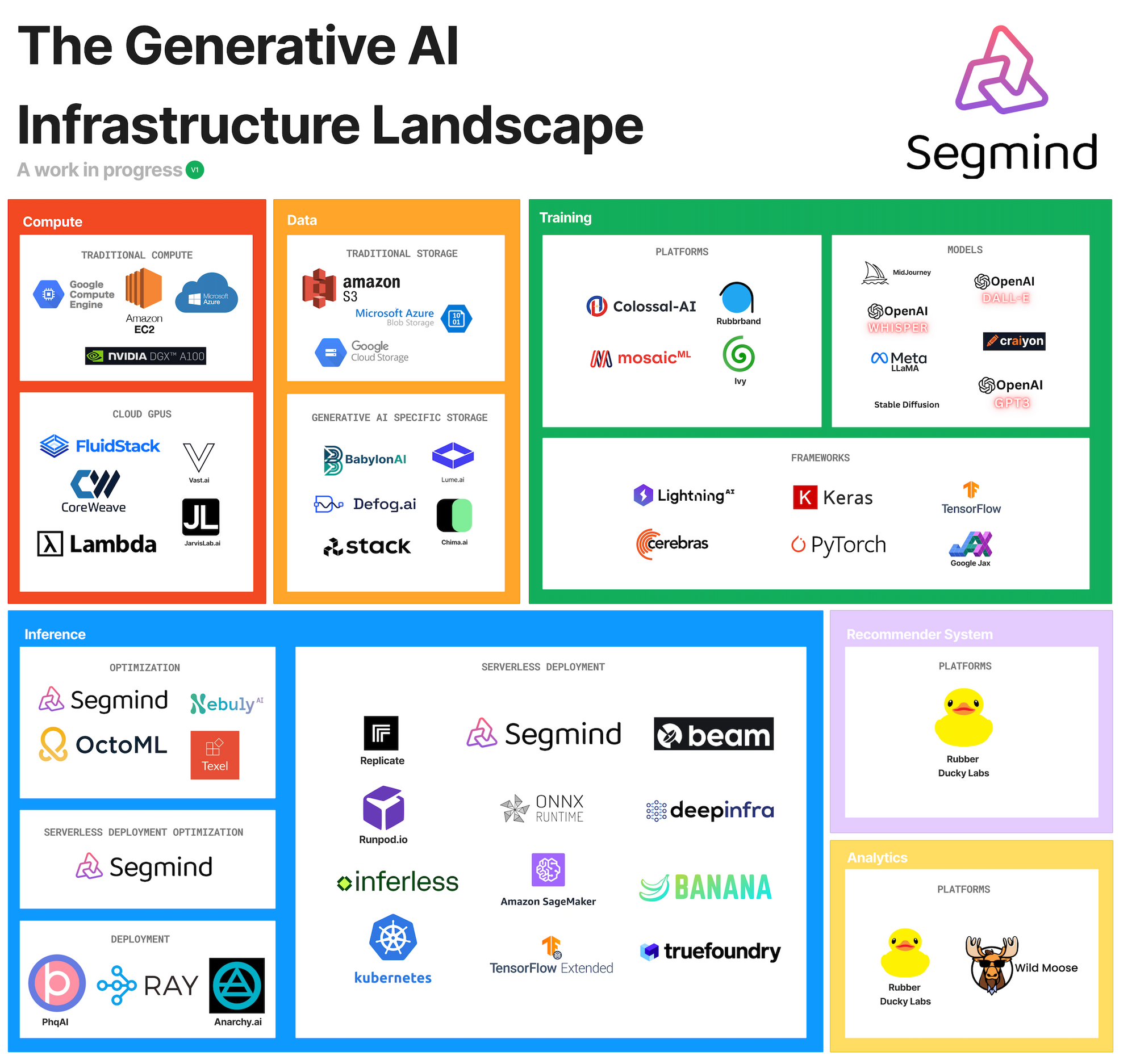

The Generative AI Infrastructure Landscape by Segmind

Dive into the Generative AI infrastructure landscape and explore which traditional tools have been customized to meet Generative AI's needs. Plus, get a look at the emerging startups building infrastructure.

Generative AI infrastructure is quietly powering the application companies that are building with Generative AI.

Like the picks and shovels sold to miners during the gold rush, Generative AI infrastructure enables application companies to innovate rapidly and unlock value from Generative AI use cases.

It pays to know which tools will help you and your enterprise create value with AI. That is why we have surveyed the entire Generative AI landscape to help you find the tools you need.

Mapping the Generative AI landscape

As we see it, the Generative AI landscape can be divided into six core areas: Compute, Data, Training, Inference, Recommender Systems, and Platforms. The landscape includes traditional tools that have been customized to meet the needs of Generative AI, as well as emerging startups building infrastructure specifically for Generative AI's requirements.

From our perspective at Segmind, we expect dozens of new startups to emerge in the Generative AI infrastructure landscape. They will be built for the specific requirements and complexities of Generative AI use cases that existing infrastructure companies either do not serve or do not serve well.

At the same time, some of the companies already focused on Generative AI infrastructure will see rapid adoption and success because they are first or near first to market, with the right timing to address the pain points Generative AI teams are experiencing today.

How Generative AI infrastructure will evolve

Generative AI infrastructure parallels the now-mature MLOps space, which over the past eight years saw startups grow rapidly into multi-billion-dollar scale-ups such as Scale.ai, Weights & Biases, and Dataiku. When MLOps began, only a handful of companies existed, and the space as a whole generated minuscule revenue. Today, most existing and new Generative AI infrastructure startups are likely under $10m in revenue, apart from a few outliers, but that will not last long. By addressing the specific needs of Generative AI application companies across the six core areas in our landscape, some of the startups identified here will experience explosive growth.

Segmind is seeing Generative AI startups choose to buy rather than build their capabilities across all six categories of the Generative AI infrastructure landscape. We also see that much of the growth in Generative AI use cases is still ahead of us, which indicates that the adoption of Generative AI infrastructure is still in its early stages.

Descriptions of the highlighted Generative AI infrastructure companies

Compute

Traditional Compute

- Google Compute Engine: Configurable, high-performance virtual machines running in Google's data centers.

- Amazon EC2: The broadest and deepest compute platform, with over 500 instances and a choice of the latest processors, storage, networking, operating systems, and purchase models to help you best match the needs of your workload.

- Microsoft Azure: An open and flexible cloud computing platform that helps you invent with purpose, realize cost savings, and make your organization more efficient.

- NVIDIA DGX: NVIDIA DGX systems are leading solutions for enterprise AI development at scale, designed around the demands of AI.

Cloud GPUs

- FluidStack.io: The Airbnb of GPU compute, offering data center capacity at 5x better prices.

- CoreWeave: A specialized cloud provider delivering GPUs at massive scale on top of the industry's fastest and most flexible infrastructure.

- Lambda Labs: A GPU cloud built for deep learning, with instant access to some of the lowest cloud GPU prices on the market. Save over 73% compared with AWS, Azure, and GCP. Configured for deep learning with PyTorch®, TensorFlow, and Jupyter.

- Vast.ai: The market leader in low-cost cloud GPU rental. Use one simple interface to save 5-6X on GPU compute.

- Jarvislabs.ai: Rent GPUs, and train and deploy AI, ML, and DL models in a few clicks. Trusted by over 10,000 ML practitioners.

Data

Traditional Storage

- Amazon Simple Storage Service (Amazon S3): An object storage service offering industry-leading scalability, data availability, security, and performance.

- Microsoft Azure Blob Storage: Helps you create data lakes for your analytics needs and provides storage for building powerful cloud-native and mobile apps.

- Google Cloud Storage: Store data with multiple redundancy options, virtually anywhere.

Generative AI-Specific Storage

- Lume.ai: A no-code tool to generate and maintain custom data integrations. Lume uses AI to automatically transform data between any start and end schema, and pipes the data directly to your desired destination.

- Defog.ai: ChatGPT for your company's data. Let your users ask free-form data questions through large language models embedded in your app.

- Chima.ai: Companies struggle to customize their generative AI models with their existing customer and enterprise data in real time. Chima solves this with a sleek, interoperable layer applied before the standard generative AI models.

- Stack.ai: AI-powered automation for the enterprise. Fine-tune and compose large language models to automate your business processes.

Training

- Colossal.ai: Unmatched speed and scale. Colossal-AI uses distributed techniques to maximize the runtime performance of large neural networks.

- Mosaic.ml: The MosaicML platform enables you to easily train large AI models on your data, in your secure environment.

- Rubbrband: ML training in one line of code. Rubbrband is a CLI that enables training of the latest ML models with a single line of code.

- Ivy: Unified machine learning. Unify all ML frameworks; install with pip install ivy-core.

Frameworks

- PyTorch Lightning: The deep learning framework for professional AI researchers and machine learning engineers who need maximal flexibility without sacrificing performance at scale. Lightning evolves with you as your projects go from idea to paper or production.

- Cerebras: The fastest AI accelerator, built on the largest processor in the industry and designed to be easy to use. Cerebras offers blazing-fast training, ultra-low-latency inference, and record-breaking time-to-solution to help you achieve your most ambitious AI goals.

- Keras: Consistent, simple APIs that minimize the number of user actions required for common use cases, with clear and actionable error messages.

- PyTorch: An open-source machine learning framework that accelerates the path from research prototyping to production deployment.

- TensorFlow: An open-source software library for machine learning and artificial intelligence. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks.

- Google JAX: A machine learning framework for transforming numerical functions. It brings together a modified version of Autograd and TensorFlow's XLA.

Inference

Optimization

- Segmind: Serverless optimization platform for Generative AI. The first serverless optimization platform to increase Generative AI inference speed by up to 5x.

- Nebuly.ai: Optimize AI compute cost and performance in one place.

- OctoML: Optimize and package your trained model in minutes so you can deploy it to any hardware target for faster, more cost-efficient inference.

- Texel.ai: Accelerate your cloud GPU workloads by optimizing scaling, deployment, and latency. Run a generative AI model or encode a video up to 10x faster than standard solutions, while cutting costs by up to 90% and reducing engineering risk.

Serverless Deployment

- Replicate: Run machine learning models with a few lines of code through Replicate's Python library, without needing to understand how machine learning works.

- Segmind: Serverless optimization platform for Generative AI, increasing inference speed by up to 5x.

- Beam Cloud: Develop on remote GPUs, train machine learning models, and rapidly prototype AI applications without managing any infrastructure.

- Runpod.ai: A GPU cloud with scalable infrastructure built for production. Rent cloud GPUs from $0.2/hour.

- ONNX Runtime: Speed up the machine learning process with built-in optimizations that deliver up to 17X faster inferencing and up to 1.4X faster training.

- Deep Infra: Run the top AI models through a simple, pay-per-use API, on low-cost, scalable, production-ready infrastructure.

- Inferless: Serverless GPUs for ML models, the fastest way to deploy production-ready ML without the hassle of managing servers or paying DevOps costs.

- Amazon SageMaker: Machine learning for every developer and data scientist. Get machine learning models into production quickly with Amazon SageMaker.

- Banana.Dev: Scale your machine learning inference and training on serverless GPUs.

- Kubernetes: Also known as K8s, an open-source system for automating the deployment, scaling, and management of containerized applications.

- TensorFlow Extended (TFX): Build and manage end-to-end production ML pipelines. TFX components enable scalable, high-performance data processing, model training, and deployment.

- TrueFoundry: The fastest framework for post-model pipelines. Get instant, monitored endpoints for your models in 15 minutes, following DevOps best practices.

Serverless Deployment Optimization

- Segmind: Serverless optimization platform for Generative AI, increasing inference speed by up to 5x.

Deployment

- PYQ: An easy and affordable way to integrate machine learning into your application. PYQ helps developers skip the cloud infrastructure setup and get straight to the most important part: leveraging ML in their apps.

- Ray.io: An open-source unified compute framework that makes it easy to scale AI and Python workloads, from reinforcement learning to deep learning to tuning and model serving, with a rich set of libraries and integrations.

- Anarchy.ai: AGIs for your APIs. Infrastructure for customizing LLMs with agency.

Recommender Systems

- Rubber Ducky Labs: Better recommender systems with machine learning plus human expertise.

Analytics

- Rubber Ducky Labs: Better recommender systems with machine learning plus human expertise.

- Wild Moose: Helps on-call developers identify the source of production incidents more quickly by providing a conversational AI trained on their environment. The LLM-based AI is tailor-made to accurately answer questions about production information such as logs and metrics.

Thank you for reading! We will provide updates on the Generative AI infrastructure landscape as the space evolves. Follow us for more updates on Generative AI!