Stable Diffusion with Zero Shot Learning for Image Transformation

Explore the power of Stable Diffusion in image generation and transformation. Learn how it combines with zero-shot learning to modify images based on textual prompts, without the need for specific task training.

Stable Diffusion is a powerful image generation model, and combined with zero-shot learning it can handle a range of interesting image transformation tasks. Generation starts from noise, which the model iteratively refines into an image that aligns with the text prompt. For a transformation, you provide an existing image together with a text prompt describing the desired change, and Stable Diffusion uses both to steer generation towards that outcome. This lets you modify aspects of an image such as style, color, material, or even composition. The best part is that the approach is zero-shot: the model does not need to be retrained for each specific transformation.
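To make the "start from an existing image" idea concrete, here is a minimal sketch of how an image-to-image pass might decide how much of the denoising schedule to run. The `strength` parameter and the rounding rule are assumptions modeled on common image-to-image implementations, not something this post specifies, and `img2img_schedule` is a hypothetical helper name.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple[int, int]:
    """Split a denoising schedule into (steps_skipped, steps_run).

    Assumed convention: strength = 0.0 leaves the input image untouched
    (no steps run), strength = 1.0 ignores it and runs the full schedule
    from pure noise.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    return num_inference_steps - steps_run, steps_run


for strength in (0.25, 0.5, 1.0):
    skipped, run = img2img_schedule(40, strength)
    print(f"strength={strength}: skip {skipped} steps, run {run}")
```

Under this convention, a low strength keeps the result close to the input image, while a high strength gives the prompt more room to change it.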

In this blog post, we explore a few examples of zero-shot learning for image generation with Stable Diffusion.

Material Transfer

Material Transfer performs zero-shot transfer of a material onto an object in an input image, given a material exemplar image. For example, given a subject image (e.g., a photo of a bird) and a single material example image (e.g., marble), the Material Transfer model can transfer the marble material from the material image onto the bird.

This process allows for the realistic transfer of material properties from one image to another, even when the two images have different structures and lighting conditions. It’s a powerful tool in image editing.

Under the hood of Material Transfer is a combination of IP Adapter, ControlNet Depth and Inpainting.

- IP Adapter: An image encoder that captures the unique characteristics of a material from a reference image. This image is known as the material image.

- ControlNet Depth: In this step, the input image, which is the image onto which the material properties will be transferred, is processed to understand its structure and lighting conditions. The structure is understood by estimating the depth of different parts of the image.

- Inpainting: The final step is where the extracted material properties are applied to the input image. This is done in a way that takes into account the structure and lighting conditions of the input image, resulting in a new image where the material properties from the material image have been realistically transferred.
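One way to picture the inpainting step is as a masked blend: newly generated pixels are kept only inside the object, and everything else comes from the input image. The sketch below is a simplified pixel-space illustration on tiny grayscale grids. The `composite` helper and the toy values are hypothetical, and a real inpainting model does considerably more than this blend.

```python
def composite(original, generated, mask):
    """Blend two same-sized grayscale images, row by row.

    mask values are in [0, 1]: 1 keeps the generated pixel (inside the
    object), 0 keeps the original pixel (background).
    """
    return [
        [round(m * g + (1 - m) * o) for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[10, 10, 10], [10, 10, 10]]           # input image
generated = [[200, 200, 200], [200, 200, 200]]    # material-textured output
mask = [[0, 1, 1], [0, 0.5, 1]]                   # object region, soft edge

result = composite(original, generated, mask)
print(result)
```

The soft mask value of 0.5 shows how an edge pixel ends up as a mix of both images rather than a hard cut.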

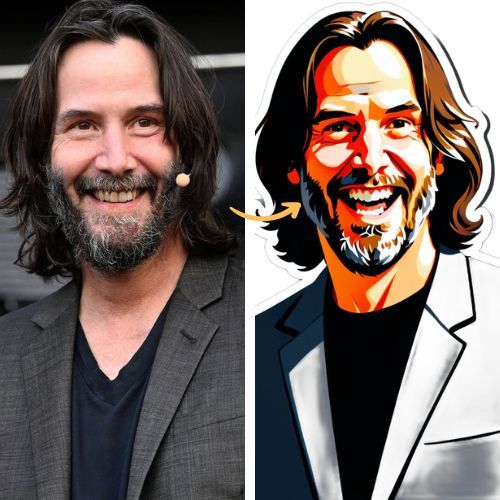

Face to Sticker

The Face to Sticker model takes an image of a person and creates a sticker image. It is based on style transfer: the sticker style is applied to the input image of the person. The output is a new image that looks like a sticker but retains the facial features of the person in the input image. This makes it possible to create personalized stickers from just about any image of a person.

Under the hood, the Face to Sticker model is a combination of Instant ID, IP Adapter, ControlNet Depth and Background Removal.

- Instant ID is responsible for identifying the unique features of the face of the person in the input image.

- An image encoder (IP Adapter) transfers the sticker style onto the face of the person in the input image.

- ControlNet Depth estimates the depth of different parts of the face. This helps in creating a 3D representation of the face, which can then be used to apply the sticker style in a way that looks natural and realistic.

- Background Removal removes the background, resulting in a clean sticker image.
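The background removal step can be thought of as attaching a transparency channel to the stylized image. The sketch below assumes a foreground mask is already available (in practice it would come from a segmentation or matting model). `apply_alpha` and the sample pixels are hypothetical names and values used only for illustration.

```python
def apply_alpha(rgb_pixels, foreground_mask):
    """Turn RGB pixels into RGBA: opaque on the subject, transparent elsewhere."""
    return [
        (r, g, b, 255 if keep else 0)
        for (r, g, b), keep in zip(rgb_pixels, foreground_mask)
    ]


pixels = [(255, 0, 0), (0, 255, 0), (0, 0, 255)]  # a 3-pixel "image"
mask = [True, False, True]                         # True = part of the person

sticker = apply_alpha(pixels, mask)
print(sticker)
```

Pixels marked as background keep their color values but get an alpha of 0, which is what produces the clean cut-out look when the image is saved in a format that supports transparency.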

Insta Depth

Insta Depth generates new images that closely resemble a specific person while allowing for different poses and angles. It does this using a single input image of the person, a text description of the desired variations, and a reference image. The key aspect of Insta Depth is composition transfer: the person's face from the input image is carried over into the pose given by the reference image. This ensures that the generated image maintains the unique identity of the person while allowing for variations in pose. The model is an add-on to, and an improvement on, the InstantID model.

Insta Depth is a combination of Instant ID and ControlNet Depth models. Here is how it works:

- ID Embedding in InstantID analyzes the input image to capture the person's unique facial features, like eye color, nose shape, etc. It focuses on these defining characteristics (semantic information) rather than the exact location of each feature on the face (spatial information).

- The Lightweight Adapted Module in InstantID acts like an adapter, allowing the system to use the reference image itself as a visual prompt for the image generation process. The reference image can be an image of any pose.

- IdentityNet in InstantID takes the information from the ID embedding (facial characteristics) and combines it with the text prompt to create a new image.

- ControlNet Depth enables composition transfer by understanding the depth of the input face image. It accurately preserves the person’s face in the new pose (reference image) in the output image.
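Depth conditioning typically works from a depth map rendered as a grayscale image. As a rough sketch of that preparation step, the snippet below rescales raw depth estimates into the 0 to 255 range. The `depth_to_control_image` helper and the sample values are hypothetical, and whether near surfaces end up bright or dark depends on the depth estimator, so treat the orientation here as an assumption.

```python
def depth_to_control_image(depth_rows):
    """Rescale raw depth values to integers in 0..255, row by row."""
    flat = [d for row in depth_rows for d in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant depth map carries no structure; return an all-black image.
        return [[0 for _ in row] for row in depth_rows]
    scale = 255 / (hi - lo)
    return [[round((d - lo) * scale) for d in row] for row in depth_rows]


raw_depth = [[1.0, 2.0], [3.0, 6.0]]   # toy depth estimates
control = depth_to_control_image(raw_depth)
print(control)
```

The resulting grid is the kind of input a depth-conditioned model can use to keep the pose and structure of the reference image.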

In conclusion, Stable Diffusion, when combined with zero-shot learning, emerges as a potent tool for image generation and transformation. Through the exploration of examples in this blog post, we’ve seen the immense potential of zero-shot learning for image generation with Stable Diffusion.

Explore these models on Segmind. Get 100 free inferences every day.