A Beginner's Guide to ControlNet SoftEdge

ControlNet SoftEdge revolutionizes diffusion models by conditioning on soft edges, ensuring fundamental features are preserved with a seamless blend.

In this blog post, we will take a look at ControlNet SoftEdge. Going beyond ordinary contours, this technology refines traditional diffusion models by highlighting essential features while gently minimizing brush strokes. The result? A captivating visual experience marked by depth and subtlety.

At its core, ControlNet SoftEdge is a neural network structure that conditions diffusion models on soft edges. We will look at the architecture of ControlNet before diving into soft edges and how they help improve an existing image.

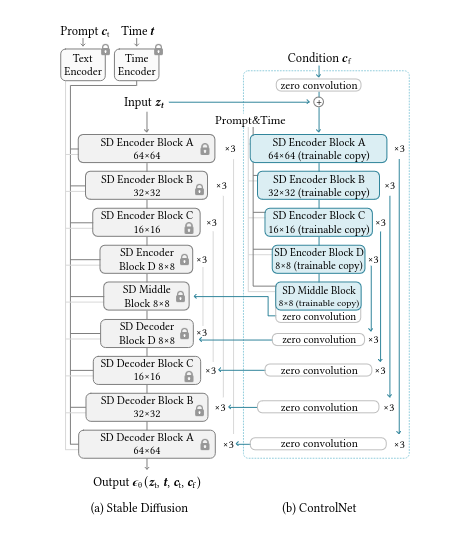

The architecture of ControlNet

The ControlNet architecture is designed to learn a wide range of conditional controls using a method called "zero convolutions": convolution layers whose weights and biases are initialized to zero.

Because these layers start at zero, the added control branch contributes nothing at the beginning of fine-tuning, so no disruptive noise is injected into the pre-trained model and its integrity is preserved. As training progresses, the parameters gradually grow from zero, which allows spatial conditioning controls to be integrated smoothly into the overall model structure.
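To make the idea concrete, here is a minimal NumPy sketch of a zero-initialized 1x1 convolution added on top of a frozen feature map. The function name `conv1x1`, the tensor shapes, and the random feature maps are illustrative assumptions, not code from ControlNet itself.

```python
import numpy as np

def conv1x1(x, weight, bias):
    """Apply a 1x1 convolution to a feature map x of shape (C_in, H, W)."""
    # weight has shape (C_out, C_in); a 1x1 conv is a per-pixel linear map.
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

rng = np.random.default_rng(0)
frozen_features = rng.normal(size=(4, 8, 8))   # stands in for a frozen, pre-trained block's output
control_features = rng.normal(size=(4, 8, 8))  # stands in for the trainable control branch's output

# Zero convolution: weight and bias both start at zero.
zero_weight = np.zeros((4, 4))
zero_bias = np.zeros(4)

combined = frozen_features + conv1x1(control_features, zero_weight, zero_bias)

# Before any training step, the control branch leaves the frozen features unchanged.
print(np.array_equal(combined, frozen_features))
```

At initialization the combined output is identical to the frozen model's output, which is why fine-tuning starts from the pre-trained behavior rather than from a perturbed one.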

Now, let's explore how ControlNet SoftEdge comes into play.

What is ControlNet SoftEdge?

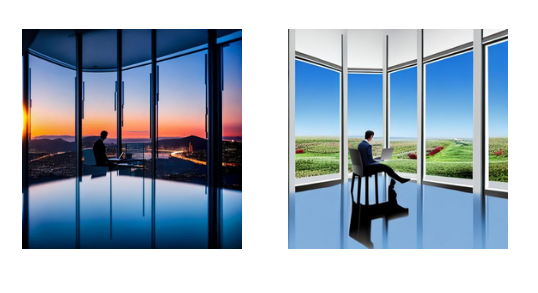

ControlNet SoftEdge is a variant of ControlNet that focuses on extracting edges from images to build a sketch-like representation. It can extract line drawings from diverse patterns, and the selective extraction preserves intricate details while leaving out noise.

ControlNet SoftEdge extracts the contours, lines, and edges present in the input image and then colorizes them to generate the final image. As a result, the final image stays faithful to the structure of the original.

Thus we get to work with a model that provides precision and control over the finer details of the existing image.
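The difference between a soft edge map and a hard line drawing can be sketched in a few lines of NumPy. The example below is not the edge detector used by the model; it substitutes a simple Sobel gradient magnitude purely to illustrate what "soft" means, namely graded edge intensities instead of thresholded black-and-white lines. The function name `soft_edge_map` and the toy image are assumptions made for this illustration.

```python
import numpy as np

def soft_edge_map(gray):
    """Return a soft (non-binary) edge map in [0, 1] for a 2D grayscale image."""
    gray = gray.astype(np.float64)
    padded = np.pad(gray, 1, mode="edge")
    # Sobel responses built from shifted views of the padded image.
    gx = (padded[:-2, 2:] + 2 * padded[1:-1, 2:] + padded[2:, 2:]
          - padded[:-2, :-2] - 2 * padded[1:-1, :-2] - padded[2:, :-2])
    gy = (padded[2:, :-2] + 2 * padded[2:, 1:-1] + padded[2:, 2:]
          - padded[:-2, :-2] - 2 * padded[:-2, 1:-1] - padded[:-2, 2:])
    magnitude = np.hypot(gx, gy)
    peak = magnitude.max()
    # Keep graded intensities instead of thresholding to hard lines.
    return magnitude / peak if peak > 0 else magnitude

# Toy input: a dark image with a bright square in the middle.
image = np.zeros((16, 16))
image[4:12, 4:12] = 1.0
edges = soft_edge_map(image)

print(edges.shape, float(edges.min()), float(edges.max()))
```

Flat regions map to zero, strong boundaries map to values near one, and pixels next to a boundary receive intermediate values. A map like this is the kind of conditioning image the model receives alongside the text prompt.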

Parameters in ControlNet SoftEdge

Steps

This parameter sets how many times the model removes noise to refine the image. The process starts from random noise and is guided by the text prompt. With each iteration, the model cleans up more of this noise, improving the image quality.

Guidance Scale

The guidance scale controls how closely the generated images match the given text prompt. By adjusting the "guidance_scale" parameter, we can control how strongly the generated content follows the prompt.
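In Stable Diffusion style pipelines, this kind of control is commonly implemented as classifier-free guidance: the model predicts noise once with the prompt and once without it, and the scale extrapolates between the two. The sketch below assumes that formulation; the function name `apply_guidance` and the toy vectors are illustrative, and whether the hosted model uses exactly this formula is an assumption.

```python
import numpy as np

def apply_guidance(noise_uncond, noise_text, guidance_scale):
    """Classifier-free guidance: push the prediction toward the text-conditioned one."""
    return noise_uncond + guidance_scale * (noise_text - noise_uncond)

# Toy vectors standing in for the model's two noise predictions.
noise_uncond = np.array([0.0, 0.0])  # prediction without the prompt
noise_text = np.array([1.0, -1.0])   # prediction with the prompt

for scale in (1.0, 7.5):
    print(scale, apply_guidance(noise_uncond, noise_text, scale))
```

A scale of 1.0 reproduces the text-conditioned prediction, while larger values such as 7.5 exaggerate the difference the prompt makes. This is why higher scales follow the prompt more strictly.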

Negative Prompt

Negative prompts allow users to describe what they do not want to see in the generated image. These prompts guide the image generation process, instructing it to exclude certain elements based on the user-provided text.

By using negative prompts, users can prevent the generation of specific objects and styles, address image abnormalities, and enhance overall image quality.
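One common way to implement negative prompts in diffusion pipelines is to let the negative-prompt prediction take the place of the unconditional prediction in the guidance formula, so each step moves toward the prompt and away from the negative prompt. The sketch below assumes that approach; the function name `guide_with_negative` and the toy vectors are hypothetical and only illustrate the arithmetic.

```python
import numpy as np

def guide_with_negative(noise_prompt, noise_negative, guidance_scale):
    """Guidance where the negative-prompt prediction replaces the unconditional one."""
    return noise_negative + guidance_scale * (noise_prompt - noise_negative)

# Toy vectors standing in for noise predictions.
noise_prompt = np.array([1.0, 0.0])    # conditioned on the prompt
noise_negative = np.array([0.0, 1.0])  # conditioned on the negative prompt

guided = guide_with_negative(noise_prompt, noise_negative, guidance_scale=7.5)
print(guided)
```

In this toy case the second component, which only the negative prompt contributed to, ends up negative: the guided prediction is pushed away from whatever the negative prompt describes.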

Strength

This parameter determines how strongly the reference image is transformed. Lower values keep the output closer to the reference image, while higher values allow larger alterations, letting users tailor the output to their creative vision.
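In many image-to-image diffusion pipelines, a strength value between 0 and 1 is translated into the number of denoising steps that are actually applied to the reference image. The sketch below assumes that convention; the function name `steps_actually_run` is hypothetical, and whether this API interprets strength the same way is an assumption.

```python
def steps_actually_run(num_inference_steps, strength):
    """Number of denoising steps applied to the reference image (img2img-style)."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)

for strength in (0.25, 0.5, 1.0):
    print(strength, steps_actually_run(25, strength))
```

Under this convention, a strength of 1 runs every step and allows the largest change, while smaller values skip the early, noisiest steps and stay closer to the reference.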

Scheduler

In the Stable Diffusion pipeline, schedulers are algorithms that work alongside the UNet component to drive the denoising process, which happens step by step. At each step, the scheduler uses the UNet's output to remove some of the noise from the image, gradually turning a random noisy image into a high-quality one and creating new data samples along the way.
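The step-by-step loop can be illustrated with a toy scheduler. The sketch below uses a deterministic DDIM-style update rather than the UniPC scheduler used in the API example later in this post, because DDIM is short enough to write out in full. The linear beta schedule, the function names, and the stand-in "model" that already knows the true noise are all assumptions made for illustration; a real pipeline would call the UNet at that point.

```python
import numpy as np

def make_alphas_cumprod(num_train_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal fraction for a linear beta noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_train_steps)
    return np.cumprod(1.0 - betas)

def ddim_step(x_t, predicted_noise, alpha_t, alpha_prev):
    """One deterministic DDIM update from noise level alpha_t to alpha_prev."""
    x0_estimate = (x_t - np.sqrt(1.0 - alpha_t) * predicted_noise) / np.sqrt(alpha_t)
    return np.sqrt(alpha_prev) * x0_estimate + np.sqrt(1.0 - alpha_prev) * predicted_noise

rng = np.random.default_rng(0)
clean = rng.normal(size=(4, 4))  # stands in for the clean image (or latent)
noise = rng.normal(size=(4, 4))

alphas = make_alphas_cumprod()
num_inference_steps = 25
# Pick 25 evenly spaced timesteps out of the 1000 training timesteps, noisiest first.
timesteps = np.linspace(len(alphas) - 1, 0, num_inference_steps).round().astype(int)

# Start from the noisiest level in the schedule.
x = np.sqrt(alphas[timesteps[0]]) * clean + np.sqrt(1.0 - alphas[timesteps[0]]) * noise

for i, t in enumerate(timesteps):
    # A real pipeline would call the UNet here; this toy "model" knows the true noise.
    predicted_noise = noise
    alpha_prev = alphas[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
    x = ddim_step(x, predicted_noise, alphas[t], alpha_prev)

print(np.allclose(x, clean))
```

With a perfect noise prediction, the loop walks the sample down the noise schedule and recovers the clean input. Different schedulers differ mainly in how they compute each update and how many steps they need for good results.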

Generating Images

To initiate the image creation process using the ControlNet SoftEdge API from Segmind, follow this step-by-step workflow:

- POST Generate API: Utilize the API to submit a request for image generation

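In Bash:

    # Download the input image and base64-encode it (toB64 is a Python helper, not a shell command)
    IMAGE_B64=$(curl -s "https://segmind.com/soft-edge-input.jpeg" | base64 | tr -d '\n')
    # Build the JSON payload and send it on stdin so a large image does not hit argument-length limits
    printf '{"image":"%s","samples":1,"prompt":"royal chamber with fancy bed","negative_prompt":"Disfigured, cartoon, blurry, nude","scheduler":"UniPC","num_inference_steps":25,"guidance_scale":7.5,"strength":1,"seed":131487365682,"base64":false}' "$IMAGE_B64" |
      curl -X POST \
        -H "x-api-key: YOUR API-KEY" \
        -H "Content-Type: application/json" \
        -d @- \
        "https://api.segmind.com/v1/sd1.5-controlnet-softedge"

In Python: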

    import requests
    from base64 import b64encode

    def toB64(imgUrl):
        # Download the image and return its contents as a base64 string
        return b64encode(requests.get(imgUrl).content).decode("utf-8")

    api_key = "YOUR API-KEY"
    url = "https://api.segmind.com/v1/sd1.5-controlnet-softedge"

    # Request payload
    data = {
        "image": toB64('https://segmind.com/soft-edge-input.jpeg'),
        "samples": 1,
        "prompt": "royal chamber with fancy bed",
        "negative_prompt": "Disfigured, cartoon, blurry, nude",
        "scheduler": "UniPC",
        "num_inference_steps": 25,
        "guidance_scale": 7.5,
        "strength": 1,
        "seed": 131487365682,
        "base64": False
    }

    # Send the request with the API key in the header
    response = requests.post(url, json=data, headers={'x-api-key': api_key})
    print(response)

Let’s take a look at a few images transformed using ControlNet SoftEdge.

Prompt: A Japanese woman standing behind a garden, illustrated by Ghibli Studios

Prompt: streets of Tokyo, well defined, aesthetic, symmetrical

Prompt: A guardian goes in the sun towards the sun on a path into the future in winter, mystical, Christmas.

We'll dive into the intricacies of three captivating use cases of ControlNet SoftEdge in another blog post, but here is a quick gist of what can be achieved using this model.

1. Texture, Color, and Material: ControlNet SoftEdge serves as a dynamic tool for altering the texture, color, and appearance of materials. Whether you're looking to refine the subtleties of a surface or completely transform its visual identity, this feature provides an unparalleled level of control.

2. Real-Life to Illustration Conversion: Seamlessly convert real-life images into captivating illustrations, and vice versa. It opens doors to a realm where the boundaries between reality and artistic representation blur, offering a unique perspective to your visual narratives.

3. Vibrant Enhancements to Line Drawings: Elevate your creative expressions by adding vibrant color to line drawings.

Conclusion

Segmind’s ControlNet SoftEdge model is your go-to for elevating your image enhancement game. It goes beyond the ordinary, emphasizing feature preservation and toning down brush strokes for visuals that are not only captivating but also deep and subtle.