A Step-by-Step Guide to Mastering the Segment Anything Model (SAM)

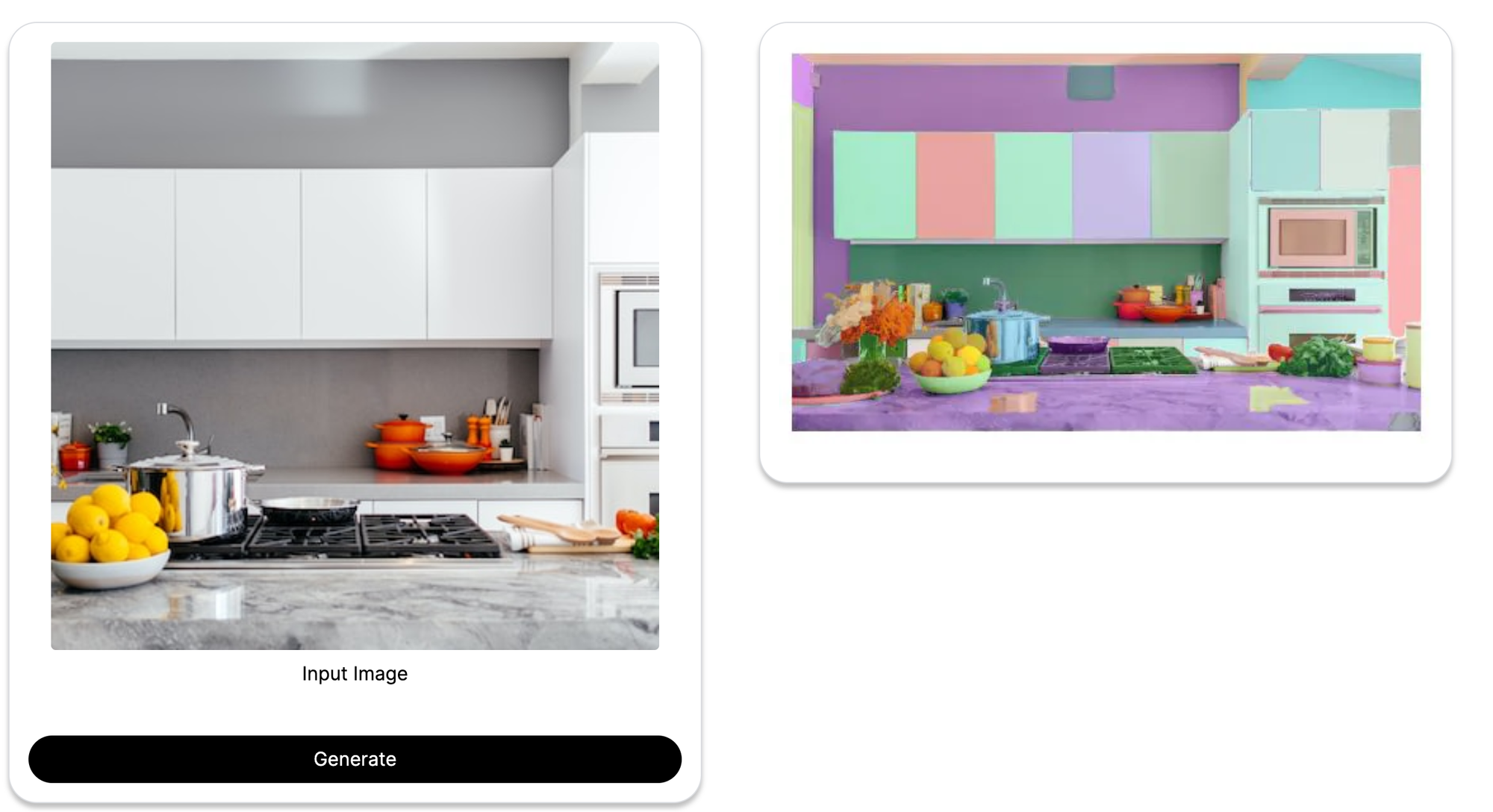

Learn how the Segment Anything Model from Meta works, and how you can get started and use the model to segment anything from animals to medical images.

Computer vision has advanced rapidly over the last decade, largely because of the development of powerful deep learning models. One such model is the Segment Anything Model, or SAM.

SAM was introduced in April 2023 by a team of researchers at Meta AI's FAIR lab. The model was trained to segment objects of interest in any image. It can identify and segment, or “cut out”, a wide variety of objects, including people, animals, vehicles, and objects in the natural world.

SAM under the Hood

What stands out about SAM is its zero-shot generalization: it can segment objects and kinds of images it was not explicitly trained on, and it was one of the first large-scale segmentation models to do so. Because no existing web-scale segmentation dataset was large enough to train such a model, the Meta team built a “data engine” specifically to produce its training data.

SAM's results rest on two key techniques:

- Vision Transformer (ViT): ViT is a type of deep learning model designed for image understanding. SAM uses a ViT (pre-trained as a masked autoencoder) as its image encoder. ViT models learn the relationships between different parts of an image, which helps SAM segment objects accurately.

- Prompt-based segmentation: SAM is promptable, which means that instead of predicting a fixed set of classes, it segments whatever the user indicates with a prompt. In the released model, a prompt is a set of foreground/background points, a bounding box, or a rough mask. For example, a single click on a cat can be enough for the model to return a mask of the cat. Free-form text prompts were explored in the original paper only as a proof of concept.
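
To make the prompt types concrete, here is a minimal sketch in plain Python of how point and box prompts might be packaged before being handed to a predictor. It assumes the conventions used by `SamPredictor.predict` in Meta's `segment-anything` repository: points are `(x, y)` pixel coordinates paired with a label of 1 (foreground) or 0 (background), and a box is given as `(x0, y0, x1, y1)`. The `PointPrompt` class and `build_prompt_inputs` helper are hypothetical names for illustration, and plain lists stand in for the NumPy arrays the real API expects.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class PointPrompt:
    """One click: pixel coordinates plus whether it marks the object."""
    x: float
    y: float
    foreground: bool = True  # True -> label 1 (object), False -> label 0 (background)

def build_prompt_inputs(
    points: List[PointPrompt],
    box: Optional[Tuple[float, float, float, float]] = None,
) -> dict:
    """Pack prompts into the layout used by SamPredictor.predict, as plain lists.

    point_coords: N x 2 list of [x, y]; point_labels: N labels of 1 or 0;
    box: [x0, y0, x1, y1] in pixels. Missing prompts become None.
    """
    box_xyxy = None
    if box is not None:
        x0, y0, x1, y1 = box
        if x1 <= x0 or y1 <= y0:
            raise ValueError("box must be (x0, y0, x1, y1) with x1 > x0 and y1 > y0")
        box_xyxy = [float(x0), float(y0), float(x1), float(y1)]
    return {
        "point_coords": [[p.x, p.y] for p in points] or None,
        "point_labels": [1 if p.foreground else 0 for p in points] or None,
        "box": box_xyxy,
    }

# A click on the cat, a background click on the sofa behind it, and a rough box.
inputs = build_prompt_inputs(
    [PointPrompt(120, 80), PointPrompt(300, 200, foreground=False)],
    box=(90, 40, 220, 180),
)
print(inputs["point_labels"])  # [1, 0]
```

With the real package, these lists would be converted with `np.array(...)` before being passed to `predict`. Background clicks are useful for telling the model what to leave out when the first mask covers too much.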

Here is a very high-level summary of how SAM processes an image:

- The model first takes an image as input.

- It passes the image through the ViT image encoder to compute an image embedding.

- A prompt encoder embeds the user's prompt (points, a box, or a mask), and a lightweight mask decoder combines it with the image embedding to segment the indicated object.

- The model outputs masks indicating which pixels belong to the object, each with a predicted quality score. For an ambiguous prompt, it can return several candidate masks.

- To train SAM, Meta's data engine used the model in the loop to build SA-1B, a dataset of over 1 billion masks on 11 million licensed and privacy-preserving images.
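
A key design point in these steps is that the heavy image encoder runs once per image, while the lightweight prompt encoder and mask decoder run once per prompt. The toy sketch below illustrates that split; `ToyImageEncoder`, `toy_decode`, `ToyPredictor`, and the made-up scores are hypothetical stand-ins that do no real segmentation. It also mimics SAM returning several candidate masks with quality scores, from which the highest-scoring one is kept.

```python
from typing import List, Optional, Tuple

Grid = List[List[int]]  # toy "image" and toy masks (1 = object, 0 = background)

class ToyImageEncoder:
    """Stand-in for SAM's heavy ViT image encoder; counts how often it runs."""
    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, image: Grid) -> Grid:
        self.calls += 1
        return image  # a real encoder returns a dense feature embedding

def toy_decode(embedding: Grid, point: Tuple[int, int]) -> List[Tuple[Grid, float]]:
    """Stand-in for the prompt encoder + mask decoder.

    Returns candidate masks with made-up quality scores, mimicking how SAM
    returns several masks for one ambiguous click.
    """
    x, y = point
    target = embedding[y][x]
    exact = [[1 if v == target else 0 for v in row] for row in embedding]  # just the clicked region
    coarse = [[1 if v != 0 else 0 for v in row] for row in embedding]      # everything non-zero
    return [(exact, 0.9), (coarse, 0.6)]

class ToyPredictor:
    def __init__(self, encoder: ToyImageEncoder) -> None:
        self.encoder = encoder
        self.embedding: Optional[Grid] = None

    def set_image(self, image: Grid) -> None:
        # Expensive step: encode once per image and cache the embedding.
        self.embedding = self.encoder(image)

    def predict(self, point: Tuple[int, int]) -> Grid:
        # Cheap step: reuse the cached embedding for every new prompt.
        if self.embedding is None:
            raise RuntimeError("call set_image() first")
        candidates = toy_decode(self.embedding, point)
        best_mask, _score = max(candidates, key=lambda c: c[1])
        return best_mask

image = [[0, 0, 2, 2],
         [0, 1, 1, 2],
         [0, 1, 1, 0]]
encoder = ToyImageEncoder()
predictor = ToyPredictor(encoder)
predictor.set_image(image)
mask_a = predictor.predict((1, 1))  # click on a "1" pixel
mask_b = predictor.predict((3, 0))  # click on a "2" pixel
print(encoder.calls)  # 1: two prompts, one encoder pass
```

Caching the embedding is what makes interactive use practical: the user can keep clicking on the same image without paying for the encoder again.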

Advantages of SAM

- High accuracy: SAM produces high-quality masks. In the original paper, it was evaluated zero-shot on a suite of 23 diverse segmentation datasets, including Cityscapes, one of the best-known benchmark datasets for image segmentation, and its single-click masks were often competitive with or better than those of strong interactive segmentation baselines.

- Versatility: SAM can segment a wide variety of objects, including people, animals, vehicles, and objects in the natural world, without being retrained for each new category.

- Ease of use: SAM is relatively easy to use, even for ML developers who are not familiar with image segmentation, because a few clicks or a bounding box are enough to prompt it.
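
Segmentation benchmarks usually score a predicted mask by its intersection over union (IoU) with a ground-truth mask, IoU = |A ∩ B| / |A ∪ B|, and report the mean over many masks (mIoU). Below is a minimal sketch of that metric for binary masks stored as nested lists; `mask_iou` and `mean_iou` are illustrative helpers, not part of any SAM package.

```python
from typing import List, Sequence, Tuple

Mask = List[List[int]]  # binary mask: 1 = object, 0 = background

def mask_iou(pred: Mask, truth: Mask) -> float:
    """IoU = |pred AND truth| / |pred OR truth| for two same-sized binary masks."""
    if len(pred) != len(truth) or any(len(a) != len(b) for a, b in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Convention used here: two empty masks count as a perfect match.
    return 1.0 if union == 0 else inter / union

def mean_iou(pairs: Sequence[Tuple[Mask, Mask]]) -> float:
    """Average IoU over (prediction, ground truth) pairs."""
    if not pairs:
        raise ValueError("need at least one pair")
    return sum(mask_iou(p, t) for p, t in pairs) / len(pairs)

pred = [[1, 1, 0],
        [1, 1, 0]]
truth = [[0, 1, 1],
         [0, 1, 1]]
print(mask_iou(pred, truth))  # 2 shared pixels / 6 covered pixels
```

IoU penalizes both missed object pixels and spilled background pixels, which is why it is preferred over plain per-pixel accuracy when objects are small relative to the image.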

Applications and Getting Started

Since its release, SAM has been used for a variety of purposes, including:

- Object tracking: Combined with a tracking method, SAM can help follow objects across video frames, for example tracking the movement of people or animals in CCTV footage or monitoring the progress of a manufacturing process in an industrial setting.

- Image editing: The most obvious application for SAM is image editing, where its masks make it easy to isolate, remove, or replace objects in an image.

- Virtual reality (VR) and augmented reality (AR): SAM can enhance VR and AR experiences by segmenting objects in the real world, helping developers design realistic pass-through visualizations.

- Robotics: SAM can be used to help robots interact with the environment. This can be done by segmenting the objects in the environment and using that information to plan the robot's movements.

- Medical image segmentation: SAM is already being tested on segmenting objects in medical images, such as tumors, organs, and blood vessels. This could help doctors speed up diagnosis and make initial assessments more accurate.
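
In several of these applications, a mask from SAM is post-processed rather than used directly: applied to the image to cut an object out for editing, or reduced to a bounding box for robot planning or tracking. The sketch below shows both operations on a tiny grayscale image stored as nested lists; `cut_out` and `mask_to_box` are hypothetical helpers, and a real pipeline would more likely work on NumPy arrays.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixel values
Mask = List[List[int]]   # 1 = object, 0 = background

def cut_out(image: Image, mask: Mask, fill: int = 0) -> Image:
    """Keep pixels inside the mask and replace everything else with `fill`."""
    return [
        [px if m else fill for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]

def mask_to_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Tightest (x0, y0, x1, y1) box around the mask, inclusive; None if empty."""
    xs = [x for row in mask for x, m in enumerate(row) if m]
    ys = [y for y, row in enumerate(mask) for m in row if m]
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)

image = [[10, 20, 30, 40],
         [50, 60, 70, 80],
         [90, 15, 25, 35]]
mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0]]
print(cut_out(image, mask))  # [[0, 0, 0, 0], [0, 60, 70, 0], [0, 15, 0, 0]]
print(mask_to_box(mask))     # (1, 1, 2, 2)
```

One simple heuristic for video is to reuse the box from one frame as the box prompt for the next frame; this is a pattern you would build around SAM, not something the image model does on its own.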

SAM is a powerful tool that can be used for a variety of applications. If you are looking for a way to segment objects in images, SAM is a great option to consider. However, it pays to keep a few limitations in mind:

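- High computational complexity: SAM is computationally expensive, mostly because of its large ViT image encoder, so it may not suit every application, and you may need to tune or fine-tune the model to get it working for your particular dataset and application. The prompt encoder and mask decoder, by contrast, are lightweight, so once an image has been encoded, new prompts can be answered quickly.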

- Low interpretability: Like most large deep learning models, SAM is hard to interpret, so it can be difficult to understand why it produces a particular mask. This is a challenge for users and developers who need to explain the model's predictions.

To get started, you can download the code and model checkpoints from Meta's segment-anything repository on GitHub and run SAM locally on your own computer.
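
A minimal sketch of running SAM locally with Meta's `segment-anything` package follows. It assumes you have installed the package (for example with `pip install git+https://github.com/facebookresearch/segment-anything.git`) along with PyTorch, torchvision, and NumPy, and downloaded a checkpoint such as `sam_vit_h_4b8939.pth` linked from the repository's README. The checkpoint path and the `segment_from_click` and `best_mask_index` helpers are placeholders for illustration; the `sam_model_registry`, `SamPredictor`, `set_image`, and `predict` calls follow the repository's documented API, but check the README for current details.

```python
from typing import List

def best_mask_index(scores: List[float]) -> int:
    """Index of the candidate mask with the highest predicted quality score."""
    if not scores:
        raise ValueError("no candidate masks")
    return max(range(len(scores)), key=lambda i: scores[i])

def segment_from_click(image_rgb, x: int, y: int,
                       checkpoint: str = "sam_vit_h_4b8939.pth",  # placeholder path
                       model_type: str = "vit_h"):
    """Segment the object under one foreground click; returns (mask, score).

    image_rgb is an H x W x 3 uint8 NumPy array in RGB order.
    Imports are local so this module still loads without SAM installed.
    """
    import numpy as np
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint)
    predictor = SamPredictor(sam)
    predictor.set_image(image_rgb)  # runs the heavy image encoder once
    masks, scores, _low_res_logits = predictor.predict(
        point_coords=np.array([[x, y]]),
        point_labels=np.array([1]),  # 1 = foreground click
        multimask_output=True,       # return several candidate masks
    )
    best = best_mask_index(scores.tolist())
    return masks[best], float(scores[best])

# Example (needs the package, a checkpoint, and an RGB image array):
# mask, score = segment_from_click(image_rgb, x=500, y=375)
```

For many prompts on the same image, keep one `SamPredictor` around and call `predict` repeatedly instead of reloading the model and re-encoding the image each time.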

However, if you want to get the most out of the model without worrying about details like running the latest version, optimizing cost, and perfecting outputs, you can sign up for our free-to-use cloud service.

The service lets you use SAM and 30 other ML models without having to install or maintain them yourself.