Understanding Dreambooth LoRA Fine-tuning Parameters

In this blog post, we delve into the training parameters that are crucial for effectively fine-tuning with the Dreambooth LoRA pipeline. By understanding these parameters, you can make adjustments tailored to your specific requirements, optimizing your results.

Segmind's Dreambooth LoRA pipeline fine-tunes the SDXL model for personalized image generation. A detailed guide to fine-tuning SDXL with Dreambooth LoRA and a post outlining its use cases are already available, so this post focuses on the training parameters themselves. The default settings are tuned to work well out of the box, but understanding each parameter lets you adjust it to suit your specific needs.

Resolution

The resolution of the input images is set to 1024x1024 pixels, a standard size for generating high-quality images. Larger images are also acceptable as long as they have a 1:1 aspect ratio; they will be rescaled to 1024x1024.
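As a quick pre-flight check before uploading a dataset, the rule above can be sketched as follows. The helper name `check_input_size` is hypothetical, and treating images smaller than 1024 pixels as unsuitable is an assumption, since the post only covers 1024x1024 and larger.

```python
TARGET = 1024  # training resolution stated in this post

def check_input_size(width: int, height: int, target: int = TARGET) -> bool:
    """Return True if the image is square (1:1) and at least target x target pixels."""
    return width == height and width >= target

# Example sizes (illustrative values only)
for size in [(1024, 1024), (2048, 2048), (1600, 1200), (512, 512)]:
    print(size, "ok" if check_input_size(*size) else "needs cropping or replacing")
```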

Repeats

This parameter controls the number of repeats for training. In the default settings, it's set to 80, which means that during the training process, the model will iterate over the dataset 80 times to refine the generated images. You can adjust this value within the range of 30 to 120, depending on your specific requirements. A higher number of repeats can lead to more refined results but also increases training time.

Learning Rate

The learning rate determines how quickly the model adapts during training, which makes it one of the most important training parameters. A higher learning rate speeds up training but can cause the model to overshoot and miss finer details in the data, while a lower one learns more carefully but takes longer. Keep this trade-off between speed and accuracy in mind when choosing a value. The default value is 0.0001.

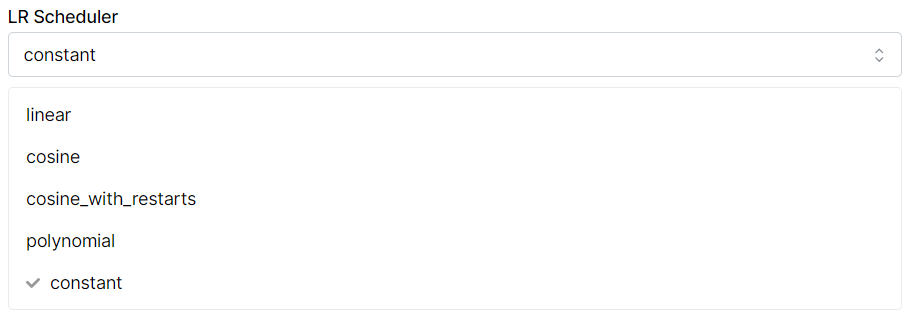
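To make the speed-versus-accuracy trade-off concrete, here is a toy sketch that is not part of the Segmind pipeline: plain gradient descent on f(x) = x², whose optimum is x = 0. The function `descend` and the three learning rates are illustrative assumptions, chosen only so the effect is visible within a few steps.

```python
def descend(lr: float, steps: int = 10, x: float = 1.0) -> float:
    """Run gradient descent on f(x) = x**2 and return the final x (optimum is 0)."""
    for _ in range(steps):
        grad = 2.0 * x      # derivative of x**2
        x = x - lr * grad   # standard gradient descent update
    return x

for lr in (0.1, 0.45, 1.1):
    print(f"lr={lr}: x after 10 steps = {descend(lr):.3g}")
```

In this toy setting the smallest rate approaches the optimum slowly, the middle rate gets there much faster, and the largest rate overshoots on every step and moves away from the optimum.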

LR Scheduler

By default, the LR (learning rate) scheduler is set to "constant," meaning the learning rate stays the same throughout training. This works well for many scenarios, but schedules such as cosine or linear can refine the training further by lowering the learning rate as training progresses. A higher learning rate early on lets the model quickly grasp the main features of the dataset; gradually reducing it afterwards lets the model focus on finer details. This balances the need for speed in the early stages of learning with the precision required in later stages.

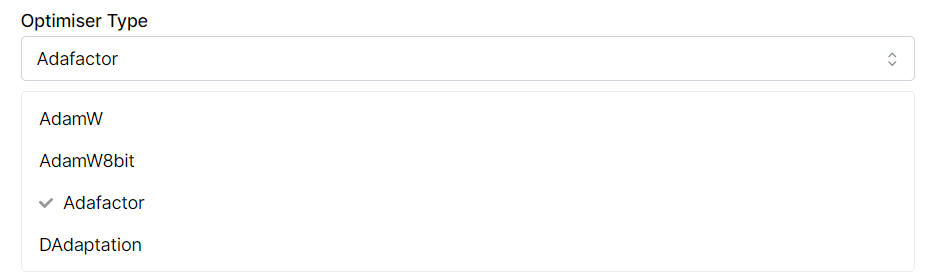
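The three schedules mentioned above can be sketched with their textbook formulas. This is a minimal illustration without warmup; the function `lr_at` is a hypothetical helper, and the pipeline's actual implementation may differ in details.

```python
import math

def lr_at(step: int, total_steps: int, base_lr: float = 1e-4,
          schedule: str = "constant") -> float:
    """Learning rate at a given step for a constant, linear, or cosine schedule."""
    progress = step / total_steps  # 0.0 at the start, 1.0 at the end
    if schedule == "constant":
        return base_lr
    if schedule == "linear":
        return base_lr * (1.0 - progress)                         # straight line down to 0
    if schedule == "cosine":
        return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))  # smooth decay to 0
    raise ValueError(f"unknown schedule: {schedule}")

for name in ("constant", "linear", "cosine"):
    values = [lr_at(s, 100, schedule=name) for s in (0, 25, 50, 75, 100)]
    print(name, [f"{v:.2e}" for v in values])
```

Both decaying schedules start at the base learning rate and end at zero; cosine stays higher for longer at the start and flattens out near the end.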

Optimizer Type

The optimizer determines how the model weights are updated during training. Different optimizers use different update rules, and the choice affects training efficiency, convergence speed, and the overall performance of the model. Adafactor is the optimizer chosen for this pipeline. You may experiment with other optimizers, such as Adam, to see whether they yield better results for your specific dataset.

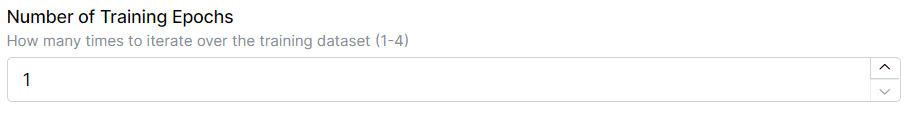

Number of Training Epochs

Training epochs in LoRA dictate how many times the model processes the entire dataset. One epoch means the model goes through the entire dataset once. If your dataset consists of 50 images and each image is repeated 10 times within an epoch, that's 500 individual training sessions (50 images x 10 repeats). Doubling the epochs to two repeats this entire process, for a total of 1,000 training sessions. The right number of epochs depends on the complexity of the task and the desired depth of learning. The default is 1, which means the model iterates over the training dataset just once. Depending on the dataset and results, you can increase the number of epochs to give the model more opportunity to learn.

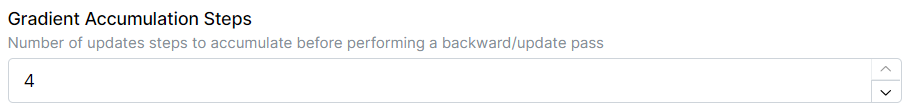
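The arithmetic in the example above can be written out as a small helper. The function name `samples_seen` is hypothetical; the numbers are the ones used in the text.

```python
def samples_seen(num_images: int, repeats: int, epochs: int) -> int:
    """Total number of image presentations over the whole training run."""
    return num_images * repeats * epochs

print(samples_seen(50, 10, 1))  # 500
print(samples_seen(50, 10, 2))  # 1000
```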

Gradient Accumulation Steps

Gradient accumulation steps in LoRA refer to the number of batches whose gradients the model accumulates before performing a weight update. Essentially, it controls how much data the model processes before updating its weights. For example, the default setting is 4, which means the model processes four batches of data, accumulates the gradients from all these batches, and then updates the weights once. This emulates a batch four times larger without the memory cost of holding that larger batch at once; the trade-off is that the weights are updated less frequently.
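The following toy sketch shows the mechanism on a one-parameter model (fitting y ≈ w·x), not on SDXL. The helpers `grad` and `train` and the data are illustrative assumptions. It shows that accumulating over four micro-batches of size 1 gives the same weight updates as using one batch of size 4.

```python
def grad(w: float, batch) -> float:
    """Gradient of mean squared error for the model y = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def train(data, micro_batch_size: int, accum_steps: int, lr: float = 0.01):
    """Return (final weight, number of weight updates)."""
    w, accumulated, updates = 0.0, 0.0, 0
    batches = [data[i:i + micro_batch_size]
               for i in range(0, len(data), micro_batch_size)]
    for i, batch in enumerate(batches, start=1):
        accumulated += grad(w, batch) / accum_steps  # average over the group
        if i % accum_steps == 0:                     # update only every accum_steps batches
            w -= lr * accumulated
            accumulated = 0.0
            updates += 1
    return w, updates  # leftover batches that do not fill a group are ignored here

data = [(float(x), 2.0 * x) for x in range(1, 9)]  # 8 samples of y = 2x
w_acc, n_acc = train(data, micro_batch_size=1, accum_steps=4)
w_big, n_big = train(data, micro_batch_size=4, accum_steps=1)
print(w_acc, n_acc)
print(w_big, n_big)
```

Both runs perform two weight updates and end at the same weight (up to floating-point rounding), but the first only ever holds one sample at a time.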

LoRA Rank

The LoRA rank sets the complexity of the adjustments made to the model during fine-tuning. A lower rank gives a simpler, more efficient adjustment, but it reduces the model's capacity to capture task-specific information, which can affect adaptation quality. On the other hand, a higher rank increases the precision of the adjustments but requires more computational resources. The rank also affects the model file size: a higher rank stores larger weight adjustments and so produces a larger file, whereas a lower rank keeps it smaller. The right LoRA rank balances precision against computational resources for the specific task and model. The default LoRA rank is 128.
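The link between rank and file size follows from how LoRA stores its update: instead of a full d_out x d_in matrix, it keeps two factors of shapes d_out x rank and rank x d_in. The sketch below counts the added parameters for a single layer; the layer width of 1280 is a hypothetical value for illustration, not a figure from the pipeline.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters added by LoRA for one layer: B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_in + d_out)

d_in = d_out = 1280   # hypothetical layer width, for illustration only
full = d_in * d_out   # size of a full-rank update to the same layer

for rank in (8, 32, 128):
    added = lora_param_count(d_in, d_out, rank)
    print(f"rank={rank}: {added} LoRA parameters ({added / full:.1%} of the full matrix)")
```

The count grows linearly with the rank, so going from rank 8 to rank 128 makes this part of the file 16 times larger.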

Noise Offset

Using Offset Noise during training can notably enhance the contrast in the images generated by the model. This enhancement becomes particularly evident in images with extreme lighting conditions, such as very dark or very bright environments. The introduction of Offset Noise during the training phase allows the model to better handle these challenging lighting scenarios, leading to improved image quality and more visually striking results. While Offset Noise can enhance image quality in dark and bright conditions, its impact extends to other scenarios as well. Its use might necessitate adjustments in your prompts to optimize the outputs, as it can affect the overall model behavior. This potential for broad impact is the primary reason why Offset Noise is set to 0 by default, offering a neutral starting point for fine-tuning. You can adjust this value within the range of 0 to 0.5 to introduce controlled noise into the training process, potentially leading to more diverse image outputs.
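A minimal sketch of the idea, assuming the commonly described formulation of offset noise: on top of the usual per-pixel Gaussian noise, each channel receives one extra random shift scaled by the noise offset, which changes the overall brightness of that channel. The function `offset_noise` is hypothetical, uses plain Python lists instead of tensors, and is not the pipeline's own code.

```python
import random

def offset_noise(channels: int, height: int, width: int,
                 noise_offset: float, rng: random.Random):
    """Per-pixel Gaussian noise plus one shared random shift per channel."""
    noise = []
    for _ in range(channels):
        shift = noise_offset * rng.gauss(0.0, 1.0)  # one value for the whole channel
        noise.append([[rng.gauss(0.0, 1.0) + shift for _ in range(width)]
                      for _ in range(height)])
    return noise

noise = offset_noise(4, 8, 8, noise_offset=0.1, rng=random.Random(0))
for c, channel in enumerate(noise):
    mean = sum(sum(row) for row in channel) / (8 * 8)
    print(f"channel {c}: mean noise = {mean:+.3f}")
```

With a noise offset of 0 the shift vanishes and this reduces to ordinary Gaussian noise, which matches the neutral default described above.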

Max Gradient Norm

Max gradient norm caps the overall magnitude (norm) of the gradients used in each weight update. It is set to 0 by default, which means no constraint is applied during training. You can set a positive value to limit the magnitude of the gradients and stabilize training if necessary.
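Gradient clipping by norm can be sketched as follows. The helper `clip_by_norm` is hypothetical and works on a flat list of gradient values; it treats 0 as "no clipping," following the default described above.

```python
import math

def clip_by_norm(grads, max_norm: float):
    """Rescale gradients so their L2 norm does not exceed max_norm (0 disables clipping)."""
    if max_norm <= 0:
        return list(grads)                      # default: leave gradients untouched
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)                      # already within the limit
    scale = max_norm / total_norm
    return [g * scale for g in grads]           # same direction, smaller magnitude

grads = [3.0, 4.0]                  # L2 norm is 5.0
print(clip_by_norm(grads, 0))       # unchanged
print(clip_by_norm(grads, 1.0))     # rescaled to norm 1.0
```

Clipping keeps the direction of the update and only shortens it, which is why it helps with occasional gradient spikes without otherwise changing training.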

Weight Decay

Weight decay is a regularization technique used in model training to reduce the influence of less important features and prevent overfitting. It works by shrinking the model's weights slightly toward zero at each update, which penalizes large weights. This keeps the model from relying too heavily on the training data and encourages it to prioritize the more significant features, improving its ability to generalize to new data. The default value is 0.01.
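The shrinking effect is easiest to see on a single weight with a plain gradient-descent update. This is an illustrative sketch only: the function `sgd_step` is hypothetical, and Adafactor applies weight decay inside its own update rule rather than in exactly this form.

```python
def sgd_step(weight: float, grad: float, lr: float, weight_decay: float) -> float:
    """One gradient step where the decay term pulls the weight toward zero."""
    return weight - lr * (grad + weight_decay * weight)

w = 1.0
for step in range(1, 4):
    # With a zero gradient, only the decay term acts on the weight.
    w = sgd_step(w, grad=0.0, lr=0.1, weight_decay=0.01)
    print(f"step {step}: w = {w:.6f}")
```

Even when the data provide no gradient, the weight drifts toward zero a little on every step, so only weights that the data keep reinforcing stay large.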

Relative Step

The relative step parameter is not specified in the default settings. It is an advanced option associated with the Adafactor optimizer: when enabled, the optimizer typically derives its step size from the training step count instead of using the fixed learning rate. Adjust it only if you have a specific requirement.
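For reference, the relative step size described for Adafactor is a capped inverse square root of the step count. The sketch below shows that formula before any parameter-scale factor is applied; the function name is hypothetical, and whether Segmind's pipeline exposes the option in exactly this form is an assumption.

```python
import math

def relative_step_size(step: int, cap: float = 1e-2) -> float:
    """Capped inverse-square-root step size, with steps counted from 1."""
    return min(cap, 1.0 / math.sqrt(step))

for step in (1, 100, 10_000, 40_000, 1_000_000):
    print(f"step {step}: relative step size = {relative_step_size(step):.4g}")
```

The step size stays at the cap early in training and only starts to shrink once the step count is large enough for the inverse square root to fall below it.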

Center Crop

Center crop is not specified in the default settings. When enabled, it crops each input image to its central region before training, so it is worth considering if the important details sit in the center of your images and you want the model to pay more attention to them.
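A center crop to the largest possible square can be computed as below. The helper `center_crop_box` is hypothetical; it returns a `(left, top, right, bottom)` box, the convention used by common imaging libraries.

```python
def center_crop_box(width: int, height: int):
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)

print(center_crop_box(1600, 1200))  # (200, 0, 1400, 1200)
print(center_crop_box(1024, 1024))  # (0, 0, 1024, 1024)
```

Anything outside the box is discarded, so subjects near the edges of a non-square image may be cut off.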

Recommended settings for Dreambooth LoRA fine-tuning

The default settings in Segmind's Dreambooth LoRA pipeline are tuned to deliver strong results when fine-tuning SDXL. While it's great to understand and experiment with the training parameters, sticking to these defaults is recommended for most users, especially if you're new to this process. They offer a solid foundation for generating high-quality images with minimal fuss.

| Parameter Name | Significance | Default Value |
|---|---|---|
| Resolution | Defines image size and quality; higher resolution for more detailed images. | 1024x1024 px |
| Repeats | Determines how many times the model will go through the dataset for training. | 80 |
| Learning Rate | Influences the speed and quality of learning; crucial for model's adaptation. | 0.0001 |
| LR Scheduler | Dictates how the learning rate changes over time; affects convergence speed. | Constant |
| Optimizer Type | Selects the algorithm for optimizing the learning process. | Adafactor |
| Number of Training Epochs | Sets how many complete passes the model makes over the entire dataset. | 1 |
| Gradient Accumulation Steps | Balances between training efficiency and memory usage; higher value for larger batches. | 4 |
| LoRA Rank | Determines the complexity of model adjustment; affects efficiency and precision. | 128 |
| Noise Offset | Adds variability to the training process; can help in generalization. | 0 |
| Max Gradient Norm | Prevents excessively large updates to weights, ensuring stable training. | 0 |
| Weight Decay | Reduces overfitting by penalizing large weights; improves model generalization. | 0.01 |
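
If you keep your own record of training settings, the defaults in the table can be captured in a small config with range checks. This is a sketch only: the key names and the `with_overrides` helper are hypothetical and do not reflect Segmind's API, while the values and the allowed ranges for repeats and noise offset come from this post.

```python
DEFAULTS = {
    "resolution": 1024,
    "repeats": 80,
    "learning_rate": 1e-4,
    "lr_scheduler": "constant",
    "optimizer_type": "adafactor",
    "num_train_epochs": 1,
    "gradient_accumulation_steps": 4,
    "lora_rank": 128,
    "noise_offset": 0.0,
    "max_grad_norm": 0.0,
    "weight_decay": 0.01,
}

def with_overrides(**overrides):
    """Return the defaults with overrides applied, checking the documented ranges."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise KeyError(f"unknown parameters: {sorted(unknown)}")
    config = {**DEFAULTS, **overrides}
    if not 30 <= config["repeats"] <= 120:
        raise ValueError("repeats must be between 30 and 120")
    if not 0.0 <= config["noise_offset"] <= 0.5:
        raise ValueError("noise_offset must be between 0 and 0.5")
    return config

config = with_overrides(repeats=100, noise_offset=0.05)
print(config["repeats"], config["noise_offset"], config["lora_rank"])
```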