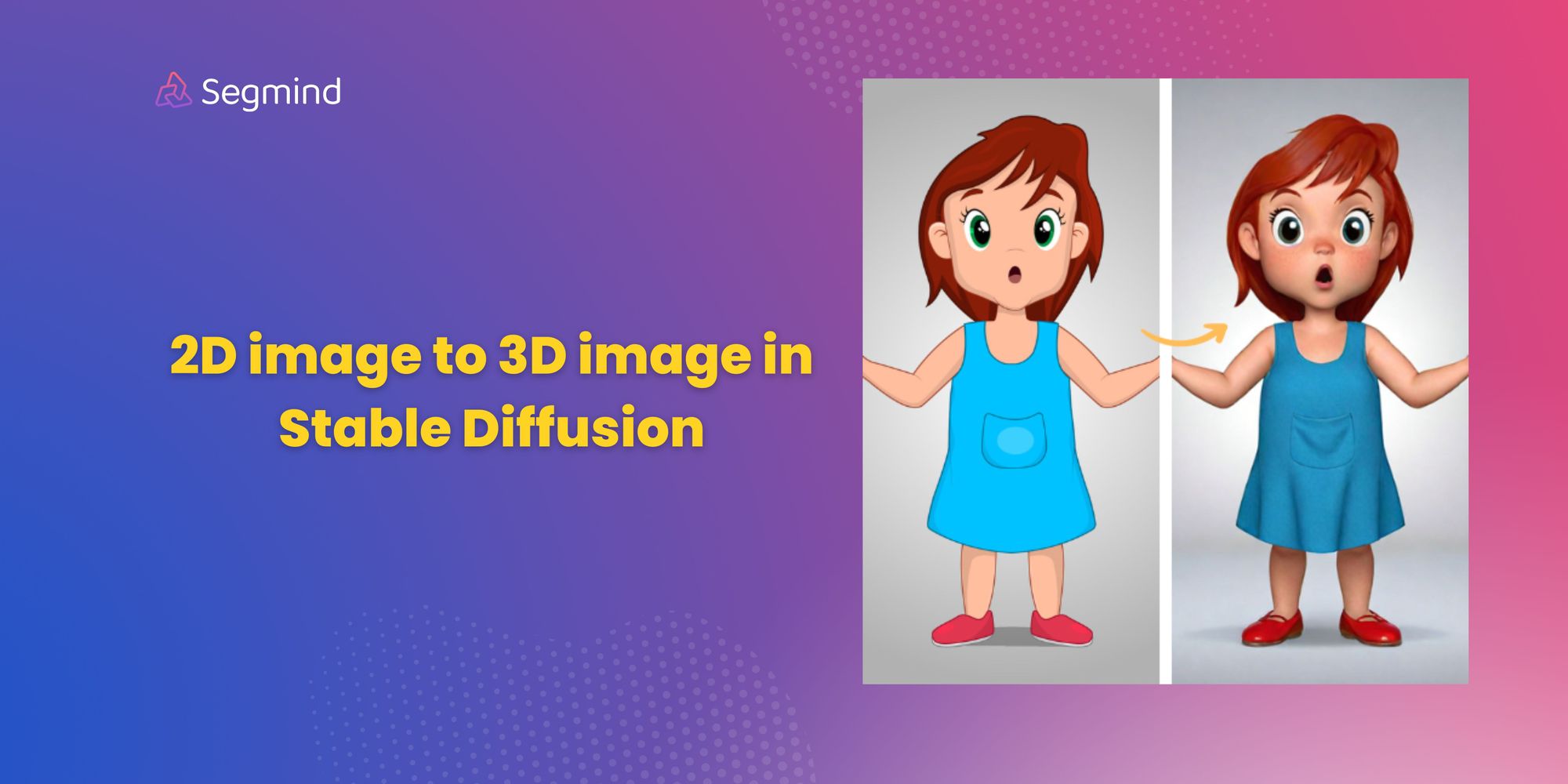

Convert 2D image to 3D model in Stable Diffusion with Fooocus

This blog post guides you through transforming 2D images into 3D models in Stable Diffusion using Fooocus.

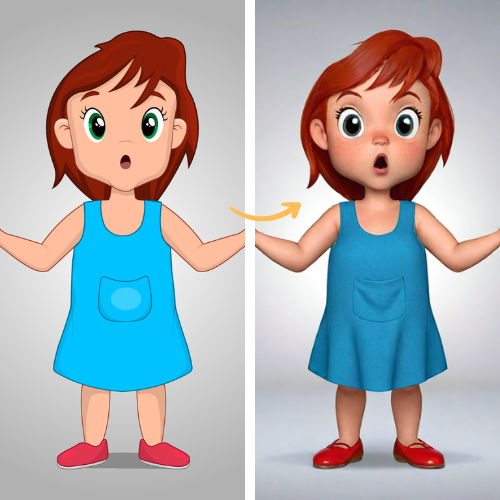

We’re going to tackle a problem that many artists and designers encounter: transforming a 2D image into a 3D model. This is particularly challenging for cartoon or anime characters, which are typically drawn flat and lack the depth information needed for 3D modeling.

Traditionally, this process would require complex tools and a deep understanding of 3D modeling techniques. But what if you could bypass all that complexity and convert your 2D image into a 3D model with just a few clicks?

That’s where Fooocus comes in: it simplifies the process of converting 2D images into 3D models. In this blog, we walk you through the Fooocus workflow for turning your 2D images into 3D models.

Under the Hood of the 2D-to-3D Workflow

Let’s take a closer look at the workflow and the steps involved.

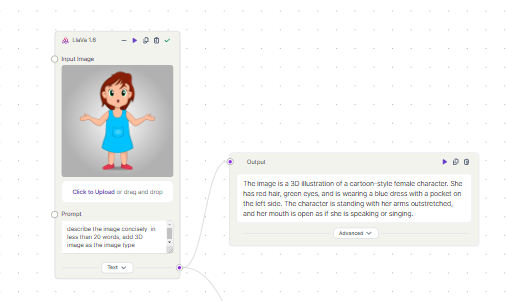

1. Generating Text Prompts with Llava: The first step uses an image-to-text model such as Llava to generate an accurate text prompt describing the image or the character in it. Instead of working out the prompt by hand, you give Llava the image as input, and it returns a precise textual description that is then used as the prompt in Fooocus.

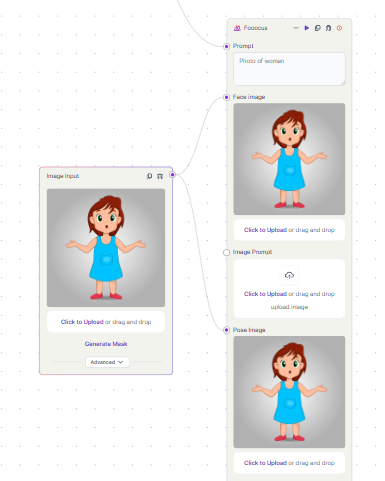

2. Face Image Input: Next, the same image is fed into the face image input in Fooocus. This uses Faceswap, one of the control methods in Fooocus, which works much like IP-Adapter Face. It replicates the face from the reference image, giving the 3D image a detailed facial structure.

3. Pose Image Input: The image is also fed into the pose image input in Fooocus, which uses another control method, Pyracanny, comparable to ControlNet Canny. Pyracanny detects the edges of objects in the image, preserving its composition and providing a framework for the pose of the 3D image.
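Step 1 can be sketched in Python. This is a minimal sketch, not part of Fooocus: it assumes the Hugging Face `transformers` port of LLaVA-1.5 (the `llava-hf/llava-1.5-7b-hf` checkpoint) plus Pillow, and the helper names (`build_llava_prompt`, `extract_answer`, `describe_image`) and the instruction text are hypothetical. The heavy libraries are imported only inside `describe_image`, so the two string helpers work without them.

```python
"""Hypothetical sketch: turn a reference image into a text prompt with LLaVA-1.5."""

ASSISTANT_TAG = "ASSISTANT:"


def build_llava_prompt(instruction: str) -> str:
    # LLaVA-1.5 chat template used by the llava-hf checkpoints;
    # <image> marks where the image features are inserted.
    return f"USER: <image>\n{instruction} {ASSISTANT_TAG}"


def extract_answer(decoded: str) -> str:
    # The decoded output repeats the prompt, so keep only the text after the
    # last ASSISTANT: tag and collapse it onto a single line.
    answer = decoded.rsplit(ASSISTANT_TAG, 1)[-1]
    return " ".join(answer.split())


def describe_image(
    path: str,
    model_id: str = "llava-hf/llava-1.5-7b-hf",  # assumed checkpoint
    instruction: str = (
        "Describe this character for an image generation prompt: "
        "appearance, clothing, pose."  # hypothetical instruction text
    ),
) -> str:
    # Deferred imports: only needed when the model actually runs.
    from PIL import Image
    from transformers import AutoProcessor, LlavaForConditionalGeneration

    processor = AutoProcessor.from_pretrained(model_id)
    model = LlavaForConditionalGeneration.from_pretrained(model_id)
    image = Image.open(path).convert("RGB")
    inputs = processor(
        images=image, text=build_llava_prompt(instruction), return_tensors="pt"
    )
    output_ids = model.generate(**inputs, max_new_tokens=120)
    decoded = processor.decode(output_ids[0], skip_special_tokens=True)
    return extract_answer(decoded)
```

Calling `describe_image("character.png")` would return a one-line description to paste into the Fooocus prompt box. The 7B checkpoint is a large download and slow on CPU, so a GPU is advisable.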

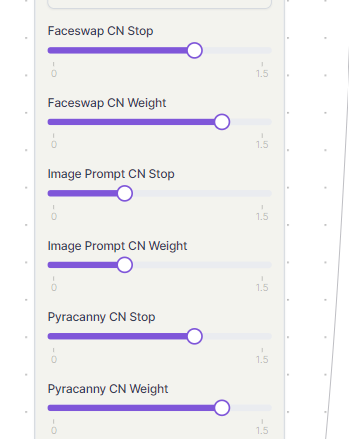
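The name Pyracanny refers to a pyramid, or multi-resolution, variant of Canny edge detection. The sketch below illustrates only that multi-scale idea and is not Fooocus’s implementation: to depend on nothing but NumPy, it swaps Canny for a simpler Sobel gradient threshold, and the scale factors (`1, 2, 4`), the `threshold` of 0.2, and the function names are assumptions.

```python
import numpy as np


def sobel_magnitude(gray: np.ndarray) -> np.ndarray:
    """Gradient magnitude from 3x3 Sobel kernels, with edge padding."""
    p = np.pad(gray.astype(np.float32), 1, mode="edge")
    # Horizontal gradient: right column minus left column, weights 1-2-1.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (
        p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical gradient: bottom row minus top row, weights 1-2-1.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (
        p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def multiscale_edges(gray: np.ndarray, factors=(1, 2, 4), threshold=0.2) -> np.ndarray:
    """Average binary edge maps computed at several downscaled resolutions."""
    h, w = gray.shape
    maps = []
    for f in factors:
        hs, ws = h // f, w // f
        if hs < 3 or ws < 3:
            continue  # too small to hold a 3x3 neighbourhood
        # Downscale by averaging f x f blocks (any remainder rows/cols are cropped).
        small = gray[:hs * f, :ws * f].astype(np.float32)
        small = small.reshape(hs, f, ws, f).mean(axis=(1, 3))
        mag = sobel_magnitude(small)
        peak = mag.max()
        edges = mag > threshold * peak if peak > 0 else np.zeros_like(mag, dtype=bool)
        # Upsample with nearest-neighbour repetition, then pad the cropped border.
        up = np.repeat(np.repeat(edges, f, axis=0), f, axis=1)
        up = np.pad(up, ((0, h - hs * f), (0, w - ws * f)), mode="edge")
        maps.append(up.astype(np.float32))
    if not maps:
        return np.zeros((h, w), dtype=np.uint8)
    # Edges found at more scales come out brighter in the 0-255 result.
    return np.round(np.mean(maps, axis=0) * 255).astype(np.uint8)
```

In this sketch, fine detail detected only at full resolution comes out dim, while large contours detected at every scale come out bright, which is one way a multi-scale map can emphasize overall composition over texture noise.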

Influence of Control Methods: The generated image is guided by control methods like Faceswap and Pyracanny, which can be found in the advanced settings of Fooocus. How strongly each one steers the result is determined by two settings:

- Stop At: This setting determines how long the control method stays active, expressed as a fraction of the sampling steps. For example, with 30 sampling steps, a value of 0.5 stops the control after 15 steps, which is 50% of the sampling steps.

- Weight: This setting determines how strongly the control method influences the generated image. A higher weight means a greater influence.
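The Stop At arithmetic can be written out as a small Python sketch. It treats Stop At as a plain fraction of the step count and Weight as a constant multiplier on the control while it is active. Both are simplifying assumptions: Fooocus may convert the fraction through the sampler’s noise schedule, so the exact cutoff can differ by a step. The function names are hypothetical.

```python
def stop_step(stop_at: float, total_steps: int) -> int:
    """Number of sampling steps before the control is switched off."""
    if not 0.0 <= stop_at <= 1.0:
        raise ValueError("stop_at must be between 0 and 1")
    # round() absorbs small floating-point error in the product.
    return round(stop_at * total_steps)


def control_schedule(stop_at: float, weight: float, total_steps: int) -> list[float]:
    """Per-step control strength: `weight` while active, 0.0 afterwards."""
    cutoff = stop_step(stop_at, total_steps)
    return [weight if step < cutoff else 0.0 for step in range(total_steps)]


# The example from the text: 30 steps with Stop At 0.5.
print(stop_step(0.5, 30))  # 15
```

With a Stop At of 1, `control_schedule(1.0, 1.2, 30)` keeps the control at weight 1.2 for all 30 steps.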

Model/Checkpoint: Use ProtoVision Lightning SDXL. This checkpoint specializes in high-fidelity 3D, photorealistic, anime, and hyperrealistic images, which helps produce a detailed and realistic 3D model from the 2D image.

Recommended Settings for Control Methods

Setting these controls high helps ensure that the face and overall composition of the original image are accurately carried into the 3D model, resulting in a more realistic and detailed 3D representation.

Faceswap Settings:

- Faceswap CN Stop at: Set this to 1, so that Faceswap stays active for 100% of the sampling steps.

- Faceswap CN Weight: Set this to 1.2. This gives Faceswap a strong influence on the generated image, helping it accurately replicate the face from the reference image.

Pyracanny Settings:

- Pyracanny CN Stop at: Set this to 1, so that Pyracanny stays active for 100% of the sampling steps.

- Pyracanny CN Weight: Set this to 1.2. This gives Pyracanny a strong influence on the generated image, so the output closely follows the object boundaries detected in the original image.
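The recommended configuration can be gathered into one structure. This is a hypothetical Python sketch for keeping the settings together, not a Fooocus or Pixelflow API. The `FaceSwap` and `PyraCanny` labels are assumed to match the Fooocus UI, and the 0 to 2 weight range is an assumed copy of the Fooocus slider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlSetting:
    """One image-prompt slot: control type plus its two settings."""
    kind: str       # control type label, e.g. "FaceSwap" or "PyraCanny"
    stop_at: float  # fraction of sampling steps the control stays active (0 to 1)
    weight: float   # control strength; the 0 to 2 range is an assumption

    def __post_init__(self) -> None:
        # Reject values outside the assumed slider ranges.
        if not 0.0 <= self.stop_at <= 1.0:
            raise ValueError(f"stop_at out of range: {self.stop_at}")
        if not 0.0 <= self.weight <= 2.0:
            raise ValueError(f"weight out of range: {self.weight}")


# Recommended settings from this post: both controls at Stop At 1, Weight 1.2.
RECOMMENDED_CONTROLS = (
    ControlSetting("FaceSwap", stop_at=1.0, weight=1.2),
    ControlSetting("PyraCanny", stop_at=1.0, weight=1.2),
)


@dataclass
class TwoDToThreeDWorkflow:
    """Hypothetical container for the whole 2D-to-3D setup."""
    prompt: str           # text prompt, e.g. produced by Llava in step 1
    reference_image: str  # path to the original 2D image
    checkpoint: str = "ProtoVision Lightning SDXL"
    controls: tuple = RECOMMENDED_CONTROLS

    def image_prompts(self) -> list:
        # The same reference image feeds every control slot.
        return [(self.reference_image, c.kind, c.stop_at, c.weight)
                for c in self.controls]
```

Validating in `__post_init__` means a typo such as a Stop At of 10 fails when the configuration is built, before any image is generated.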

You can access this workflow for free on Segmind’s Pixelflow, a no-code, cloud-based, node-based tool for building generative AI workflows.

Conclusion

Fooocus offers a streamlined way to transform 2D images into detailed 3D models, which is particularly useful for artists and enthusiasts. By combining the Faceswap and Pyracanny control methods, it accurately replicates both the facial features and the overall composition of the original image. With the recommended Faceswap and Pyracanny settings, users can achieve realistic, detailed 3D representations with little effort.