How to Unlock Image Depth Control with ControlNet

ControlNet Depth offers users the unique ability to generate and edit images that follow the depth information of a reference photograph.

The ControlNet Depth model is designed to use depth information to control the image outputs of diffusion models. Often used alongside Stable Diffusion, ControlNet Depth is a ControlNet variant that conditions text-to-image generation on depth information and estimation. Its unique training, conditioning and approach open up new opportunities in the fields of both image generation and manipulation.

ControlNet Depth: Origins

The origins of the ControlNet Depth model can be traced back to Lvmin Zhang and Maneesh Agrawala's iconic paper 'Adding Conditional Control to Text-to-Image Diffusion Models'. In their groundbreaking research, the authors introduced 'ControlNet', a neural network architecture for controlling Stable Diffusion models.

How does ControlNet work?

ControlNet enhances diffusion models through the introduction of conditional inputs like edge maps, segmentation maps, and keypoints. Inherently flexible, a ControlNet can be used with Stable Diffusion models that share the base model it was trained on.

Its learning process is remarkably robust, establishing it as a practical and versatile solution for different applications, even with limited training datasets. Additionally, you can train the model on smaller personal devices or scale it up with powerful computation clusters to handle large amounts of data.

Through ControlNet, users can condition models on different spatial contexts such as segmentation maps, scribbles, keypoints, and depth maps.
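One reason the learning process holds up with limited data is described in the ControlNet paper: a trainable copy of each Stable Diffusion block is attached to the frozen original through convolutions whose weights and biases start at zero, so at the beginning of training the control branch adds nothing and the base model behaves exactly as before. The sketch below is a toy, scalar illustration of that idea only. `frozen_block`, `ZeroConv`, and `controlled_block` are hypothetical names, and real ControlNet layers are 1x1 convolutions over feature maps rather than per-element multiplications.

```python
from typing import Callable, List

Vector = List[float]


def frozen_block(x: Vector) -> Vector:
    """Stand-in for a locked Stable Diffusion block (its weights never change)."""
    return [2.0 * v + 1.0 for v in x]


class ZeroConv:
    """Toy 'zero convolution': a scale-and-shift whose parameters start at 0."""

    def __init__(self) -> None:
        self.weight = 0.0
        self.bias = 0.0

    def __call__(self, x: Vector) -> Vector:
        return [self.weight * v + self.bias for v in x]


def controlled_block(
    x: Vector,
    cond: Vector,
    trainable_copy: Callable[[Vector], Vector],
    zero_in: ZeroConv,
    zero_out: ZeroConv,
) -> Vector:
    """Compute frozen(x) + zero_out(copy(x + zero_in(cond)))."""
    injected = [a + b for a, b in zip(x, zero_in(cond))]
    residual = zero_out(trainable_copy(injected))
    return [a + b for a, b in zip(frozen_block(x), residual)]


x = [0.5, -1.0]      # toy feature values
cond = [1.0, 0.0]    # toy conditioning signal (e.g. from a depth map)
zero_in, zero_out = ZeroConv(), ZeroConv()

# At initialization the control branch contributes nothing.
before = controlled_block(x, cond, frozen_block, zero_in, zero_out)

# Pretend a few training steps moved the zero-conv weights away from 0.
zero_in.weight, zero_out.weight = 0.5, 0.1
after = controlled_block(x, cond, frozen_block, zero_in, zero_out)

print(before == frozen_block(x), after != before)  # prints: True True
```

Here the trainable copy is simply the same function as the frozen block, mirroring the fact that the copy starts from the base model's weights; in a real network it would then be updated by training while the original stays locked.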

How does ControlNet Depth work?

ControlNet Depth builds directly on the original ControlNet model. It operates by pairing a Stable Diffusion model with a ControlNet trained on depth maps.

The Stable Diffusion model functions as the foundation and is responsible for the efficient generation of high-quality images. The ControlNet layer handles the conditional inputs and augments the Stable Diffusion model to integrate depth information.

Before generation, a depth estimator converts the input image into a depth map, which is then fed into the ControlNet Depth model along with a text prompt. For ControlNet Depth, the checkpoint in use is therefore the ControlNet conditioned on depth estimation. The model processes this data and incorporates the provided depth details and specified features to generate a new image.
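A depth map in this sense is a grayscale image in which brightness encodes distance. The following is a minimal sketch of turning raw depth predictions into such a map, assuming the estimator outputs relative values where larger means nearer (as with inverse-depth predictors like MiDaS), so near surfaces come out bright. `depth_to_control_map` is a hypothetical helper written for illustration, and it uses plain Python lists to stay dependency-free; a real pipeline would do the same scaling on image arrays.

```python
from typing import List


def depth_to_control_map(depth: List[List[float]]) -> List[List[int]]:
    """Min-max scale raw depth predictions to 8-bit grayscale values (0-255)."""
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A completely flat scene carries no depth contrast; return all black.
        return [[0 for _ in row] for row in depth]
    return [[round(255 * (v - lo) / span) for v in row] for row in depth]


# Toy 2x2 "prediction": the bottom-right pixel is nearest to the camera.
raw = [[0.5, 1.0],
       [2.0, 4.5]]
print(depth_to_control_map(raw))  # prints: [[0, 32], [96, 255]]
```

Because the scaling is relative to the minimum and maximum of each image, the resulting map describes the ordering of surfaces in the scene rather than absolute distances.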

ControlNet Depth Model Training

The ControlNet Depth model is trained on 3M pairs of depth images and captions. The depth images were generated with MiDaS. The model was trained for a total of 500 GPU hours on Nvidia A100 80GB hardware, with Stable Diffusion 1.5 as the base model.
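At inference time the same pieces come together: a depth estimator, the Stable Diffusion 1.5 base model, and the depth-conditioned ControlNet. The following is a hedged sketch using the Hugging Face `diffusers` and `transformers` libraries, not Segmind's own implementation. The function name `generate_with_depth` is hypothetical, and the default model IDs are assumptions about where the weights are hosted; substitute whichever Stable Diffusion 1.5 and depth ControlNet checkpoints you have access to. It assumes a CUDA GPU and will download several gigabytes of weights on first use.

```python
def generate_with_depth(
    image_path: str,
    prompt: str,
    controlnet_id: str = "lllyasviel/sd-controlnet-depth",          # assumed repo ID
    base_id: str = "stable-diffusion-v1-5/stable-diffusion-v1-5",   # assumed repo ID
    steps: int = 20,
):
    """Generate an image that follows the depth structure of `image_path`."""
    # Heavy imports live inside the function so this file can be imported
    # on machines without the GPU stack installed.
    import torch
    from PIL import Image
    from transformers import pipeline
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    # 1. Estimate a depth map from the source image (uses the library's
    #    default depth-estimation model).
    source = Image.open(image_path).convert("RGB")
    depth_map = pipeline("depth-estimation")(source)["depth"].convert("RGB")

    # 2. Load the depth-conditioned ControlNet and attach it to the base model.
    controlnet = ControlNetModel.from_pretrained(
        controlnet_id, torch_dtype=torch.float16
    )
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_id, controlnet=controlnet, torch_dtype=torch.float16
    ).to("cuda")

    # 3. Generate: the text prompt sets the content, the depth map sets the layout.
    result = pipe(prompt, image=depth_map, num_inference_steps=steps)
    return result.images[0]
```

A call such as `generate_with_depth("room.png", "a cozy cabin interior").save("out.png")` would then write the result to disk, where `room.png` stands for any local photo.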

Advantages of ControlNet Depth

ControlNet Depth generates images with a stunning sense of depth and realism that blow traditional image-generation techniques out of the water. Its advantages are distinct and evidenced by its diverse applications.

- Creative Control: With ControlNet Depth, users are able to specify desired features in image outputs with unparalleled precision, unlocking greater flexibility for creative processes. The extra dimension of depth that can be added to ControlNet Depth generated images is a truly remarkable feat in Generative AI.

- Richer Visuals: In order to generate an image, the ControlNet Depth model processes data that aligns with the specified features and text prompt, then incorporates the given depth details into the final output for more detailed and compelling visuals.

- Realistic Image Generation: The addition of depth information generates more natural and vivid images. Extra conditional inputs relating to depth information and estimation result in more immersive image outputs, ideal for interactive technology.

Applications of ControlNet Depth

By leveraging the power of conditional control, the model holds great potential for creatives, researchers, and developers. Through the integration of depth information, ControlNet Depth takes image manipulation to the next level.

- Create images with specific features: Define and control specific features like poses, stylizations, objects, and of course, depth information.

- Improve image quality: Remove noise, add finer details, restore damaged images, incorporate depth information, create more realistic visuals... ControlNet Depth supports a wide range of image enhancement techniques.

- Greater output control: Control the output of Stable Diffusion models to include specific features, as well as depth information, with ControlNet Depth. This is especially useful for creating images that follow a particular style or are consistent with a given dataset.

- Data Augmentation: ControlNet Depth can be used by researchers and data scientists for data augmentation, or to generate datasets with varying depth information for machine learning tasks.

- Computer Vision, VR and Robotics: Depth information and control are critical for perception and object recognition. As a model focused on depth information and estimation, ControlNet Depth brings game-changing capabilities to the fields of VR, Robotics and Computer Vision.

Get started with ControlNet Depth

Running the ControlNet Depth model locally with the necessary dependencies can be computationally expensive and time-consuming. That’s why we have made ControlNet Depth and 30+ other AI models free to use.

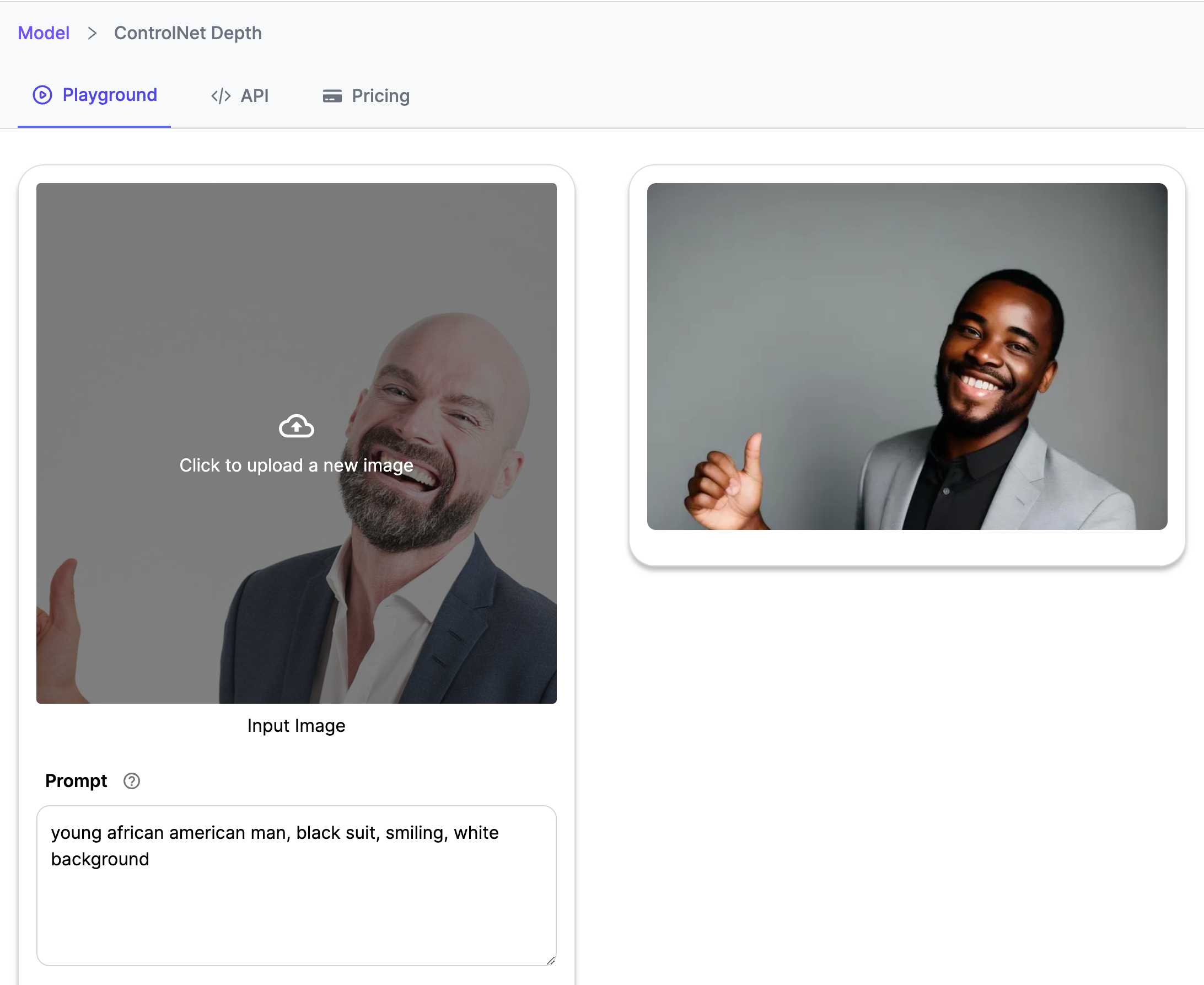

To get started for free, follow the steps below:

- Create your free account on Segmind.com

- Once you’ve signed in, click on the ‘Models’ tab and select ‘ControlNet Depth’

- Upload the image with the depth structure you want to replicate

- Enter a text prompt and specify any instructions for the content style and depth information

- Click ‘Generate’

- Witness the magic of ControlNet Depth in action!

Whether you're an artist or a seasoned AI professional, the ControlNet Depth model gives you the opportunity to bring your creative vision to life with exceptional realism and depth.

ControlNet Depth Model License

The ControlNet Depth model is licensed under the CreativeML OpenRAIL-M license, a form of Responsible AI License (RAIL), which promotes the open, responsible, and ethical use of the model. Under this license, users retain the rights to their generated images and are free to use them commercially, provided they adhere to the terms and conditions outlined in the license. The license prohibits certain use cases, including harmful or malicious activities, exploitation, and discrimination. For your modifications, you may add your own copyright statement and provide additional or different license terms.

Remember that you are accountable for the output you generate using the model, and no use of the output may contravene any provision of the CreativeML OpenRAIL-M license.