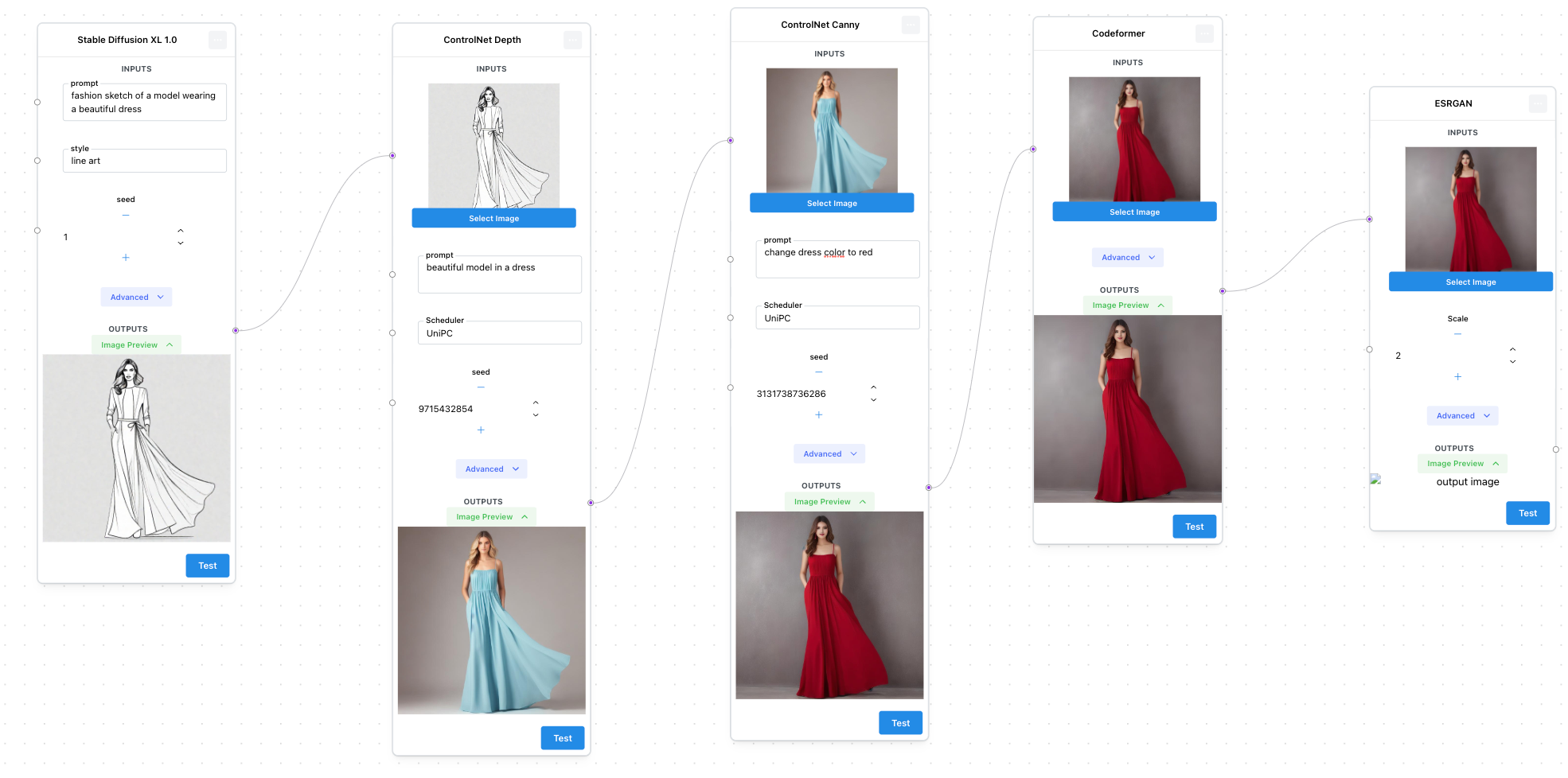

The Sketch to Image Workflow: Using Generative AI to go from sketch to visualizations in seconds

This article delves into the Sketch to Image workflow using multiple Segmind models for different use cases as well as real world applications.

Turning a sketch into a finished visualization used to demand a significant investment of time and resources. Whether you're envisioning a fashionable dress or mapping out the architectural design of a contemporary villa, Stable Diffusion models can now turn a sketch into a realistic visualization in seconds and produce a diverse set of variations from it, which is useful for sparking creativity, illustrating concepts, and enhancing your catalog prints.

This article is a guide to building a workflow for ideating a sketch and turning it into a realistic visualization that can be used in product catalogs, posters, and magazines.

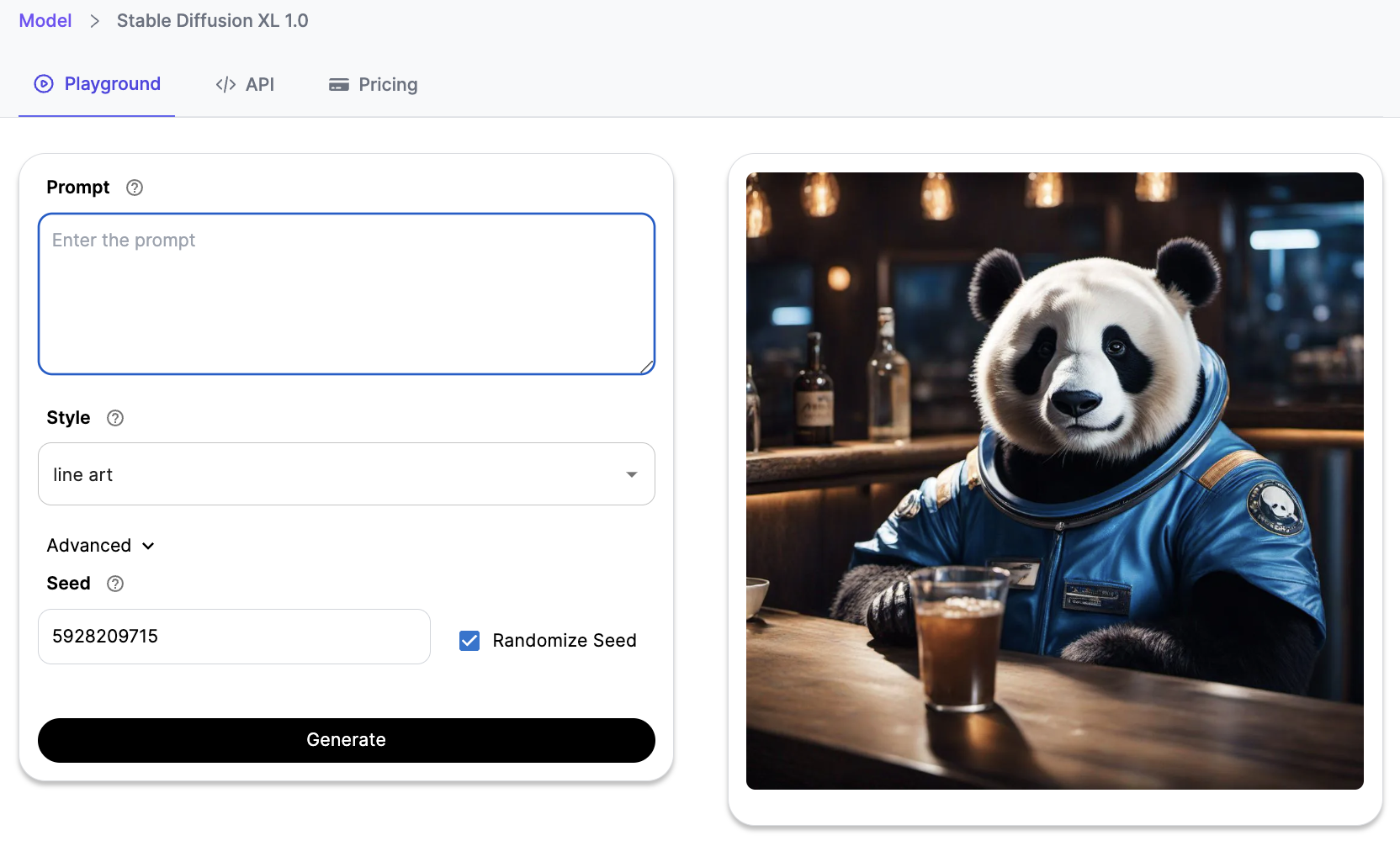

Creating a Mockup Sketch from SDXL 1.0

First, we need a sketch. We can either take an existing sketch in PNG/JPEG format and send it as the input image to Segmind's ControlNet Depth model, or generate the sketch with SDXL 1.0's "line art" style. To generate one, change the style from "base" to "line art" and describe the dress style in the prompt.
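If you prefer to script this step rather than use the web interface, the request might look roughly like the sketch below. It only builds the HTTP request and does not send it. The endpoint URL, the `x-api-key` header, and the `prompt` and `style` field names and values are assumptions made for illustration, so check Segmind's API documentation before relying on any of them.

```python
import json
import urllib.request

# Assumed endpoint; confirm the real URL in Segmind's API documentation.
SDXL_URL = "https://api.segmind.com/v1/sdxl1.0-txt2img"


def build_sketch_request(description: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a text-to-image request for a line-art sketch."""
    payload = {
        "prompt": description,
        # Assumed field name and value: the article switches the style
        # from "base" to "line art".
        "style": "line art",
    }
    return urllib.request.Request(
        SDXL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"x-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_sketch_request("sketch of a floor-length evening dress", "YOUR_API_KEY")
print(json.loads(request.data))
```

Passing the request to `urllib.request.urlopen` would send it. Keeping the build step separate makes it easy to inspect the payload first.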

Converting Sketch to real-world visualization

The sketch serves as input for the ControlNet Depth model. Alongside this input image, a prompt tells the model what to generate. Use the prompt to spell out what you expect in the resulting image, such as the dress color and the desired ethnicity of the model, so that the outcome is tailored to your aesthetic preferences and creative vision.
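As a rough illustration of pairing the sketch with a prompt, the snippet below packs both into one JSON-ready payload, with the image base64-encoded so it can travel as text. The field names `image` and `prompt` are hypothetical, and the placeholder bytes stand in for a real sketch file.

```python
import base64


def build_depth_payload(sketch_bytes: bytes, prompt: str) -> dict:
    """Pair a sketch (raw PNG/JPEG bytes) with a prompt in one payload."""
    return {
        # Assumed field names; the real API may name these differently.
        "image": base64.b64encode(sketch_bytes).decode("ascii"),
        "prompt": prompt,
    }


# Placeholder bytes; in practice read them with open("sketch.png", "rb").read().
sketch_bytes = b"\x89PNG placeholder"
payload = build_depth_payload(
    sketch_bytes,
    "a model wearing a red silk evening dress, studio lighting",
)
print(sorted(payload))
```

The more specific the prompt is about color, fabric, and setting, the less the model has to guess.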

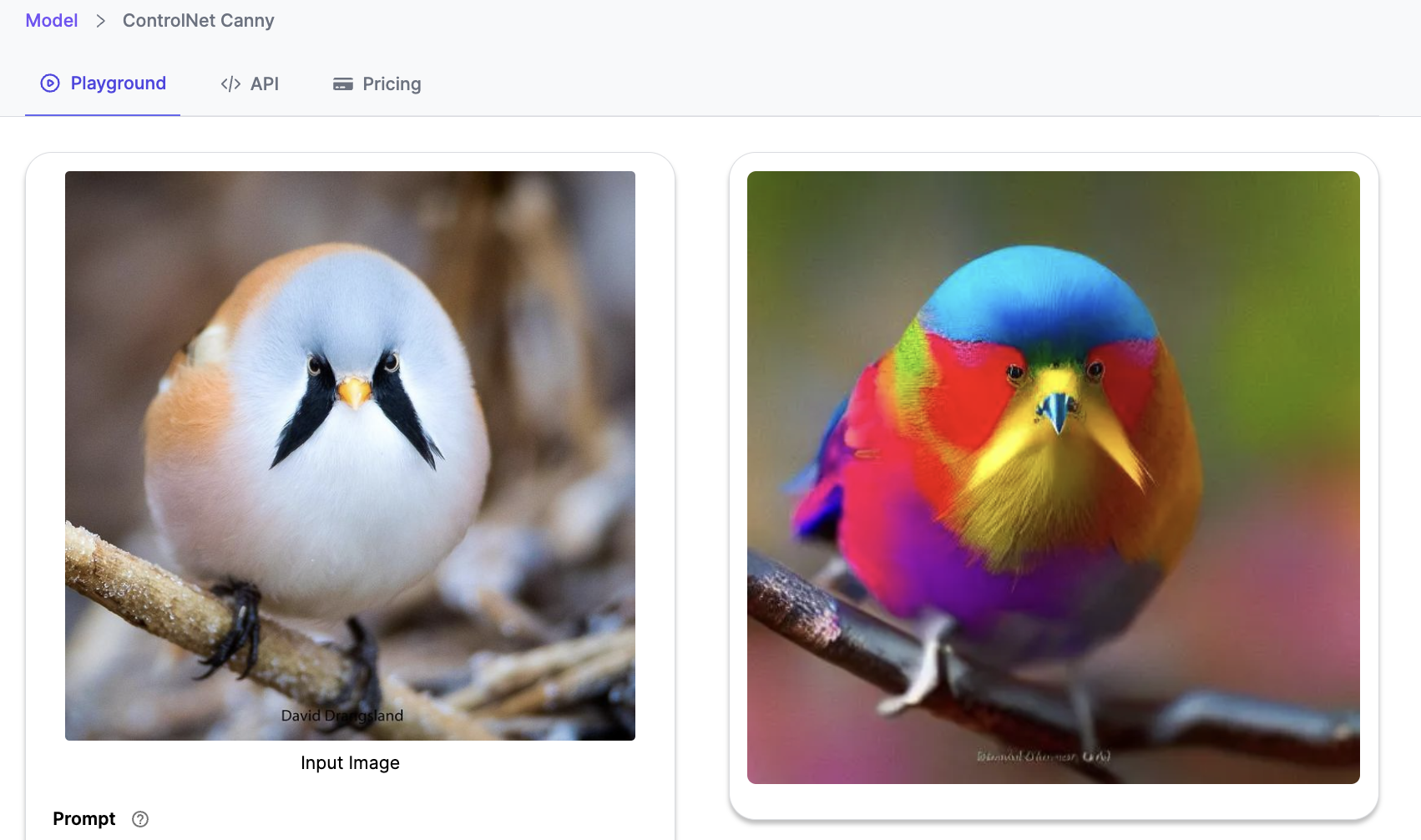

Generating variations of the visualization

The next step uses the Segmind ControlNet Canny model to generate a diverse set of image variations. Begin by uploading the image generated by the ControlNet Depth model in the previous step of the workflow.

Next, we need to formulate a precise prompt that conveys the specific alterations or enhancements we wish to see in the generated image. This prompt should be tailored to spell out the modifications we desire in terms of color, texture, shapes, or any other visual aspects that need attention.

In essence, the ControlNet Canny model lets us refine the existing image and steer it toward our vision at the same time, so the generated variations can keep pace with our evolving requirements.
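One way to organize this step is to generate one request payload per desired change, optionally repeated across a few seeds. The sketch below only builds the payloads. The `prompt` and `seed` field names are assumptions, and the input image from the previous step would still need to be attached to each payload.

```python
from itertools import product


def build_variation_payloads(base_prompt: str, changes: list, seeds: list) -> list:
    """Return one payload per (change, seed) combination."""
    return [
        # Assumed field names; adjust them to match the actual API.
        {"prompt": f"{base_prompt}, {change}", "seed": seed}
        for change, seed in product(changes, seeds)
    ]


payloads = build_variation_payloads(
    "a model wearing an evening dress",
    ["emerald green satin", "navy blue velvet"],
    [1, 2, 3],
)
for payload in payloads:
    print(payload)
```

Two changes and three seeds give six variations to compare side by side.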

Editing and upscaling the image

Inconsistencies and defects in any of the generated images can be cleaned up and edited using the cleanup tool from Clipdrop. It handles minor blemishes and unwanted artifacts as well as more substantial defects.

The image can then be upscaled using the ESRGAN (Enhanced Super-Resolution Generative Adversarial Network) model. ESRGAN is designed to increase the resolution of images while preserving important details. This is particularly valuable for generated images that may have originally been produced at a lower resolution.

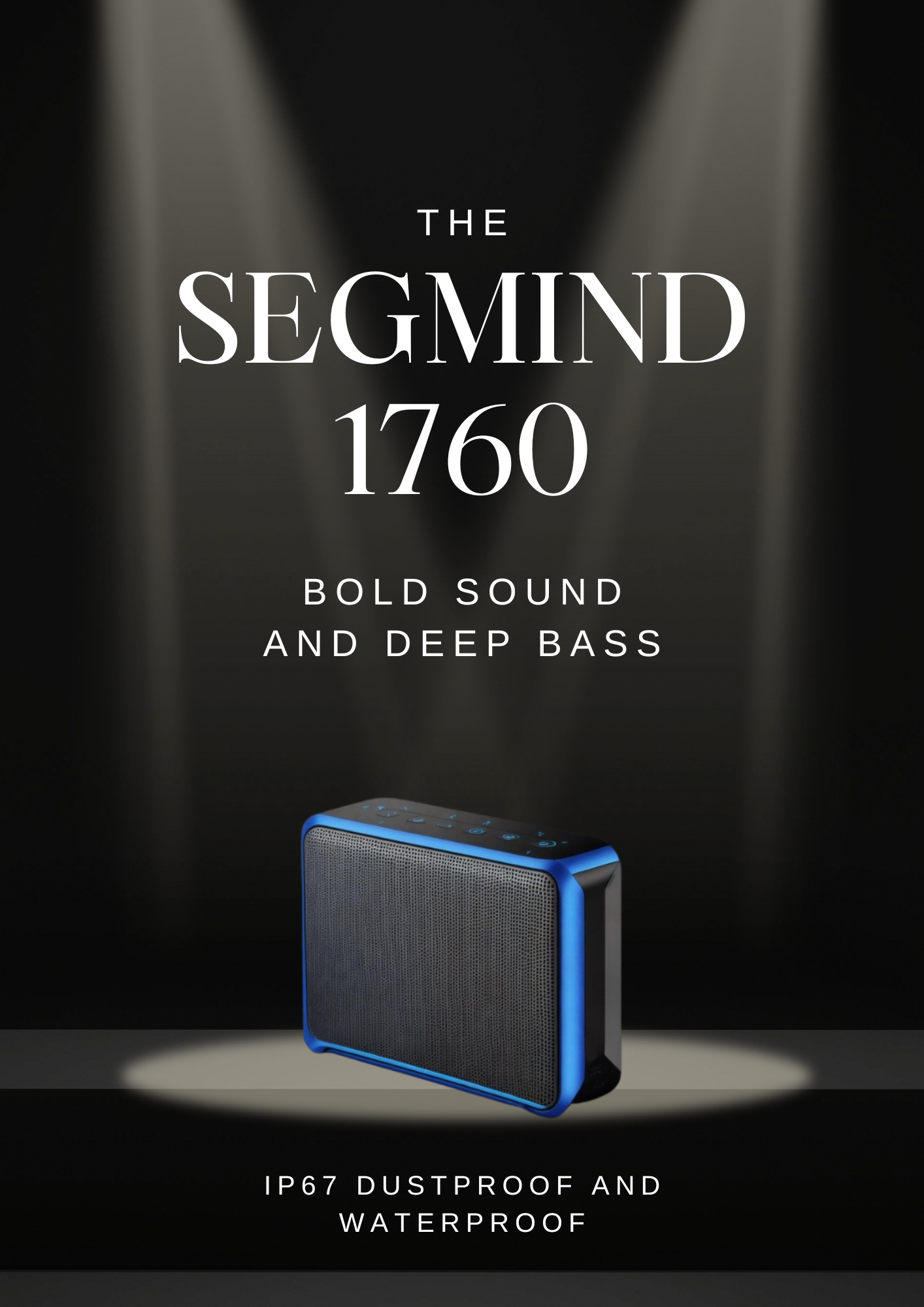

Applications of the sketch to image workflow

1. Product catalog design- Images produced through this workflow can serve as the foundation for creating captivating product catalogs, eye-catching banners, or striking posters. Drawing inspiration from Canva's poster designs, we can seamlessly integrate creative elements into the final product. The background-removed generated images can be skillfully employed to craft compelling and visually appealing product catalogs or posters.

2. Magazine cover design- The generations can be used in the design of magazine covers and illustrations. The background of the image can be removed using the Background removal model, and the output image, which is just the model in the dress, can be used on a magazine cover.

3. Design Ideation- A particular sketch can be instantly visualized in multiple variations using the workflow. A change in prompt/seed will generate different variants of the image.

In Conclusion

The era of painstakingly transforming sketches into visualizations is now a thing of the past, thanks to the capabilities of Stable Diffusion. This article has provided a guide to building a streamlined workflow for transforming sketches into realistic visualizations that can elevate your creative projects and product presentations.

From product catalog design to magazine covers and design ideation, this workflow opens up a wide range of possibilities for your creative projects. Whether you're looking to spark creativity, illustrate concepts, or enhance your catalog prints, it makes sketch transformation easier and more accessible than before. With Stable Diffusion, you can watch your sketches come to life as visualizations that captivate and inspire.

Coming Soon...

With Segmind's upcoming product, you can set up the entire workflow in one webpage and run the generations with the click of a button. Subscribe and sign up for the latest updates from Segmind.