Generating Text Descriptions and Captions From Images with LLaVa

Multimodal generative models like LLaVa generate rich, detailed descriptions and captions for images. Learn how LLaVa combines text and image to generate a sequence of words.

While image classification assigns labels to images (think "cat," "car," "mountain"), image captioning and text generation from images utilizes multimodal learning, where it combines different types of information, like images and text. This allows it to generate rich descriptions or captions that capture the essence of an image.

This blog post will explore the exciting world of multimodal generative models, specifically how models like LLaVa can be used to generate detailed text descriptions and captions from images.

What is Large Language and Vision Assistant (or) LLaVa?

The LLava model, also known as the Large Language and Vision Assistant is a multimodal vision model that uses advanced transformers to make sense of images and create descriptions for them. Here’s a how it works in simple terms: first, a vision encoder analyzes the input image to identify key visual elements. Next, a transformer-based language model takes the text prompt for the input image and deciphers it for context. Then, it combines this image and text understanding, translating this combined knowledge into a sequence of words, forming the text or caption.

How to use LLaVa for generating text descriptions and captions from images?.

The LLaVa model excels in generating detailed descriptions for images and answering image-related questions. It outshines other models like img2prompt and CLIP Interrogator in creating detailed prompts from images. Let’s explore three practical applications or processes where we utilize LLaVa for generating text from images.

a. Image captioning: LLaVa analyzes an image and generate a concise, natural language description that captures the content and context within it. Generate accurate alt text automatically, saving you time and improving accessibility.

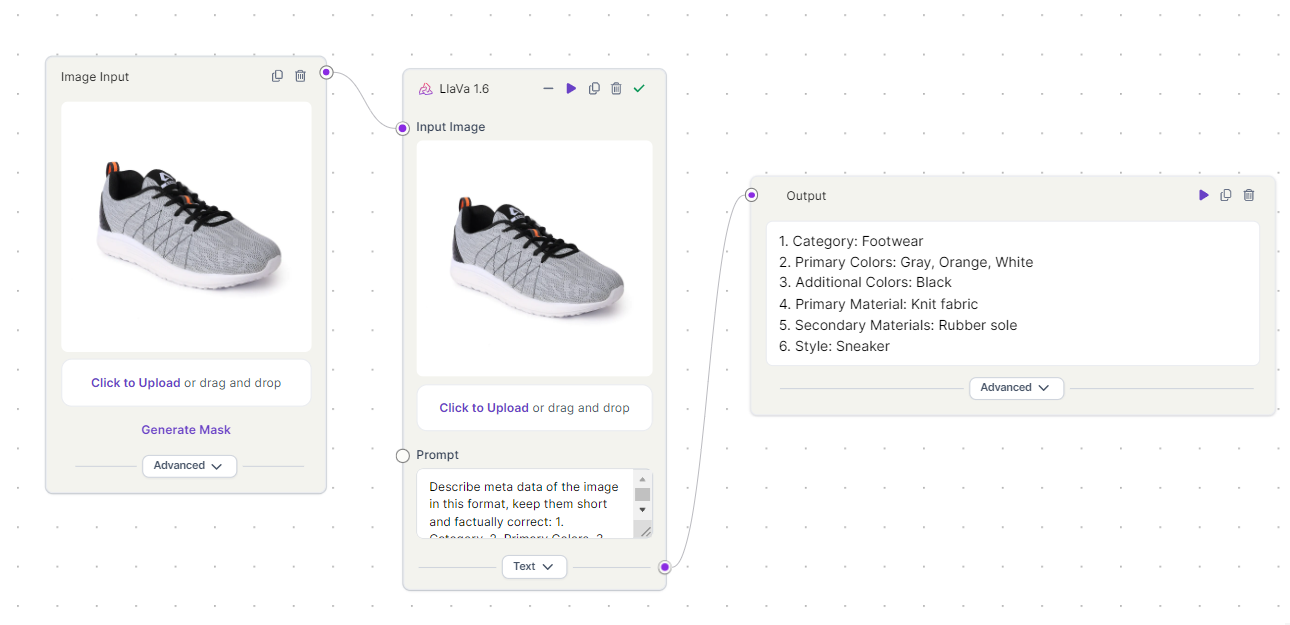

Here is a workflow which generates the metadata of the product with all relevant tags. A use case in e-commerce industry which needs. The model takes the input image of a running shoes and generate metadata based on the text prompt.

Prompt: "Describe meta data of the image in this format, keep them short and factually correct: 1. Category, 2. Primary Colors, 3. Additional Colors, 4. Primary Material, 5. Secondary Materials, 6.Style"

Text Output Generated: 1. Category: Footwear 2. Primary Colors: Gray, Orange, White 3. Additional Colors: Black 4. Primary Material: Knit fabric 5. Secondary Materials: Rubber sole 6. Style: Sneaker

b. Visual question answering: LLaVa bridges the gap between image and language, allowing you to ask questions directly about your images and receive natural language answers. Helps in understand the relationships and interactions within an image.

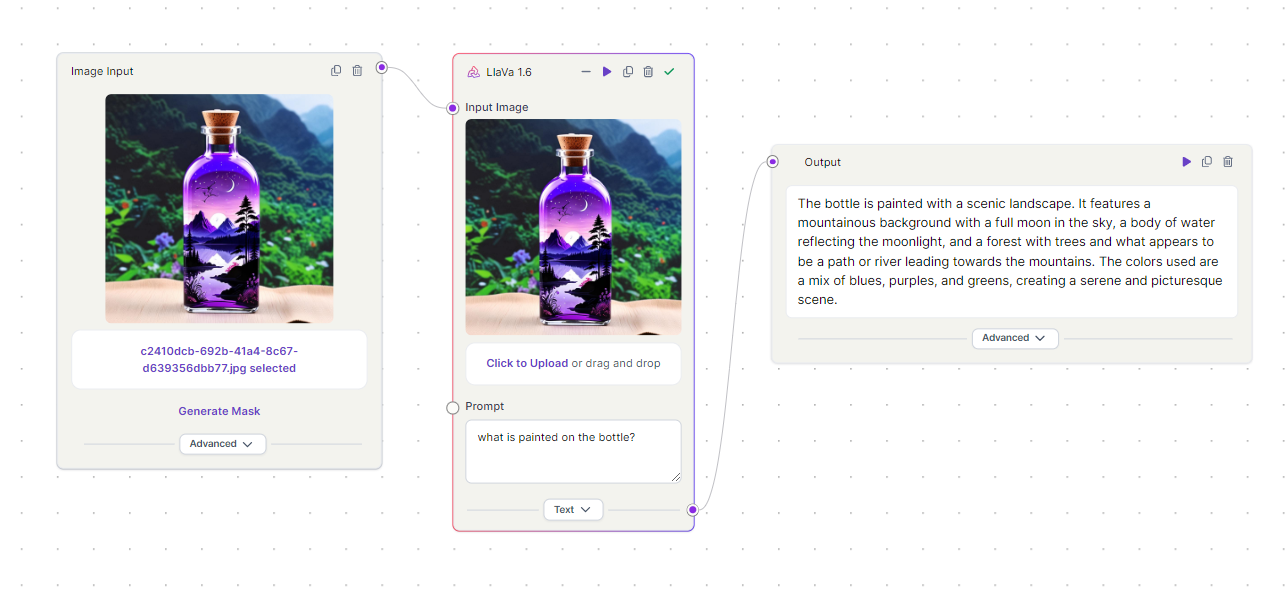

In the workflow below, we ask LLaVa to describe the painting on the glass bottle image. The model accurately describes the painting in fine detail.

"The bottle is painted with a scenic landscape. It features a mountainous background with a full moon in the sky, a body of water reflecting the moonlight, and a forest with trees and what appears to be a path or river leading towards the mountains. The colors used are a mix of blues, purples, and greens, creating a serene and picturesque scene"

c. Text prompt generation: LLaVa analyzes an image and create a written prompt that captures its style and content. These prompts can be used as inputs for image-to-image or text-to-image models, ensuring the generated image reflects the content and style of your chosen image.

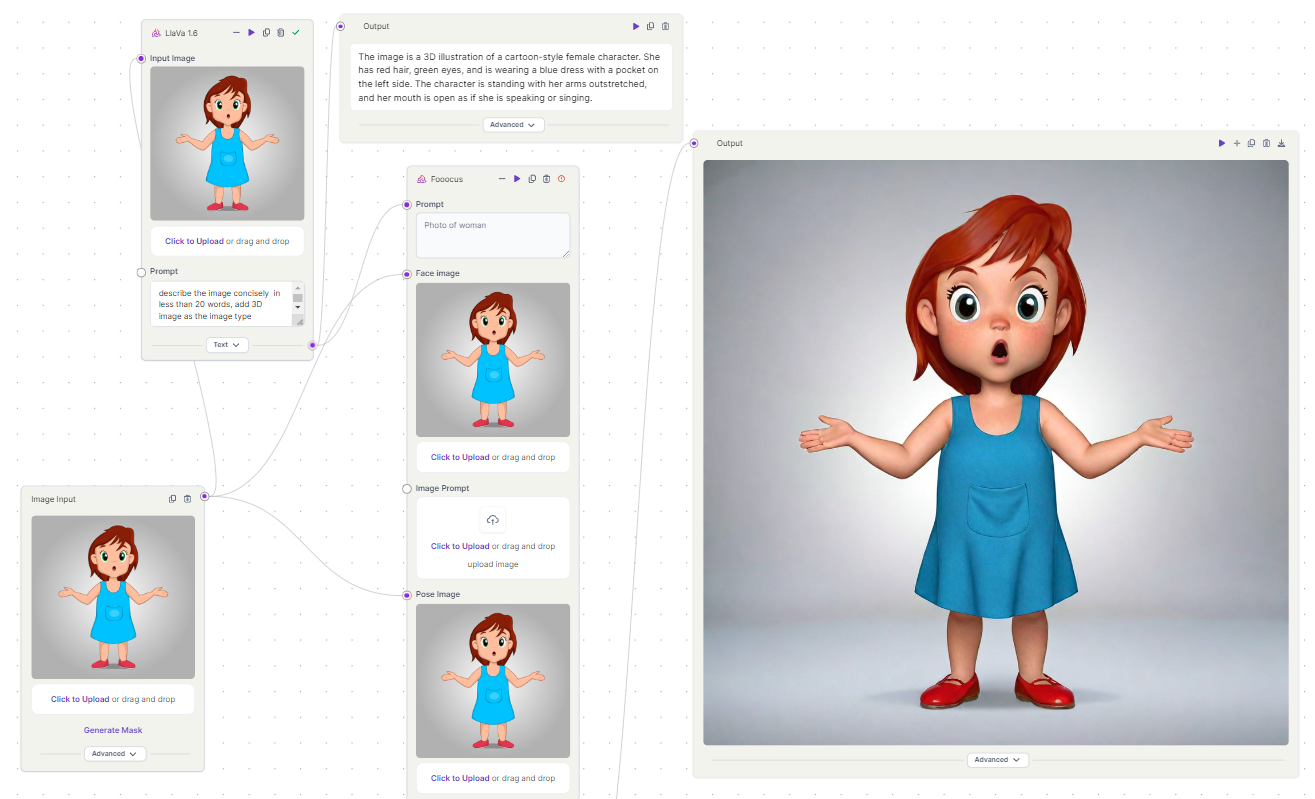

In the below workflow, which takes a 2D input image and converts into a 3D model. This workflow has LLaVa and Fooocus models. LLaVa simplifies the task of generating accurate text prompts that describe the image or the character in the image. Instead of manually figuring out the prompt, LLaVa uses the image as an input to provide a precise textual description or prompt that in then used as text prompt in the Fooocus model.

Text Prompt: "Describe the image concisely in less than 20 words, add 3D image as the image type"

Text Output: "The image is a 3D illustration of a cartoon-style female character. She has red hair, green eyes, and is wearing a blue dress with a pocket on the left side. The character is standing with her arms outstretched, and her mouth is open as if she is speaking or singing"

Access the LLaVa model on Segmind and try the above workflows on Pixelflow to generate accurate text automatically and saving you time.