Live Portrait: Animate Your Portrait Photos

Live Portrait uses AI to animate static images with realistic expressions and movements, offering more control and efficiency than diffusion-based methods. Try Live Portrait today and create captivating animated portraits!

Live Portrait animates static images using an implicit-keypoint-based framework, bringing a portrait to life with realistic expressions and movements. The Live Portrait model identifies key points on the face (such as the eyes, nose, and mouth) and manipulates them to create expressions and movements. Compared with diffusion-based methods, this approach offers two advantages:

- More Control: Live Portrait gives you finer control over the animation. You can adjust how the face moves, for example how it blinks or smiles.

- More Efficient: The system is faster because, unlike a diffusion-based method, it doesn't need to gradually build up the entire animation from scratch.



How Does Live Portrait Work?

Live Portrait brings portraits to life in four key steps:

- Live Portrait first extracts the portrait's key features and identifies the points to animate. It then extracts motion data from the driving video.

- Live Portrait merges the portrait's features with the driving video's motion to create animation-ready key points.

- Specialized modules (Stitching & Retargeting) ensure smooth transitions between facial features and allow fine-tuning of eye and lip movements for realistic expressions.

- Finally, a warping network and decoder combine everything to generate the final animated frame, efficiently and in high quality.
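
The second step, merging the portrait's features with the driving video's motion, can be pictured with a toy 2D example. The sketch below is only an illustration under simplifying assumptions: in the real model the key points and motion are predicted by neural networks, and the function name `drive_keypoints`, the three hand-picked points, and all the numbers are made up for this example. It takes key points from the portrait and applies a head rotation, per-point expression offsets, a scale, and a translation that stand in for the motion of one driving frame.

```python
import math

def drive_keypoints(canonical, angle_deg, expression, scale, translation):
    """Toy 2D illustration: apply pose and expression from a driving frame to portrait key points."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    tx, ty = translation
    driven = []
    for (x, y), (dx, dy) in zip(canonical, expression):
        # Rotate the portrait's key point by the driving head pose.
        rx = x * cos_t - y * sin_t
        ry = x * sin_t + y * cos_t
        # Add the expression offset, then apply scale and translation.
        driven.append((scale * (rx + dx) + tx, scale * (ry + dy) + ty))
    return driven

# Hypothetical key points: left eye, right eye, mouth (arbitrary units).
portrait_kp = [(-1.0, 1.0), (1.0, 1.0), (0.0, -1.0)]

# Hypothetical motion from one driving frame: no head rotation, mouth point moves down.
driven_kp = drive_keypoints(
    portrait_kp,
    angle_deg=0.0,
    expression=[(0.0, 0.0), (0.0, 0.0), (0.0, -0.2)],
    scale=1.0,
    translation=(0.0, 0.0),
)
print(driven_kp)
```

In this toy setup the eye points stay where they are and only the mouth point moves, which is the kind of animation-ready key point set that the later stitching, retargeting, and warping steps would then work from.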

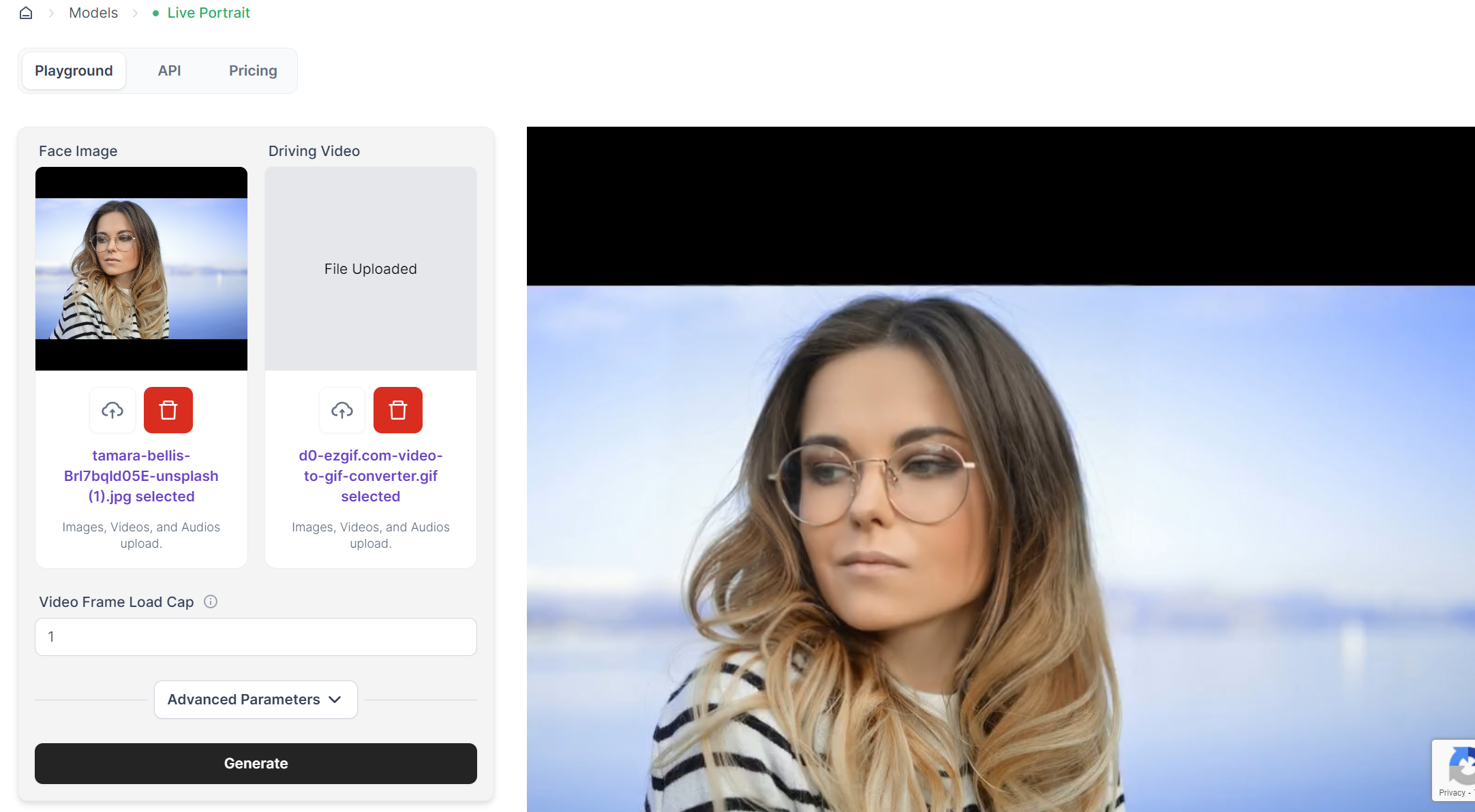

How to Use Live Portrait?

Generating animations from static portrait images is simple. Follow the steps below:

- Upload Portrait Image: Select and upload a static portrait photo with a clearly visible face. This image is required: it serves as the base for the animation and defines the overall appearance of the animated portrait.

- Choose Driving Video: Upload your custom video as a motion source that drives the animation. Live Portrait extracts motion data (facial expressions and head pose) from this video to animate the face image. You can use various video clips as the driving video.

- Generate: Configure the desired parameters or keep the defaults, then click 'Generate'.
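
If you would rather script these three steps than click through the UI, the inputs could be bundled roughly as follows. This is a sketch, not the documented Segmind API: the field names `face_image` and `driving_video`, the base64 encoding, and the helper `build_live_portrait_payload` are assumptions made for illustration, so check the API reference for the actual request schema before sending anything.

```python
import base64

def build_live_portrait_payload(image_bytes, video_bytes, **params):
    """Bundle a portrait, a driving video, and optional parameter overrides into one dict."""
    if not image_bytes:
        raise ValueError("a portrait image is required")
    if not video_bytes:
        raise ValueError("a driving video is required")
    payload = {
        # Assumed field names; binary inputs are base64-encoded so they fit in JSON.
        "face_image": base64.b64encode(image_bytes).decode("ascii"),
        "driving_video": base64.b64encode(video_bytes).decode("ascii"),
    }
    # Only explicit overrides are added; everything else is left to the defaults.
    payload.update(params)
    return payload

# Placeholder bytes stand in for real file contents read with open(path, "rb").
payload = build_live_portrait_payload(
    b"fake-image-bytes",
    b"fake-video-bytes",
    live_portrait_lip_zero=True,
)
print(sorted(payload))
```

Passing only the overrides you care about mirrors the "configure or keep defaults" choice in the last step.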

How to Fine-tune Outputs in Live Portrait?

You can also fine-tune your outputs by adjusting the parameters below. Feel free to tweak them until you get results to your liking.

Output Control

These parameters control the size and scale of the generated animation.

- live_portrait_dsize: This parameter controls the size (resolution) of the output animation. Higher values result in sharper animations but require more processing power. Valid range: 64 to 2048 pixels.

- live_portrait_scale: This parameter determines the scale of the animated face within the output image. A larger scale value makes the face appear bigger. Valid range: 1 to 4.

Video Processing

These parameters affect how Live Portrait processes the driving video for animation.

- video_frame_load_cap: This parameter sets a limit on the number of frames loaded from the driving video. Setting it to 0 uses all frames, while a positive value caps the number of frames loaded, which improves efficiency.

- video_select_every_n_frames: This parameter controls how densely frames are sampled from the driving video. A value of 1 uses all frames, while a higher value n selects only every nth frame, which reduces temporal resolution.
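
To make the interaction between these two parameters concrete, here is a small sketch that applies them to a list of frame indices. The helper `select_frames` is hypothetical, and the order of operations (sample every nth frame first, then apply the cap) is an assumption; the service may apply them differently.

```python
def select_frames(frames, video_frame_load_cap=0, video_select_every_n_frames=1):
    """Pick frames from a driving video: sample every nth frame, then apply the cap."""
    if video_select_every_n_frames < 1:
        raise ValueError("video_select_every_n_frames must be at least 1")
    if video_frame_load_cap < 0:
        raise ValueError("video_frame_load_cap must be 0 or greater")
    # Keep every nth frame (n = 1 keeps all of them).
    selected = frames[::video_select_every_n_frames]
    # A cap of 0 means "no limit"; otherwise keep at most that many frames.
    if video_frame_load_cap > 0:
        selected = selected[:video_frame_load_cap]
    return selected

frames = list(range(10))  # stand-in for 10 decoded video frames

print(select_frames(frames))                                 # all 10 frames
print(select_frames(frames, video_select_every_n_frames=3))  # [0, 3, 6, 9]
print(select_frames(frames, video_frame_load_cap=2,
                    video_select_every_n_frames=3))          # [0, 3]
```

Both settings shorten the animation's frame list, but in different ways: the cap truncates the clip, while the sampling interval thins it out across its whole length.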

Animation Adjustments

These parameters allow you to fine-tune the positioning and overall look of the animation.

- live_portrait_lip_zero: This parameter enables or disables "lip zero," a technique that ensures lips remain closed during silence or neutral expressions.

- live_portrait_relative: This parameter enables the use of relative positioning for facial features, leading to more natural animations.

- live_portrait_vx_ratio: This parameter controls the horizontal position of the animated face. Positive values shift it right, and negative values shift it left. Valid range: -1 to 1.

- live_portrait_vy_ratio: This parameter controls the vertical position of the animated face. Positive values move it up, and negative values move it down. Valid range: -1 to 1.

- live_portrait_stitching: This parameter enables stitching, a technique that seamlessly combines different facial features (eyes, nose, mouth) for a cohesive animation.

Retargeting

These parameters control how facial features in the animation are influenced by the driving video.

- live_portrait_eye_retargeting: This parameter enables or disables eye retargeting, which ensures the animated eyes follow natural gaze directions based on the driving video.

- live_portrait_lip_retargeting: This parameter enables or disables lip retargeting, which synchronizes lip movements with speech or expressions in the driving video.

- live_portrait_lip_retargeting_multiplier: This parameter allows you to fine-tune the intensity of lip retargeting. Higher values create more pronounced lip movements. Valid range: 0.01 to 10.

- live_portrait_eyes_retargeting_multiplier: This parameter allows you to fine-tune the intensity of eye retargeting. Higher values create more pronounced eye movements. Valid range: 0.01 to 10.
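
Since several of these parameters have a stated valid range, it can help to check your settings before generating. The sketch below collects the ranges listed in this article into one table and reports any value that falls outside them. The helper `find_out_of_range` is hypothetical, and treating the bounds as inclusive is an assumption.

```python
# Ranges copied from the parameter descriptions in this article (inclusive bounds assumed).
VALID_RANGES = {
    "live_portrait_dsize": (64, 2048),
    "live_portrait_scale": (1, 4),
    "live_portrait_vx_ratio": (-1, 1),
    "live_portrait_vy_ratio": (-1, 1),
    "live_portrait_lip_retargeting_multiplier": (0.01, 10),
    "live_portrait_eyes_retargeting_multiplier": (0.01, 10),
}

def find_out_of_range(params):
    """Return {name: (value, low, high)} for each parameter outside its listed range."""
    problems = {}
    for name, value in params.items():
        if name in VALID_RANGES:
            low, high = VALID_RANGES[name]
            if not low <= value <= high:
                problems[name] = (value, low, high)
    return problems

# Example settings: the scale is too large, everything else is within range.
settings = {
    "live_portrait_dsize": 512,
    "live_portrait_scale": 5,
    "live_portrait_vx_ratio": -0.25,
    "live_portrait_lip_retargeting_multiplier": 1.5,
    "live_portrait_lip_zero": True,  # boolean toggles have no range and are skipped
}
print(find_out_of_range(settings))
```

Parameters without a listed range, such as the on/off toggles, are passed over rather than rejected.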