Kandinsky 2.1: One of the best text-to-image models, now on Segmind

Kandinsky 2.1 is a state-of-the-art multilingual text-to-image latent diffusion model that harnesses the best practices from its highly regarded predecessors.

A team from Russia has introduced Kandinsky 2.1, a new and improved diffusion model that synthesizes images from text descriptions. Built on the foundations of Kandinsky 2.0, it makes substantial architectural changes that noticeably raise the quality of the generated images.

Kandinsky 2.1 draws on two well-established approaches, DALL-E 2 and Latent Diffusion, and combines their strengths into a single model for generating images from textual descriptions.

The Need For A New Model

Kandinsky 2.0, introduced in November 2022, marked a significant step in image synthesis thanks to its multilingual support, which came from a dual text encoder: prompts were encoded by two multilingual encoders (mCLIP-XLMR and an mT5 encoder), and their embeddings conditioned the diffusion model directly.

Despite positive feedback on Kandinsky 2.0, the research team concluded that incremental improvements would not be enough: matching the image quality of leading competing solutions required a step change.

The rapid progress of generative models, including ControlNet, GigaGAN, GLIGEN, and InstructPix2Pix, along with the growing range of their applications, prompted the team to revisit the architecture. The lessons learned from Kandinsky 2.0 guided these further experiments.

Making Way for Kandinsky 2.1

Kandinsky 2.1 conditions the generation of an image's latent representation on a CLIP image embedding as well as a text embedding. A dedicated image prior model maps the prompt to a CLIP image embedding, and adding this step produced a leap in image generation quality.
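To make the data flow concrete, here is a toy sketch of the generation path described above. Every function (`clip_text_encoder`, `image_prior`, `unet_denoise`, `movq_decode`) is a hypothetical stand-in that uses simple arithmetic in place of a neural network; only the order of the stages, and the fact that the denoiser sees both the text embedding and the prior's image embedding, are meant to mirror Kandinsky 2.1. For simplicity, one stand-in encoder feeds both the prior and the UNet.

```python
import hashlib
import random
from typing import List

Vector = List[float]
EMB_DIM = 8      # toy size; real CLIP embeddings are much larger
LATENT_DIM = 16  # toy size for a flattened 4x4 latent


def clip_text_encoder(prompt: str) -> Vector:
    """Hypothetical stand-in for the text encoder: a deterministic pseudo-embedding."""
    seed = int(hashlib.sha256(prompt.encode("utf-8")).hexdigest(), 16) % (2**32)
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(EMB_DIM)]


def image_prior(text_emb: Vector) -> Vector:
    """Hypothetical stand-in for the image prior: text embedding -> image embedding."""
    return [0.5 * x + 0.1 for x in text_emb]


def unet_denoise(latent: Vector, text_emb: Vector, image_emb: Vector, steps: int) -> Vector:
    """Hypothetical stand-in for the UNet: each step pulls the latent toward a
    target built from BOTH the text embedding and the image embedding."""
    cond = text_emb + image_emb  # concatenated conditioning, length 2 * EMB_DIM
    target = [cond[i % len(cond)] for i in range(len(latent))]
    for _ in range(steps):
        latent = [z + 0.2 * (t - z) for z, t in zip(latent, target)]
    return latent


def movq_decode(latent: Vector) -> List[List[float]]:
    """Hypothetical stand-in for the MoVQ decoder: reshape the latent into a square grid."""
    side = int(len(latent) ** 0.5)
    return [latent[r * side:(r + 1) * side] for r in range(side)]


def generate(prompt: str, steps: int = 10, seed: int = 0) -> List[List[float]]:
    text_emb = clip_text_encoder(prompt)      # 1. encode the prompt
    image_emb = image_prior(text_emb)         # 2. prior: text embedding -> image embedding
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]  # 3. start from noise
    latent = unet_denoise(latent, text_emb, image_emb, steps)  # 4. conditioned denoising
    return movq_decode(latent)                # 5. decode the latent into "pixels"
```

Calling `generate("red cat, 4k photo")` returns a 4×4 grid of floats; the same prompt and seed always give the same grid, while a different prompt changes both conditioning signals and therefore the result.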

The architecture also enables image blending: two CLIP image embeddings can be fed into the trained diffusion model to produce a blended image.
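Blending can be pictured as combining the image embeddings before they condition the diffusion model. The sketch below assumes a plain weighted sum, which, as far as we know, is also how the Hugging Face diffusers `KandinskyPriorPipeline.interpolate` helper combines its inputs; check the library source before relying on that. The function name `blend_embeddings` is hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def blend_embeddings(embeddings: Sequence[Vector], weights: Sequence[float]) -> Vector:
    """Weighted sum of image embeddings (assumed blending rule; weights used as given)."""
    if not embeddings:
        raise ValueError("need at least one embedding")
    if len(embeddings) != len(weights):
        raise ValueError("need exactly one weight per embedding")
    dim = len(embeddings[0])
    if any(len(e) != dim for e in embeddings):
        raise ValueError("all embeddings must have the same dimension")
    # Component i of the result is sum_k weights[k] * embeddings[k][i].
    return [sum(w * e[i] for e, w in zip(embeddings, weights)) for i in range(dim)]
```

For example, `blend_embeddings([[1.0, 0.0], [0.0, 1.0]], [0.3, 0.7])` returns `[0.3, 0.7]`. Weights are typically chosen to sum to 1 so the blended embedding stays on roughly the same scale as its inputs.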

One of the key architectural changes is the move from VQGAN to a specially trained MoVQGAN model as the image autoencoder. This lets the model get more out of its visual decoder and significantly improves the quality of the generated images.
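Both VQGAN and MoVQGAN compress an image into a grid of latent vectors and snap each vector to the nearest entry of a learned codebook. The following minimal sketch shows only that shared quantization step, with a made-up three-entry codebook and hypothetical function names; MoVQ's distinguishing feature, feeding the quantized codes back to modulate the decoder's normalization layers, is not shown.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(latents: Sequence[Vector], codebook: Sequence[Vector]) -> Tuple[List[int], List[Vector]]:
    """Replace each latent vector with its nearest codebook entry.

    Returns the chosen code indices and the quantized vectors."""
    indices = []
    for z in latents:
        best = min(range(len(codebook)), key=lambda k: squared_distance(z, codebook[k]))
        indices.append(best)
    return indices, [list(codebook[k]) for k in indices]
```

With the codebook `[[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]`, the latents `[[0.9, 0.8], [-0.7, 0.9], [0.1, -0.2]]` map to code indices `[1, 2, 0]`.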

The Results: A League of its Own

Kandinsky 2.1 has 3.3 billion parameters in total, spread across a text encoder, an image prior, a CLIP image encoder, a latent diffusion UNet, and a MoVQ encoder/decoder. Trained on multiple datasets, including LAION Improved Aesthetics and LAION HighRes, this architecture produces noticeably better images than its predecessor.
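The 3.3 billion figure can be checked by summing the per-component sizes. The counts below follow the per-component breakdown published in the Kandinsky 2.1 repository README; treat them as approximate and verify them there before relying on them.

```python
# Per-component parameter counts in millions (from the Kandinsky 2.1 README breakdown).
components = {
    "text encoder (XLM-Roberta-Large-Vit-L-14)": 560,
    "diffusion image prior": 1_000,
    "CLIP image encoder (ViT-L/14)": 427,
    "latent diffusion UNet": 1_220,
    "MoVQ encoder/decoder": 67,
}

total_millions = sum(components.values())
print(f"total: {total_millions}M, about {total_millions / 1000:.1f}B")
```

The components add up to 3,274 million parameters, which rounds to the quoted 3.3 billion.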

Kandinsky 2.1 also compares well with similar models on the COCO_30k dataset. Measured by Fréchet Inception Distance (FID), which estimates how close the distribution of generated images is to the distribution of real images (lower is better), Kandinsky 2.1 improves substantially on its predecessor and ranks third among the well-known models it was compared against.
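For reference, FID fits one Gaussian to the Inception-v3 features of real images (mean $\mu_r$, covariance $\Sigma_r$) and another to the features of generated images ($\mu_g$, $\Sigma_g$), then computes the Fréchet distance between the two:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\left(\Sigma_r \Sigma_g\right)^{1/2}\right)
$$

The first term penalizes a shift in the average features and the trace term penalizes differences in their spread, so a lower score means the generated images are statistically closer to the real ones.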

Conclusions and Future Plans

With the launch of Kandinsky 2.1, the team has made progress on several applied image-synthesis tasks, including image mixing, text-guided image alteration, and image inpainting and outpainting.

The Kandinsky team plans to expand the capabilities of the new image encoder, strengthen the text encoder, and experiment with higher-resolution image generation. Continued refinement should extend what Kandinsky can do in image synthesis even further.

Experience Kandinsky 2.1 Today

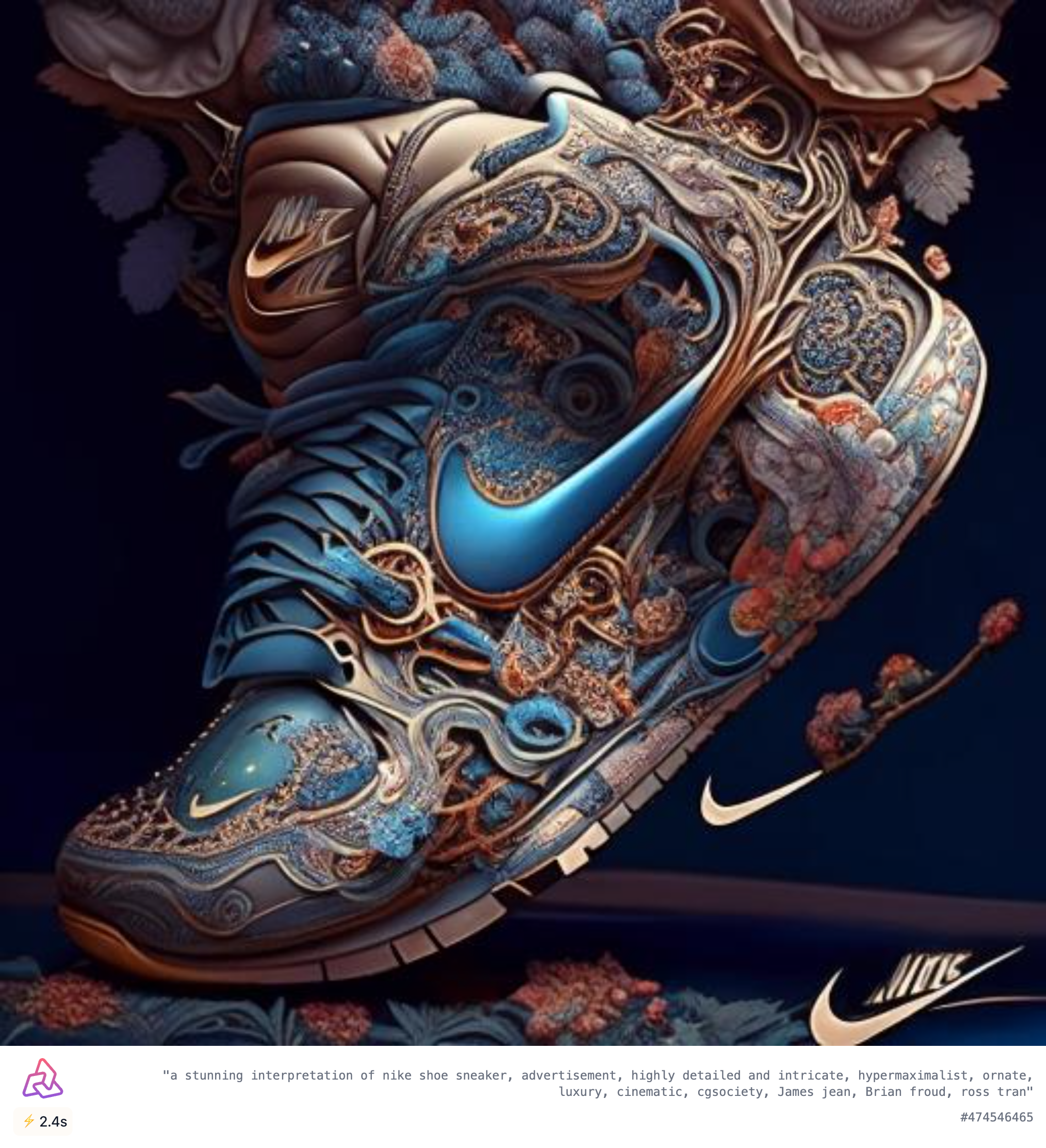

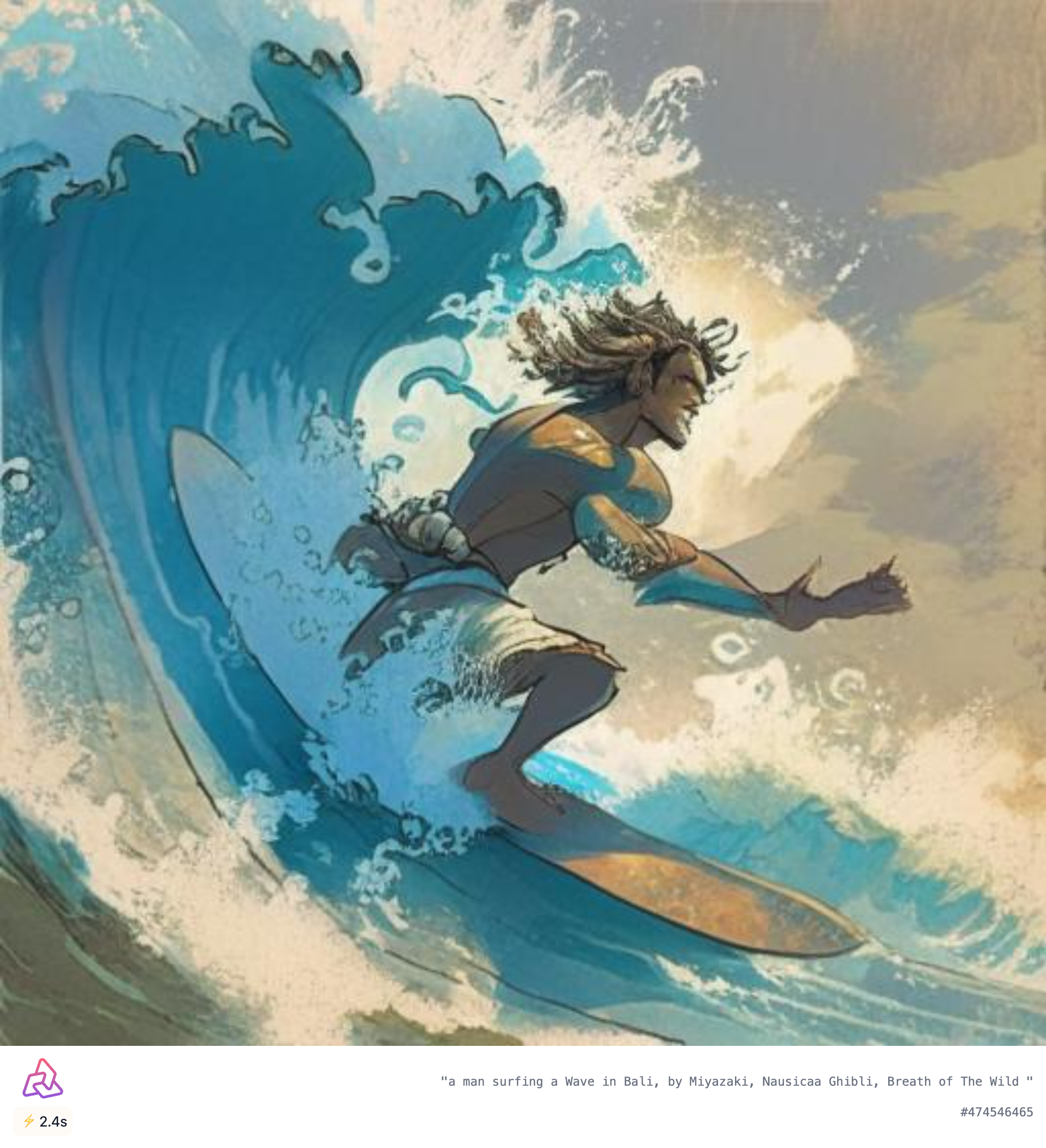

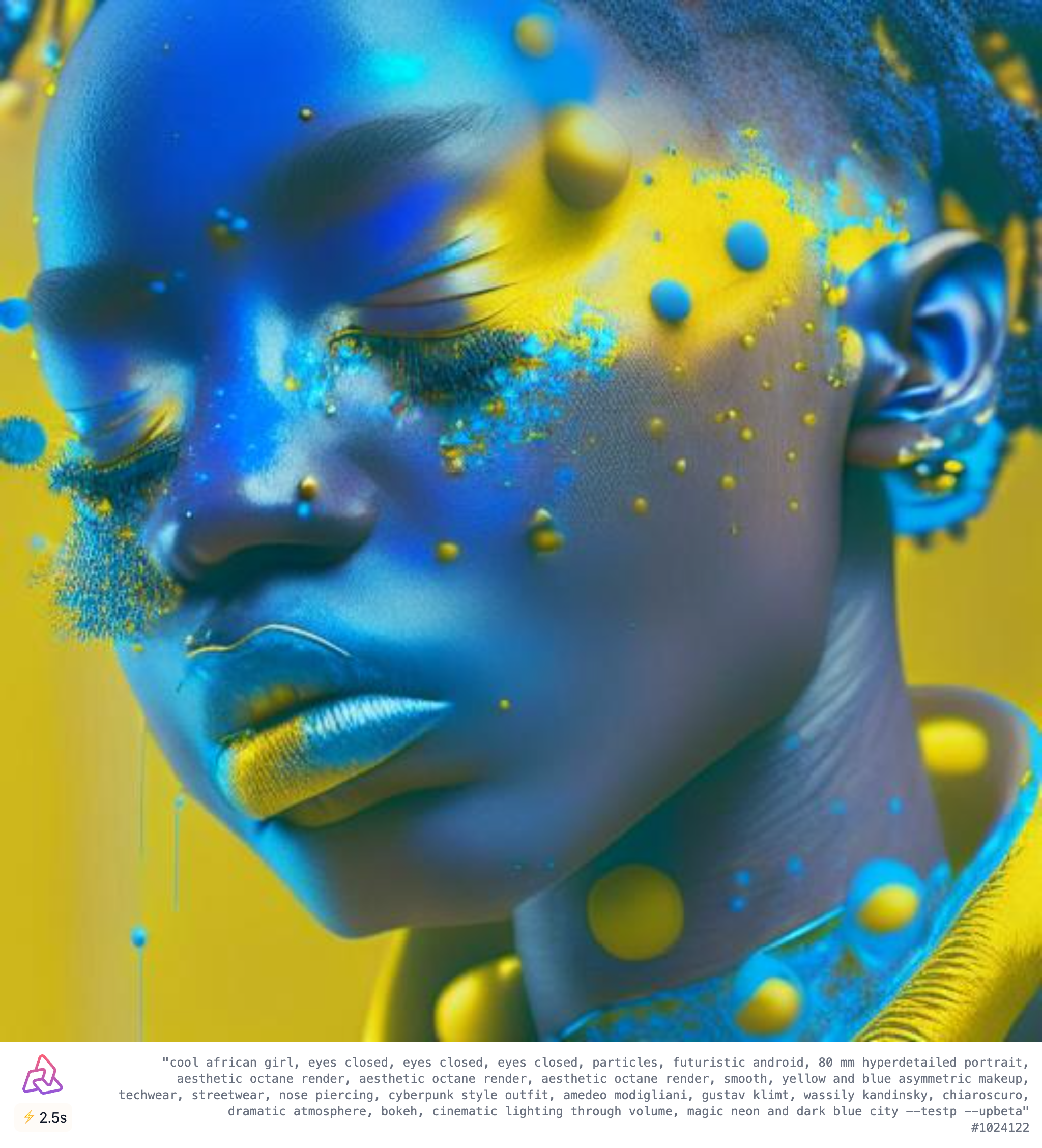

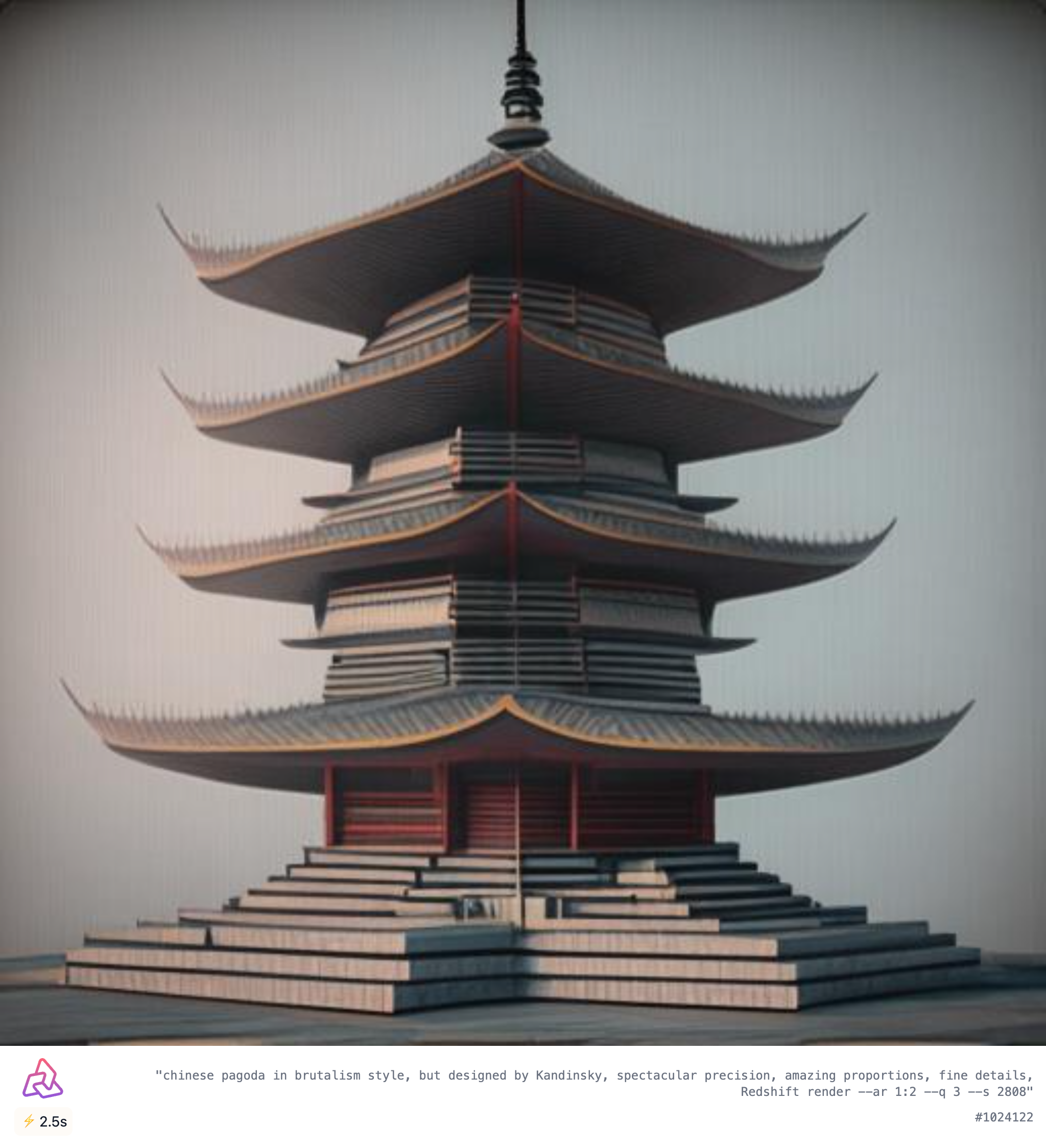

We're excited to announce that an accelerated API endpoint for Kandinsky 2.1 is now available on Segmind. We invite you to try Kandinsky 2.1 and use it to bring your image ideas to life.