How to get the most out of ControlNet Openpose

Learn how to use the ControlNet Openpose model, a ControlNet model purpose-built for Stable Diffusion, to replicate human poses from a single image.

The ControlNet Openpose model is a significant advance in AI-powered image generation. In digital fields such as animation, gaming, fitness, fashion, and virtual reality, ControlNet Openpose has the potential to unlock new possibilities for creatives and developers alike.

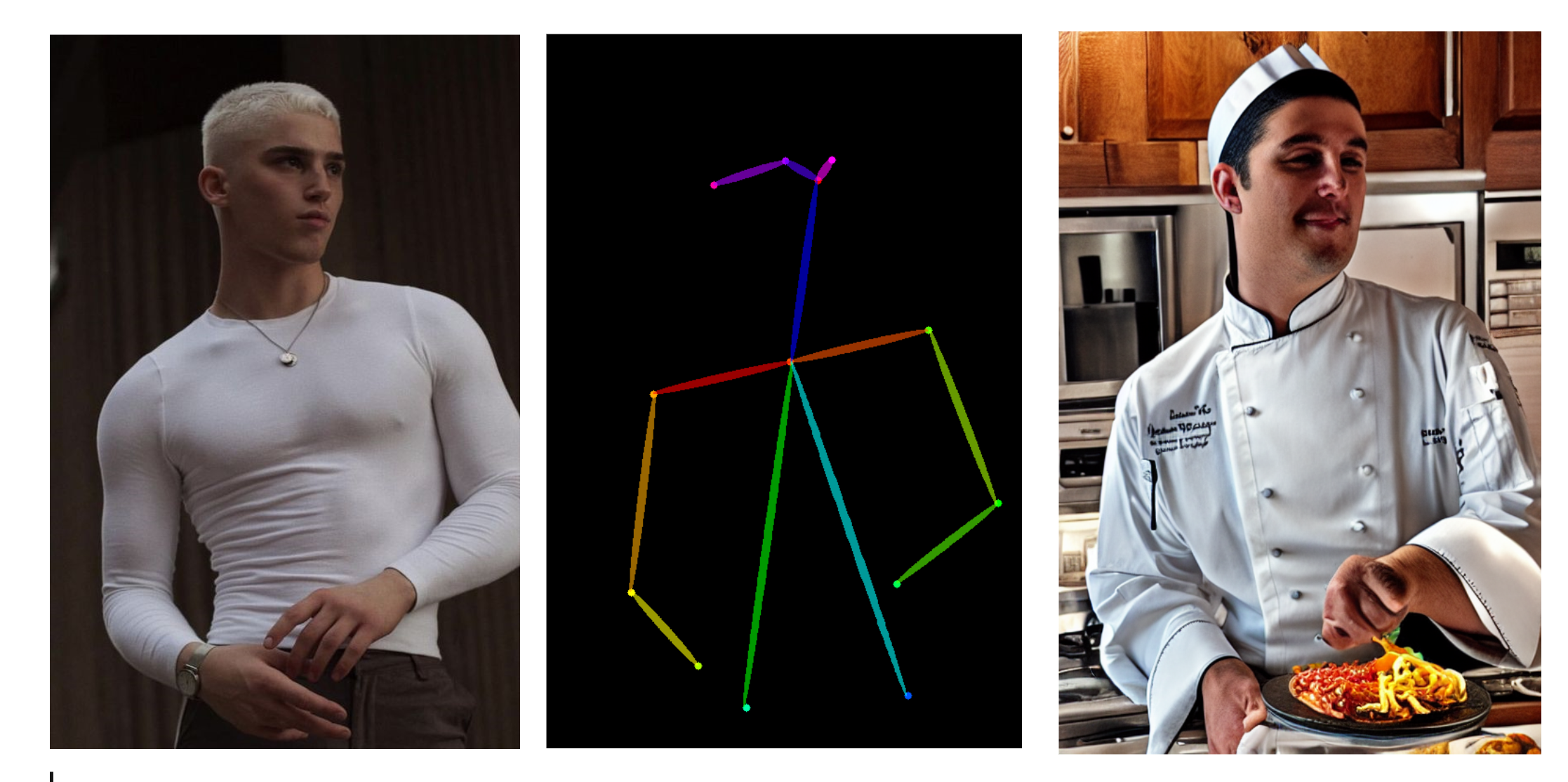

The model combines OpenPose, a fast and efficient human keypoint detection model, with ControlNet, a neural network structure that adds conditional inputs to Stable Diffusion. The result? A model that replicates the human pose in a reference image while leaving other visual aspects of the generated image, such as background, clothing, and hairstyle, open to creative control.

ControlNet Openpose: Origins

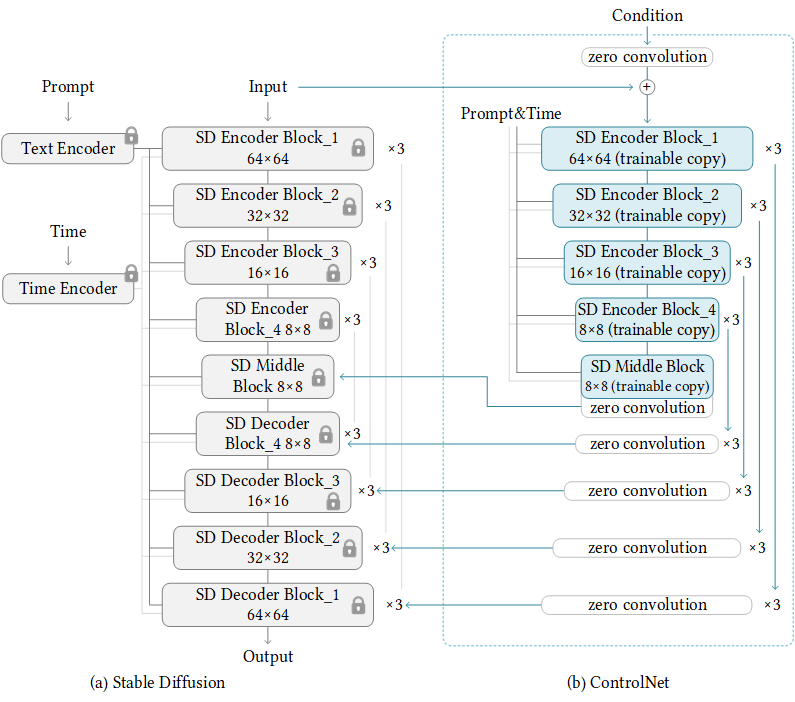

The ControlNet Openpose model builds on ControlNet, first introduced by Lvmin Zhang and Maneesh Agrawala in the research paper "Adding Conditional Control to Text-to-Image Diffusion Models".

How does ControlNet work?

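ControlNet is a neural network structure for controlling Stable Diffusion models with extra conditions. It is flexible: a ControlNet trained on one base model can typically be combined with other Stable Diffusion checkpoints fine-tuned from that same base.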

ControlNet enhances diffusion models with conditional inputs such as edge maps, segmentation maps, and keypoints. Its training is robust even when the training dataset is small, which makes it a practical and versatile solution for many applications. It can also be trained on a personal device, or scaled up on computation clusters to handle datasets of millions to billions of samples.
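How ControlNet adds a condition without disturbing the pretrained model can be sketched in a few lines. In the paper, the original Stable Diffusion block is frozen (the "locked copy"), a trainable copy receives the condition, and the two are joined by "zero convolutions": 1x1 convolutions whose weights and biases start at zero, so the ControlNet contributes nothing until training moves those weights. The toy Python below works on a single pixel's channel vector; `locked_block`, its formula, and all input values are hypothetical stand-ins, not real Stable Diffusion layers.

```python
from typing import Callable, List

Vector = List[float]


class ZeroConv1x1:
    """Toy 1x1 convolution applied to one pixel's channel vector.

    Weights and bias are initialised to zero, mirroring ControlNet's
    "zero convolution" layers.
    """

    def __init__(self, channels: int) -> None:
        self.weight = [[0.0] * channels for _ in range(channels)]
        self.bias = [0.0] * channels

    def __call__(self, x: Vector) -> Vector:
        # out[i] = sum_j weight[i][j] * x[j] + bias[i]
        return [
            sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(self.weight, self.bias)
        ]


def add(a: Vector, b: Vector) -> Vector:
    return [p + q for p, q in zip(a, b)]


def controlnet_block(
    x: Vector,
    condition: Vector,
    locked_block: Callable[[Vector], Vector],
    trainable_copy: Callable[[Vector], Vector],
    zero_in: ZeroConv1x1,
    zero_out: ZeroConv1x1,
) -> Vector:
    """y = F(x) + Z_out(F_copy(x + Z_in(condition)))"""
    frozen_out = locked_block(x)  # original path, weights never updated
    control = zero_out(trainable_copy(add(x, zero_in(condition))))
    return add(frozen_out, control)


# Hypothetical stand-in for one frozen Stable Diffusion encoder block.
def locked_block(x: Vector) -> Vector:
    return [2.0 * v + 1.0 for v in x]


# The trainable copy starts out identical to the locked block.
trainable_copy = locked_block

if __name__ == "__main__":
    features = [0.5, -1.0, 3.0]       # hypothetical latent features
    pose_condition = [9.0, 9.0, 9.0]  # hypothetical encoded pose map
    out = controlnet_block(features, pose_condition, locked_block,
                           trainable_copy, ZeroConv1x1(3), ZeroConv1x1(3))
    print(out == locked_block(features))  # True: no effect before training
```

Because both zero convolutions start at zero, training begins from the unmodified Stable Diffusion output; the paper credits this initialization with protecting the pretrained model from noisy gradients in the first training steps.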

How does ControlNet Openpose work?

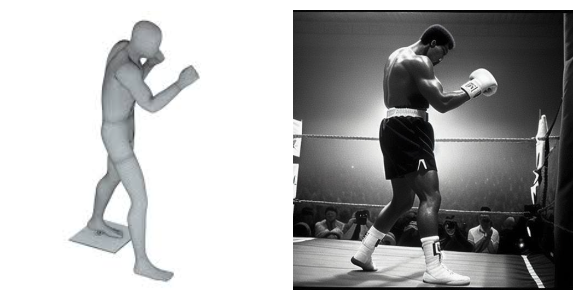

ControlNet Openpose relies on the OpenPose model to detect human pose keypoints, such as the positions of the head, hands, and legs. These detailed keypoints are what allow the model to replicate human poses accurately.

These keypoints are drawn as a stick-figure skeleton on a blank image, called the control map. This map, along with a text prompt, is fed through ControlNet into the Stable Diffusion model, which generates an image that replicates the original pose while giving users the creative freedom to set other attributes like clothing, hairstyles, and backgrounds.
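The keypoints-to-control-map step can be illustrated with a small, stdlib-only Python sketch. It assumes a simplified, hypothetical skeleton (`LIMBS`) and a hypothetical confidence cutoff (`MIN_CONFIDENCE`), and it draws each limb as a line of integer "color" IDs on a blank canvas. The actual OpenPose preprocessor used with ControlNet works on a fuller body layout and draws colored limbs and joints on a black RGB image, so treat this only as an approximation of the idea.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]

# Hypothetical, simplified skeleton: pairs of keypoint names joined by a limb.
LIMBS = [
    ("head", "neck"),
    ("neck", "l_hand"),
    ("neck", "r_hand"),
    ("neck", "hip"),
    ("hip", "l_foot"),
    ("hip", "r_foot"),
]

MIN_CONFIDENCE = 0.3  # hypothetical: keypoints scored below this are skipped


def line_points(a: Point, b: Point) -> List[Point]:
    """Integer points on the segment a-b (Bresenham's line algorithm)."""
    (x0, y0), (x1, y1) = a, b
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    points = []
    while True:
        points.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return points
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def render_control_map(
    keypoints: Dict[str, Tuple[int, int, float]], width: int, height: int
) -> List[List[int]]:
    """Draw confident limbs onto a blank canvas (0 = background)."""
    canvas = [[0] * width for _ in range(height)]
    visible = {
        name: (x, y)
        for name, (x, y, conf) in keypoints.items()
        if conf >= MIN_CONFIDENCE
    }
    for limb_id, (start, end) in enumerate(LIMBS, start=1):
        if start in visible and end in visible:
            for x, y in line_points(visible[start], visible[end]):
                if 0 <= x < width and 0 <= y < height:
                    canvas[y][x] = limb_id  # each limb gets its own "color"
    return canvas


if __name__ == "__main__":
    # (x, y, confidence) per keypoint; coordinates and scores are made up.
    detected = {
        "head": (4, 0, 0.9),
        "neck": (4, 2, 0.95),
        "l_hand": (1, 4, 0.8),
        "r_hand": (7, 4, 0.1),  # below MIN_CONFIDENCE, so its limb is skipped
        "hip": (4, 5, 0.9),
        "l_foot": (2, 8, 0.85),
        "r_foot": (6, 8, 0.85),
    }
    for row in render_control_map(detected, width=9, height=9):
        print("".join("." if v == 0 else str(v) for v in row))
```

Dropping low-confidence keypoints keeps the map from encoding limbs the detector is unsure about; the rendered canvas is the kind of image ControlNet then receives alongside the text prompt.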

Advantages of ControlNet Openpose

Compared with traditional pose imitation methods, ControlNet Openpose stands out for its versatility: OpenPose provides precise pose extraction, while ControlNet leaves users free to manipulate the other elements of the generated image.

The model's robust training process, reliable performance, and ability to learn from limited training data make it accessible to developers and researchers with varying computational resources. Its adaptable design also invites experimentation and fine-tuning for specific use cases.

Applications of ControlNet Openpose

ControlNet Openpose finds application across various use cases and industries, ranging from entertainment to healthcare and beyond. Its capacity to accurately replicate human poses from reference images opens up possibilities for more realistic animations, improved sports training, better physical therapy, enhanced fashion design, and more immersive virtual reality experiences.

Here are some key scenarios where the model truly shines:

- Animation and Gaming: ControlNet Openpose enables the creation of realistic character movements by accurately replicating human poses from reference images. Game developers can add life-like character interactions and build more immersive gaming experiences.

- Fitness and Sports: Athletes and sports professionals benefit from ControlNet Openpose's ability to analyze and replicate exact poses, aiding in training and injury prevention. Coaches can use the generated images for visual cues and feedback, helping athletes refine their techniques and improve performance.

- Physical Therapy: By replicating the poses used in various exercises and treatments, ControlNet Openpose can play a significant role in physical therapy for patients across a range of treatments. Physical therapists can create personalized exercise programs and monitor patients' progress more effectively.

- Fashion and Apparel Design: Designers can create virtual models with accurate human poses for fitting and design visualizations, leading to more precise clothing design and lower production costs.

- Virtual Reality: ControlNet Openpose enhances the immersive experience by making interactions more natural, with realistic human movements.

Get Started with ControlNet Openpose

Running the ControlNet Openpose model locally with the necessary dependencies can be computationally expensive and time-consuming. That’s why we offer ControlNet Openpose, along with 30 other AI models, free to use.

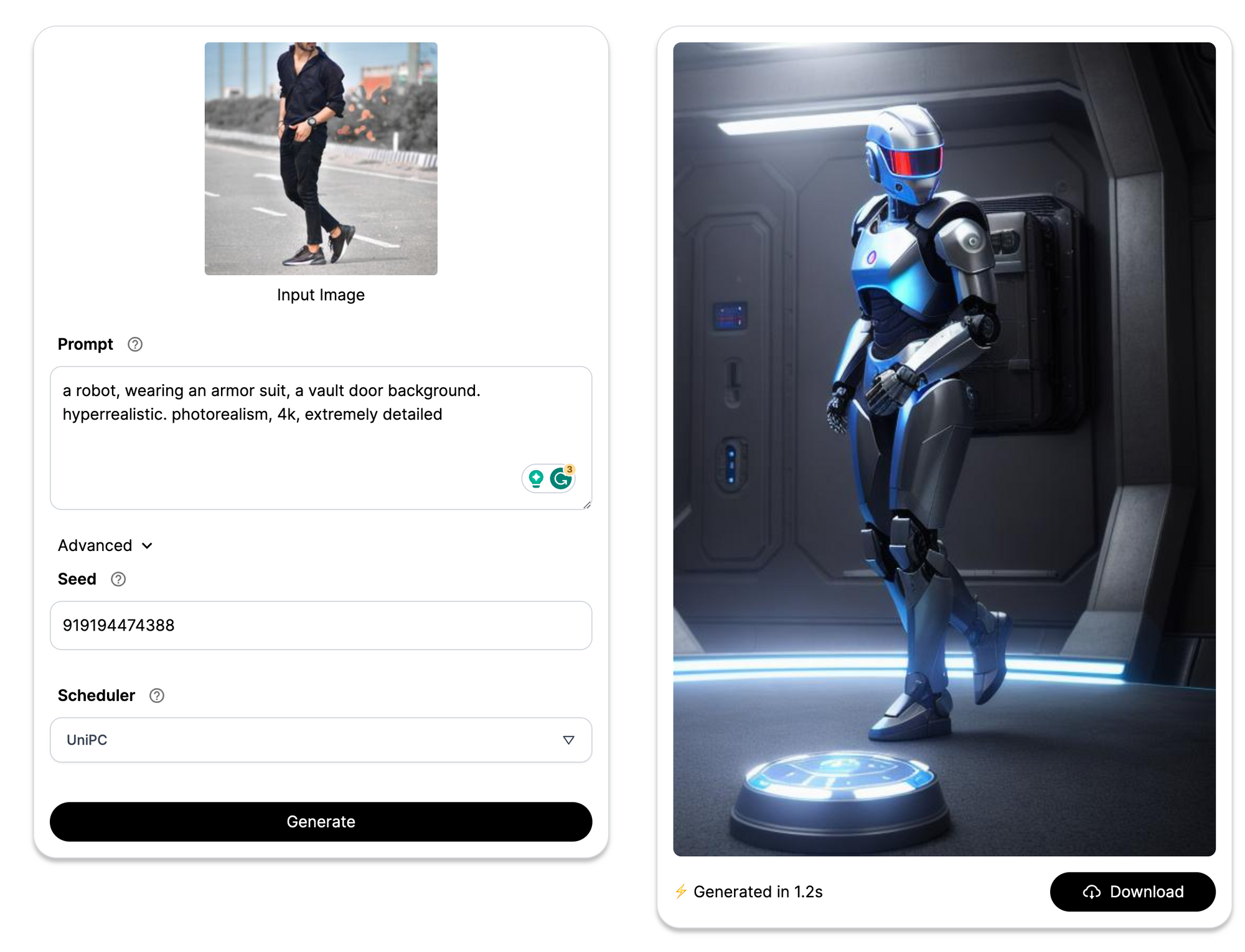

To get started, follow these steps:

- Create your free account on Segmind.com

- Once you’ve signed in, click the ‘Models’ tab and select ‘ControlNet Openpose’

- Upload the image with the pose you want to replicate

- Enter a text prompt to specify any other attributes you want to control, like clothing, features, background, or image style

- Click ‘Generate’

- Witness the magic of ControlNet Openpose in action!

ControlNet Openpose license

The ControlNet Openpose model is licensed under the CreativeML Open RAIL-M license, a form of the Responsible AI License (RAIL) designed to promote both open and responsible use of the model. You may add your own copyright statement to your modifications and provide additional or different license terms for them. Remember that you are accountable for the output you generate using the model, and no use of that output may contravene any provision of the CreativeML Open RAIL-M license.