Latest Seedance News: The Features Reshaping AI Video Creation in 2026

Stay ahead with the latest Seedance news. Explore feature updates on rendering tiers, audio-visual generation, and benchmarks, and learn how to use them in production workflows.

You keep seeing Seedance news surface in updates and community threads, but the details never feel complete. Features appear promising, yet it is hard to tell what changed, what is stable, and what still needs work.

When clarity is missing, experimentation slows down. You hesitate before committing Seedance to production, and questions about reliability or control quietly grow, including whether its limitations stem from design choices or from moderation policies.

This matters because Seedance is not a side project. As a core product from ByteDance, feature updates can affect how you plan workflows, allocate budgets, and ship media-driven experiences at scale. Understanding the latest updates, beyond Seedance Wikipedia summaries, helps you build with confidence.

Quick Takeaways

- Seedance now offers Lite, Pro Fast, and Pro tiers with variable durations, letting you balance speed, cost, and fidelity at each stage of production.

- The launch of Seedance 1.5 Pro enables true audio-visual generation. Audio, dialogue, camera motion, and visuals are generated together to improve lip sync, timing, and narrative coherence across languages and use cases.

- Autonomous camera logic, stronger semantic understanding, and expressive sound design support ads, short films, games, and branded storytelling.

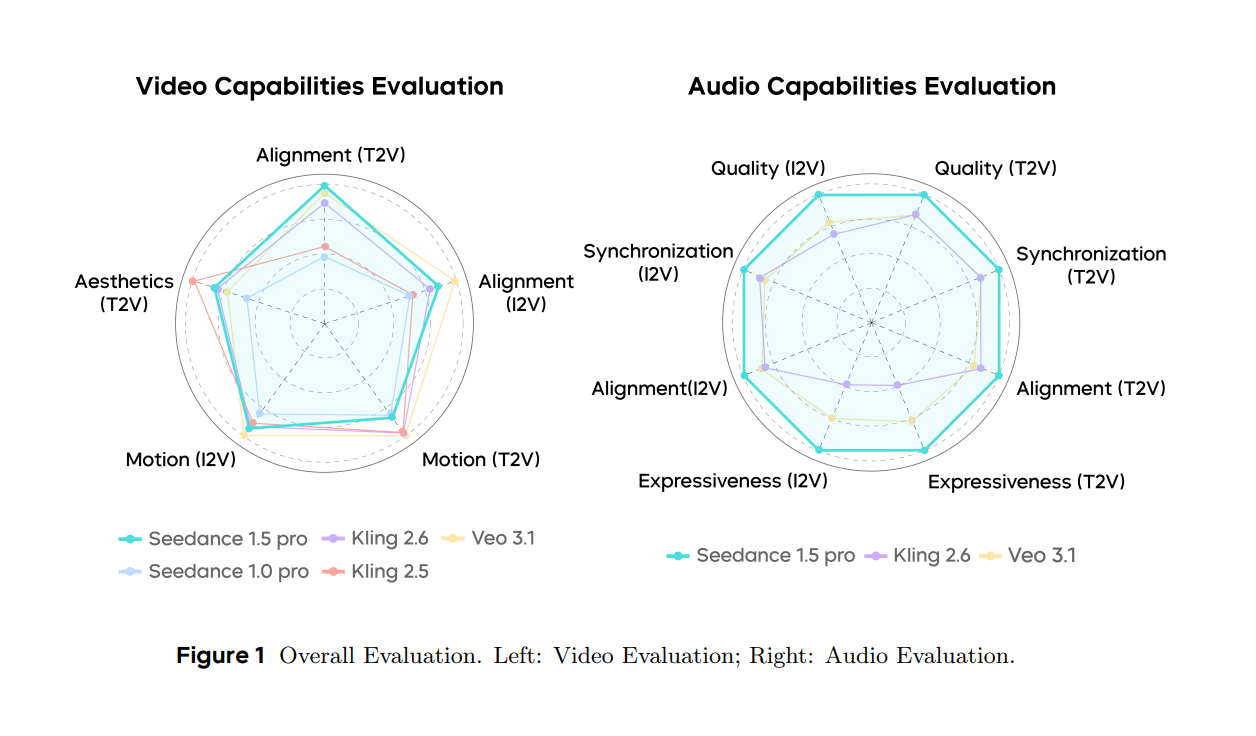

- Performance is benchmarked, not assumed. SeedVideoBench 1.5 shows strong prompt adherence, audio-visual sync, and voice realism, with clear limits around motion density and clip length.

- With PixelFlow, transparent pricing, and post-processing models, Segmind makes Seedance updates usable in production-ready pipelines.

Seedance: A Quick Overview Before the Updates

Seedance is ByteDance’s in-house generative media system built for high-quality video creation at scale. It focuses on text-to-video and image-to-video generation, with strong emphasis on motion realism, shot consistency, and cinematic sequencing.

Seedance supports multi-shot narratives, consistent characters across scenes, and controlled camera motion. These capabilities make it relevant for ads, short films, branded content, and platform-scale media generation.

What matters more for you is how Seedance behaves in real workflows. It is opinionated, optimized for scale, and built with moderation and performance trade-offs that directly affect iteration speed. That context is essential before diving into the latest Seedance updates, because each new feature reflects how ByteDance is positioning Seedance for users.

Flexible Rendering Tiers and Smarter Pricing

This major Seedance update focuses on one thing you care about most: control. You now decide how fast, how detailed, and how expensive each generation should be, instead of adapting your workflow to a single rigid model.

Smarter Rendering Tiers for Real Workflows

Seedance now lets you choose the rendering tier per generation, based on speed, quality, and cost.

Available tiers

- 1.0 Lite: Optimized for fast 720p outputs and early-stage experimentation

- 1.0 Pro Fast: Balanced 1080p quality with shorter turnaround times

- 1.0 Pro: Maximum 1080p fidelity when detail and polish matter most

Why this matters: You can prototype quickly, validate concepts, and switch to higher tiers only when visuals are locked. That saves time and compute.
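A minimal sketch of the "choose the tier per generation" idea, mapping production stages to the three tiers listed above. The tier identifier strings and stage names are hypothetical placeholders, not official model names; substitute whatever identifiers your provider uses.

```python
from enum import Enum

# Hypothetical tier identifiers; replace with your provider's actual model names.
class Tier(str, Enum):
    LITE = "seedance-1.0-lite"
    PRO_FAST = "seedance-1.0-pro-fast"
    PRO = "seedance-1.0-pro"

# Output resolution per tier, as described in the tier list above.
RESOLUTION = {Tier.LITE: "720p", Tier.PRO_FAST: "1080p", Tier.PRO: "1080p"}

def pick_tier(stage: str) -> Tier:
    """Choose a rendering tier for a production stage (stage names are illustrative)."""
    if stage in ("ideation", "prompt_testing"):
        return Tier.LITE        # fast, cheap drafts while the concept is still moving
    if stage in ("review", "ab_test"):
        return Tier.PRO_FAST    # 1080p with shorter turnaround for review rounds
    if stage == "final":
        return Tier.PRO         # maximum fidelity once visuals are locked
    raise ValueError(f"unknown stage: {stage!r}")

for stage in ("ideation", "review", "final"):
    tier = pick_tier(stage)
    print(stage, tier.value, RESOLUTION[tier])
```

Keeping the stage-to-tier mapping in one function means a pipeline can promote a prompt from drafts to finals by changing only the stage label.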

Also Read: Seedance 1.0 Prompt Guide for Stabilized Cinematic Output

Flexible Durations with Transparent Pricing

You are no longer locked into a fixed clip length. Seedance now supports variable video durations from 3 to 12 seconds, with pricing adjusted accordingly.

What changed?

- Short clips cost less.

- Longer clips scale predictably.
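To budget with variable durations, a simple estimator helps. This sketch assumes pricing scales linearly with clip length, which is an assumption (the article only says longer clips "scale predictably"), and the per-second rate below is a placeholder, not Segmind's or ByteDance's actual price. Pass a different rate for each tier.

```python
# Duration range described above for the 1.0 tiers.
MIN_SECONDS, MAX_SECONDS = 3, 12

def estimate_cost(duration_s: int, rate_per_second: float) -> float:
    """Estimate the cost of one clip, assuming pricing scales linearly with duration."""
    if not MIN_SECONDS <= duration_s <= MAX_SECONDS:
        raise ValueError(f"duration must be {MIN_SECONDS}-{MAX_SECONDS} s, got {duration_s}")
    return duration_s * rate_per_second

def estimate_batch(durations: list[int], rate_per_second: float) -> float:
    """Total cost for a batch of clips, e.g. many short drafts plus a few finals."""
    return sum(estimate_cost(d, rate_per_second) for d in durations)

PLACEHOLDER_RATE = 0.02  # NOT a real price; use your provider's published per-tier rate
drafts = [4] * 20        # twenty 4-second exploration clips
finals = [12] * 2        # two 12-second final renders
print(f"drafts: {estimate_batch(drafts, PLACEHOLDER_RATE):.2f}")
print(f"finals: {estimate_batch(finals, PLACEHOLDER_RATE):.2f}")
```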

Quick Model Suitability

| Use case | Recommended tier |
|---|---|
| Prompt testing and ideation, social media, stylized animation | 1.0 Lite |
| Marketing, storytelling, A/B testing | 1.0 Pro Fast |
| Cinematic or branded content (commercials, branded films) | 1.0 Pro |

Key Insight: Seedance is moving toward modular, workflow-first control that better aligns with how developers, creators, and enterprise teams actually work.

Also Read: Seedance 1.0 Pro Fast Now Available on Segmind

Try Seedance 1.0 Pro Fast on Segmind when you need a reliable balance of speed and quality.

Introduction of Seedance 1.5 Pro: Bringing Native Audio-Visual Generation

Seedance 1.5 Pro marks a structural shift in how video is generated. Instead of producing visuals first and layering sound later, the model now creates audio and video together. This foundational change expands Seedance beyond purely visual generation.

How Seedance 1.5 Pro Raises the Performance Ceiling

Seedance 1.0 focused on reliability, improving motion stability so that outputs were consistently usable. Seedance 1.5 Pro targets expressive quality. Here's how:

1. Precise Audio-Visual Synchronization Across Languages and Dialects

Seedance 1.5 Pro introduces a much higher level of audio-visual consistency during generation. This accuracy holds even in close-ups and emotionally charged dialogue, where minor timing errors are usually most noticeable.

What improves?

- More accurate lip movement alignment

- Natural intonation that matches facial expression

- Performance rhythm that stays consistent with scene pacing

The model natively supports multiple global languages and regional dialects. It preserves vocal pitch, loudness, timing, accent nuances, and emotional tension, instead of flattening speech into a generic delivery.

Key Takeaway: You spend far less time re-timing dialogue, tweaking lip sync, or compensating for awkward pauses. That reduces post-production effort and makes narrative content easier to scale.

2. Cinematic Camera Control and Dynamic Tension

Seedance 1.5 Pro introduces autonomous cinematography, which decides how the camera should move based on narrative context. Supported behaviors include:

- Continuous long takes

- Dolly zooms and push-ins

- Rapid pans and controlled cuts

- Cinematic transitions and color grading

3. Stronger Semantic Understanding and Narrative Coherence

The model now interprets story context, not just isolated prompts.

What improves?

- Emotional progression across shots

- Logical sequencing of scenes

- Consistent tone across audio and visuals

Now, let's look at some particular use-case scenarios where the 1.5 Pro model shines.

Use Case 1: Cinematic Storytelling with Emotional Detail

Seedance 1.5 Pro excels at subtle expression. It interprets complex emotional states and translates them into expressive visual storytelling, which is most visible in close-up shots. Even without dialogue:

- Facial micro-expressions remain stable.

- Emotional tension builds gradually.

Example: In a grounded, contemporary US setting, the model infers emotional context directly from the prompt and renders a multi-layered emotional transition. A character processes loss quietly, with expression, lighting, and ambient sound evolving in sync.

Prompt: A quiet American desert town at sunrise. A handheld camera follows a woman in her early thirties as she walks past an abandoned gas station. The shot tightens into a close-up as she pauses, eyes heavy with restrained grief. As warm morning light spreads across the scene, her expression subtly shifts toward calm resolve. Fine film grain, shallow depth of field, and natural ambient wind sound reinforce the stillness.

Seedance 1.5 Pro can also construct multi-shot narrative sequences from a single prompt, while preserving emotional continuity and story logic across cuts.

Prompt 1 Video

Example: In a slice-of-life scene set in the US, the model generates a complete emotional arc across multiple shots, with dialogue, sound, and visuals evolving naturally.

Prompt: Summer evening at a small-town county fair in the United States. String lights glow above a crowded midway. The camera starts wide, capturing Ferris wheel lights and distant music, then slowly zooms in on two teenagers near a food stand. A close-up shows the girl gathering courage before speaking. She softly confesses her feelings. The surrounding crowd noise fades as they share a quiet embrace, with fairground sounds and music blending into a warm, cohesive atmosphere.

Why This Matters: You can move beyond visually impressive but emotionally shallow clips. This is essential for narrative films, short dramas, branded storytelling, and character-driven content.

Prompt 2 Video

Use Case 2: Stylized Comedy and Multilingual Performance

The model supports expressive speech across languages and accents. It handles languages such as English, Spanish, Japanese, Korean, Indonesian, and Chinese with natural delivery, rather than flattened or robotic speech.

In Chinese-language scenarios, the model goes further. It can simulate regional accents and dialects, including Sichuanese and Cantonese, while preserving their unique humor and emotional tone.

Example: In a short-form character-led clip, it generates a complete comedic beat in Sichuan dialect, with accurate timing between speech, expression, and camera movement.

VIDEO:

Prompt: Realistic, high-quality video. Inside a small street-side convenience shop, a delivery robot pauses mid-task and turns toward the camera. In Sichuanese, it complains: “我跟你说嘛,一天到晚跑来跑去,电都要跑没得了。”(“I’m telling you, running around all day like this, I’m about to run out of battery.”) After a brief pause, it adds with a tired sigh: “结果他们买得最多的还是泡面和功能饮料,安逸得很嘛。” (“And what do they buy the most? Instant noodles and energy drinks. Living the easy life, huh.”) The camera slowly pushes in. The robot leans closer and delivers the punchline: “说实话哦,我不是送货的,我是给大家续命的。” (“Honestly, I’m not a delivery robot. I’m here to extend everyone’s lifespan.”) Soft motor sounds and shop ambience remain tightly synced with the delivery.

Who can use this (and where):

- Developers generating character-based ad creatives

- Marketing teams localizing humor across regions

- Creators building short skits and social clips

- Product teams testing branded regional mascots or virtual presenters

Use Case 3: High-Motion Scenes with Professional Camera Logic

Seedance 1.5 Pro introduces autonomous camera scheduling, which allows the model to plan and execute complex camera movements without manual sequencing. This is especially important in scenes that demand precision, speed, and product focus.

The model handles fast or continuous motion smoothly while keeping the subject framed correctly throughout the shot.

Example: In a commercial-style product video, Seedance 1.5 Pro applies professional camera logic to maintain visual clarity and momentum.

VIDEO

Prompt: Commercial-style video set inside a modern, minimalist mansion at sunset. Warm light reflects across a polished marble floor. A premium black robotic floor cleaner moves autonomously across the room, emitting a soft blue scanning light. The camera begins with a slow forward push, then transitions into a low-angle tracking shot at floor level, closely following the machine’s movement. Subtle mechanical sounds blend with a calm female AI narration: “It’s the dust you can’t see that causes allergies. With laser dust detection and intelligent power adjustment, this is how you walk barefoot with confidence again.”

This capability is ideal for:

- Product promo videos and launch teasers

- Performance-focused ads and explainer clips

Use Case 4: Gaming and Ambient Sound Design

The 1.5 Pro model demonstrates a strong understanding of ambient sound effects and musical atmosphere, generating them in direct response to on-screen visuals. The model layers background audio, environmental effects, and music to create spatial awareness.

Example: In a short gameplay-style clip designed for an indie game promo, it keeps motion, camera behavior, and sound effects synchronized throughout the clip.

VIDEO

Prompt: Pixel-art side-scrolling game style. A hero runs across rooftops at dusk, jumps between platforms, and lands with momentum. The camera smoothly tracks the character’s movement. Each jump triggers an 8-bit sound effect, landings hit with heavier audio cues, and a retro soundtrack adapts in tempo as the action speeds up.

This capability is handy for:

- Game developers creating gameplay trailers

- Studios prototyping in-game cutscenes

- Marketing teams producing teaser clips

Inside the Architecture: Why Seedance 1.5 Pro Works

- Multimodal Architecture: It uses one shared model for audio and video generation. Sound and visuals influence each other during creation, which keeps timing, meaning, and emotion aligned. Training on large mixed-modality datasets helps the model stay consistent across complex prompts and different content types.

- Multi-stage data pipeline focused on coherence: Training data is structured in stages to prioritize motion expressiveness, audio-video consistency, and contextual understanding. The data includes richer video captions as well as audio descriptions.

- Audio-visual fine-tuning with human feedback: After initial training, the model is refined on high-quality audio and video examples and then with reinforcement learning from human feedback (RLHF). This improves motion quality, visual aesthetics, and audio realism.

- Efficient inference acceleration: Through model distillation and infrastructure optimizations such as quantization and parallel execution, the model generates results up to 10x faster than without these optimizations, while preserving quality and consistency.
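To make "quantization" concrete, here is a generic illustration of symmetric per-tensor int8 weight quantization, a common inference-acceleration technique. The article does not describe ByteDance's actual scheme, so treat this as a textbook sketch of the idea, not Seedance's implementation: weights are stored as small integers plus one scale factor, trading a bounded rounding error for less memory traffic.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero for all-zero tensors
    scale = max_abs / 127.0
    # Round to the nearest integer step and clamp to the int8 range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]

weights = [0.5, -1.0, 0.25, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each restored weight is within half a quantization step of the original.
print(q, max(abs(a - b) for a, b in zip(weights, restored)))
```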

Performance Benchmarks and Reality Check

To objectively evaluate Seedance 1.5 Pro, ByteDance introduced SeedVideoBench 1.5, a benchmark developed in collaboration with film directors and technical experts. It assesses visual quality and audio performance together rather than treating them as unrelated scores.

Where Seedance 1.5 Pro stands out

- Strong prompt adherence for actions, camera work, and scene intent

- Smooth execution of complex camera movements aligned with reference styles

- High audio-visual synchronization with precise timing and rhythm

- Natural, less robotic-sounding voices

- Realistic sound effects with convincing spatial depth

Reality check: Motion stability still has room to improve in demanding scenarios, and multi-character dialogue and singing need further refinement. Also, as of January 2026, the maximum supported clip length is 15 seconds, which limits longer-form workflows.
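Until longer clips are supported, a longer piece has to be planned as several clips and joined in an editor. This sketch splits a target runtime into clip durations that respect a maximum length (passed in, since limits differ by model) and a 3-second minimum, spreading seconds evenly. It only plans durations; keeping characters and style consistent across segments is still up to your prompts and review.

```python
def plan_segments(total_s: int, max_s: int, min_s: int = 3) -> list[int]:
    """Split a target runtime into clip durations, each within [min_s, max_s]."""
    if total_s < min_s:
        raise ValueError("total runtime is shorter than the minimum clip length")
    n = -(-total_s // max_s)            # ceiling division: fewest clips that fit
    base, extra = divmod(total_s, n)    # spread seconds as evenly as possible
    segments = [base + 1] * extra + [base] * (n - extra)
    if min(segments) < min_s:
        raise ValueError("cannot split without a clip below the minimum length")
    return segments

print(plan_segments(30, max_s=12))  # a 30-second spot within a 12-second limit
print(plan_segments(47, max_s=15))  # a 47-second piece within a 15-second limit
```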

Also Read: Seedance 1.0 Pro vs Veo 3: Which AI Video Model Wins for Pros

How Segmind Helps You Use Seedance Effectively

Segmind is a cloud-based media automation platform that lets you apply Seedance updates in real production workflows, not just test features in isolation. As Seedance adds new tiers, durations, and audio-visual capabilities, Segmind helps you adopt them without workflow friction.

Here’s how it supports practical Seedance usage:

- Access to Seedance models within one platform: You can work with Seedance variants from a single serverless API-based platform. Switching between tiers does not require tool changes or prompt rewrites.
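A minimal sketch of what "switching tiers without prompt rewrites" can look like against a serverless HTTP API: the prompt and settings stay fixed and only the model identifier in the URL changes. The base URL and `x-api-key` header reflect Segmind's documented REST pattern as far as we know, but verify them in Segmind's API docs; the model slugs and payload field names here are hypothetical. The function builds the request without sending it.

```python
import json
import urllib.request

API_BASE = "https://api.segmind.com/v1"  # assumption: confirm in Segmind's API reference

def build_request(model_slug: str, prompt: str, duration_s: int, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a generation request; only the slug changes between tiers."""
    payload = {"prompt": prompt, "duration": duration_s}  # hypothetical field names
    return urllib.request.Request(
        url=f"{API_BASE}/{model_slug}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"x-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )

# Same prompt, two tiers: only the (hypothetical) slug differs.
prompt = "A quiet desert town at sunrise, handheld camera, natural wind sound."
draft_req = build_request("seedance-lite-example", prompt, 5, "YOUR_API_KEY")
final_req = build_request("seedance-pro-example", prompt, 5, "YOUR_API_KEY")
# To actually send a request: urllib.request.urlopen(final_req)
print(draft_req.full_url, final_req.full_url)
```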

- Cost-efficient scaling with transparent pricing: Seedance generations on Segmind average ~$0.251 per generation, which makes experimentation affordable. You pay only for what you generate.

- PixelFlow for staged video workflows: PixelFlow lets you design multi-step pipelines where different Seedance tiers handle different stages. You can explore ideas early, then move to higher-quality outputs.
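The staged pattern PixelFlow is used for can be sketched in plain Python: explore several prompt variants on a cheap tier, score them, then re-render only the winner on a high-fidelity tier. This is not PixelFlow's API; `generate` and `score` are hypothetical stand-ins for your own generation call and review step, and the tier labels are placeholders.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Clip:
    tier: str
    prompt: str
    score: float = 0.0

def staged_render(prompts: list[str],
                  generate: Callable[[str, str], Clip],
                  score: Callable[[Clip], float],
                  draft_tier: str = "lite",
                  final_tier: str = "pro") -> Clip:
    """Draft every prompt on a cheap tier, then re-render the best one at high fidelity."""
    drafts = []
    for p in prompts:
        clip = generate(draft_tier, p)   # cheap exploration pass
        clip.score = score(clip)         # human rating or automated check
        drafts.append(clip)
    best = max(drafts, key=lambda c: c.score)
    return generate(final_tier, best.prompt)  # spend the expensive tier only once
```

With N variants, this costs N cheap draft generations plus a single expensive one, instead of N expensive ones.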

- Post-video extension using Segmind’s model ecosystem: After generation, you can add captioning, voice layers, or post-processing models if required, using 500+ models and built-in templates.

Wrapping Up

AI video creation is no longer about generating a single impressive clip. It involves control, iteration, and reliability across an entire workflow. The latest Seedance news and updates show a clear shift in that direction: flexible rendering tiers and variable durations, native audio-visual generation, and cinematic camera logic.

Understanding these changes helps you make better decisions. You know when to prioritize speed, when fidelity matters, and how audio, motion, and narrative now work together instead of in isolation. This is where Segmind fits naturally. Instead of experimenting in fragments, you can use Seedance inside structured pipelines. With PixelFlow, granular controls, and cost-efficient scaling, you move from testing features to shipping repeatable results.

If you wish to turn the recent Seedance news into practical, production-ready workflows, explore Seedance on Segmind.

FAQs

1. Is Seedance 1.5 Pro suitable for production use, or is it still experimental?

Seedance 1.5 Pro is production-capable for ads, short films, branded content, and narrative clips. You should still test motion-heavy or multi-character scenes carefully, as scenes with extreme motion density or singing may require additional iteration.

2. Is Seedance meant to replace a full creative pipeline?

Seedance works best as a generation layer, not a complete pipeline replacement. You still need orchestration, asset management, review loops, and post-processing tools to ship production-ready media at scale.

3. How should teams integrate Seedance into automated workflows?

You should treat Seedance as one node in a larger system. Pair it with workflow tools that manage retries, prompt variations, evaluation rules, and downstream editing to achieve reliable, scalable results.
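A minimal sketch of treating generation as one node with retries, prompt variations, and an evaluation rule. `generate`, `passes`, and `TransientError` are hypothetical placeholders for your API call, your acceptance check (duration, quality score, human approval), and whatever error your client raises on temporary failures.

```python
import time
from typing import Callable, Optional

class TransientError(Exception):
    """Hypothetical error for temporary failures such as timeouts or rate limits."""

def generate_with_retries(prompt_variants: list[str],
                          generate: Callable[[str], bytes],
                          passes: Callable[[bytes], bool],
                          attempts_per_prompt: int = 2,
                          backoff_s: float = 1.0) -> Optional[bytes]:
    """Try each prompt variant a few times; return the first output that passes evaluation."""
    for prompt in prompt_variants:
        for attempt in range(attempts_per_prompt):
            try:
                result = generate(prompt)
            except TransientError:
                time.sleep(backoff_s * 2 ** attempt)  # exponential backoff before retrying
                continue
            if passes(result):
                return result
            # Output failed evaluation: generation is stochastic, so retry the same prompt.
    return None  # nothing passed; escalate to a human or a different model
```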

4. How does Seedance 1.5 Pro handle moderation and content limits?

Seedance applies platform-level safety filters inherited from ByteDance policies. Specific prompts may be constrained without detailed feedback, so you should design workflows that allow prompt variation and fallback models when testing sensitive content.
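A sketch of the "prompt variation and fallback model" design: try the original prompt and pre-approved rewrites on the primary model, then on fallback models, logging every rejection since filters often return no detailed reason. `ModerationRejected` and the model names are hypothetical; map them to the error type and models your provider actually exposes.

```python
from typing import Callable, Sequence

class ModerationRejected(Exception):
    """Hypothetical error for a prompt blocked by a provider's safety filter."""

def generate_with_fallback(prompt: str,
                           rewrites: Sequence[str],
                           models: Sequence[str],
                           generate: Callable[[str, str], bytes]) -> tuple[str, str, bytes]:
    """Try the prompt and its rewrites on each model in order; return the first accepted result."""
    rejected: list[tuple[str, str]] = []
    for model in models:
        for candidate in (prompt, *rewrites):
            try:
                return model, candidate, generate(model, candidate)
            except ModerationRejected:
                # Record what was blocked so the team can review patterns later.
                rejected.append((model, candidate))
    raise RuntimeError(f"all {len(rejected)} model/prompt combinations were rejected: {rejected}")
```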